Differential Private Federated Learning

Federated learning (FL), a type of collaborative, distributed machine learning framework, helps protect users' private data while still training useful models from that data. Differential privacy has emerged as the de facto standard for privacy protection in federated learning due to its rigorous mathematical foundation and provable guarantees. In differentially private federated learning (DPFL), gradient clipping and random noise addition disproportionately affect … Nevertheless, privacy leakage may still happen. The aim is to hide clients' contributions during … We tackle this problem and propose an algorithm for client-sided, differential-privacy-preserving federated optimization. In this paper, to effectively prevent information leakage, we propose a novel framework based on the concept of differential …
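The gradient clipping and random noise addition mentioned above can be illustrated with a minimal sketch. It is not the algorithm of any of the works quoted on this page. It assumes a generic Gaussian-mechanism style of aggregation: each client update is clipped to an L2 norm bound, the clipped updates are averaged, and Gaussian noise is added to the average. The names `clip_update`, `dp_aggregate`, `clip_norm`, and `noise_multiplier` are hypothetical, and privacy accounting (computing the resulting privacy budget) is left out.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale `update` so that its L2 norm is at most `clip_norm`."""
    norm = math.sqrt(sum(x * x for x in update))
    # Updates already inside the bound (or all-zero) are left unchanged.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_aggregate(client_updates, clip_norm, noise_multiplier, rng):
    """Average clipped client updates and add Gaussian noise to the mean.

    Assumption: the noise standard deviation on the mean is
    noise_multiplier * clip_norm / number_of_clients.
    """
    clipped = [clip_update(u, clip_norm) for u in client_updates]
    n = len(clipped)
    dim = len(clipped[0])
    mean = [sum(u[i] for u in clipped) / n for i in range(dim)]
    sigma = noise_multiplier * clip_norm / n
    return [m + rng.gauss(0.0, sigma) for m in mean]


# Hypothetical usage: three clients whose updates differ widely in magnitude.
rng = random.Random(0)
updates = [[0.1, -0.2], [1.5, 2.0], [-30.0, 40.0]]
noisy_mean = dp_aggregate(updates, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
```

In this sketch the third client's update is scaled down by a factor of 50 while the first is untouched, which gives a feel for why clipping can affect clients unevenly: the larger an update, the more of it is discarded before noise is added.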

From www.mdpi.com

Applied Sciences Free Full-Text Kalman Filter-Based Differential

From www.semanticscholar.org

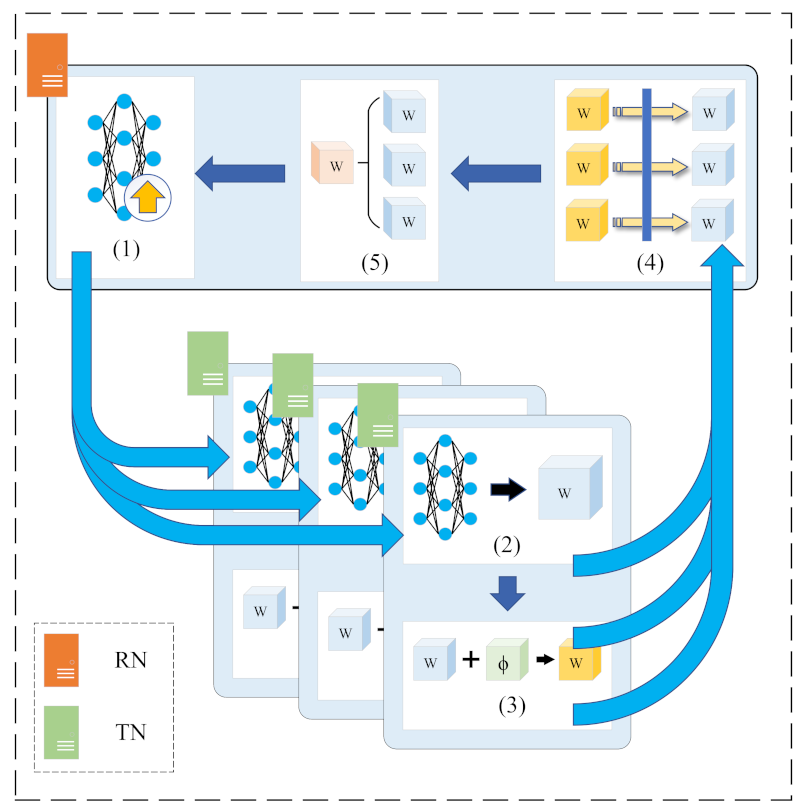

Figure 2 from Heterogeneous Differential-Private Federated Learning

From www.researchgate.net

Federated learning framework with differential privacy update

From decentrapress.com

Understanding Federated Learning and its Benefits Decentrapress

From www.semanticscholar.org

FedSeC a Robust Differential Private Federated Learning Framework in

From www.semanticscholar.org

Figure 1 from Adaptive Local Steps Federated Learning with Differential

From www.semanticscholar.org

Figure 3 from Signal Modulation Recognition Method Based on

From blog.ml.cmu.edu

On Privacy and Personalization in Federated Learning A Retrospective

From laptrinhx.com

Private Deep Learning of Medical Data for Hospitals using Federated

From www.mdpi.com

Electronics Free Full-Text Vertically Federated Learning with

From imirzadeh.me

Private AI Differential Privacy and Federated Learning Iman Mirzadeh

From www.semanticscholar.org

Differential Privacy Federated Learning based Privacy-Preserving

From www.aimodels.fyi

Enhancing Federated Learning with Adaptive Differential Privacy and

From www.mdpi.com

Future Free Full-Text Differential Private Federated

From ai.googleblog.com

Distributed differential privacy for federated learning Google AI Blog

From www.mdpi.com

Future Free Full-Text Exploring Homomorphic Encryption and

From odymit.github.io

Privacy and Robustness in Federated Learning Attacks and Defenses

From www.semanticscholar.org

Figure 1 from Locally Differential Private Federated Learning with

From www.mdpi.com

Applied Sciences Free Full-Text Local Differential Privacy-Based

From towardsdatascience.com

AI Differential Privacy and Federated Learning by Pier Paolo Ippolito

From speakerdeck.com

Secure and Private Federated Learning with Differential Privacy

From www.youtube.com

Brendan McMahan Guarding user Privacy with Federated Learning and

From www.researchgate.net

Different models of differential privacy in Federated Learning. Red

From aigptnow.com

Understanding the Basics of Differential Privacy and Federated Learning

From jeit.ac.cn

A Study of Local Differential Privacy Mechanisms Based on Federated

From www.scirp.org

A Systematic Survey for Differential Privacy Techniques in Federated

From blog.openmined.org

Private Deep Learning of Medical Data for Hospitals using Federated

From www.researchgate.net

(PDF) Differential Private Federated Learning for Privacy-Preserving

From medium.com

Differentially Private Federated Learning A Client Level Perspective

From vtiya.medium.com

The difference between differential privacy and federated learning by

From www.semanticscholar.org

Figure 1 from Differentially Private Federated Learning with

From www.mdpi.com

Electronics Free Full-Text Privacy-Enhanced Federated Learning A
