Time Distributed Keras

The TimeDistributed layer in Keras is a wrapper layer that applies another layer to every time step of a sequence. Its signature is `keras.layers.TimeDistributed(layer, **kwargs)`: the wrapper applies `layer` to every temporal slice of an input, that is, along the input's temporal dimension. Every input should be at least 3-D, and the axis right after the batch axis is treated as the temporal one. A common point of confusion is what "applies a layer to every time step" means in practice: the same wrapped layer, with the same weights, is applied independently to each slice.

Consider the RepeatVector layer, which repeats its incoming input a specified number of times to turn a single vector into a sequence. But what if you need to adapt each element of that sequence before or after this layer? This is where the TimeDistributed layer can give a hand. Keras provides it for exactly this situation, so we will first try to understand how it works and then use a simple sequence learning problem to demonstrate it.
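To make the behavior concrete, here is a minimal NumPy sketch of what the wrapper does; it is an illustration, not Keras's own implementation. The helpers `dense`, `repeat_vector` and `time_distributed` are hypothetical stand-ins for `keras.layers.Dense`, `RepeatVector` and `TimeDistributed`, and the shapes (a batch of 32 vectors of size 16, repeated over 10 time steps and projected to 8 features) are arbitrary choices. The sketch merges the batch and time axes, applies one set of weights once, splits the axes back, and then checks the result against an explicit loop over time steps.

```python
import numpy as np

def dense(x, kernel, bias):
    """Hypothetical stand-in for a Dense layer: maps the last axis in_dim -> out_dim."""
    return x @ kernel + bias

def repeat_vector(x, n):
    """Hypothetical stand-in for RepeatVector: (batch, features) -> (batch, n, features)."""
    return np.repeat(x[:, np.newaxis, :], n, axis=1)

def time_distributed(layer_fn, x):
    """Apply layer_fn independently to every temporal slice x[:, t, ...].

    Axis 1 is treated as time. Batch and time are merged so the layer runs once
    with a single set of weights, then the two axes are split apart again.
    """
    batch, steps = x.shape[0], x.shape[1]
    flat = x.reshape((batch * steps,) + x.shape[2:])
    out = layer_fn(flat)
    return out.reshape((batch, steps) + out.shape[1:])

rng = np.random.default_rng(0)
kernel = rng.normal(size=(16, 8))   # shared weights for every time step
bias = np.zeros(8)

encoded = rng.normal(size=(32, 16))   # (batch, features)
seq = repeat_vector(encoded, 10)      # (batch, time, features) = (32, 10, 16)
out = time_distributed(lambda v: dense(v, kernel, bias), seq)
print(out.shape)  # (32, 10, 8)

# Same result as looping over the time axis and applying the layer per step
looped = np.stack([dense(seq[:, t], kernel, bias) for t in range(seq.shape[1])], axis=1)
print(np.allclose(out, looped))  # True
```

In Keras itself, the corresponding stack would be `RepeatVector(10)` followed by `TimeDistributed(Dense(8))`. Because a dense layer only acts on the last axis, `seq @ kernel + bias` on the 3-D tensor gives the same result here, which is why `TimeDistributed(Dense(...))` and a plain `Dense(...)` behave the same on 3-D inputs; the wrapper matters more for layers such as `Conv2D` that expect a fixed input rank.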

From www.scaler.com

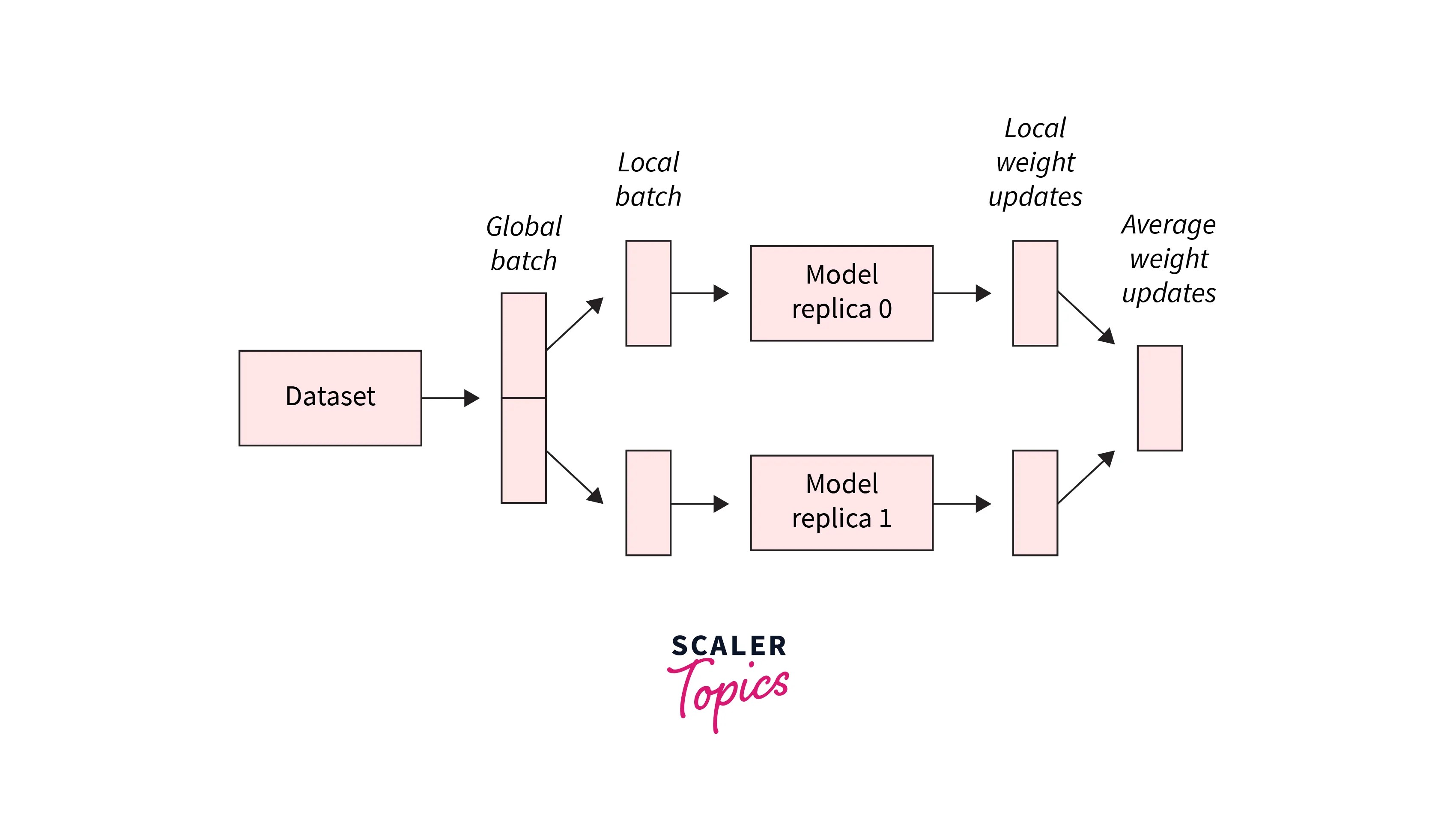

Distributed Training for Standard Training Loops in Keras (Scaler Topics)

From stackoverflow.com

tensorflow: Keras TimeDistributed for multi-input case? (Stack Overflow)

From github.com

GitHub: harvitronix/supersimpledistributedkeras: A super simple way

From www.youtube.com

Understanding TimeDistributed layer in TensorFlow, Keras in Urdu

From github.com

Time_Distributed Keras vs TF Keras · Issue 21304 · tensorflow

From datascience.stackexchange.com

machine learning: time series prediction with several independent

From www.youtube.com

PYTHON: What is the role of TimeDistributed layer in Keras? (YouTube)

From github.com

How to fix dense and timedistributed error in python? · Issue 12550

From 9to5answer.com

[Solved] TimeDistributed(Dense) vs Dense in Keras Same (9to5Answer)

From www.scaler.com

Distributed Training with Keras (Scaler Topics)

From stackoverflow.com

python: Keras Embedding layer (Stack Overflow)

From www.pinterest.es

Keras/TF Time Distributed CNN+LSTM for visual recognition (Kaggle)

From data-flair.training

Keras Multi-GPU and Distributed Training Mechanism with Examples

From blog.csdn.net

[PyTorch notes] What is PyTorch's distributed torch.distributed.launch command actually doing? (CSDN blog)

From www.business-science.io

Time Series Analysis: KERAS LSTM Deep Learning, Part 1

From stackoverflow.com

neural network: Issue with TimeDistributed LSTMs (Stack Overflow)

From www.web-dev-qa-db-fra.com

python: How to configure 1D convolution and LSTM in Keras

From www.slidestalk.com

Analytics Zoo: Distributed TensorFlow, Keras and BigDL in product

From medium.com

Mastering Distributed Training with Keras 3: A Complete Guide to the

From github.com

Use LSTM for recurrent convolutional network without time distributed

From github.com

plot_model's expand_nested looks wonky for model with multiple time

From github.com

ValueError: Error when checking model target: expected time_distributed

From github.com

Variational network shape error when using time

From towardsdatascience.com

Time Series Forecasting: Prediction Intervals, by Brendan Artley

From link.springer.com

Correction to: Speech emotion recognition using time distributed 2D

From medium.com

Mastering Multi-GPU Distributed Training for Keras Models Using PyTorch

From climate.com

Disease ID: How We Scaled Our Deep Learning Model with Distributed Training

From www.telesens.co

Distributed data parallel training using PyTorch on AWS (Telesens)

From www.desertcart.co.za

Buy Hands-On Guide To IMAGE CLASSIFICATION Using Scikit-Learn, Keras

From harareschoolofai.github.io

Keras Introduction

From blog.twitter.com

Distributed training of sparse ML models, Part 1: Network bottlenecks

From github.com

Keras TimeDistributed on a Model creates duplicate layers, and is
