Clamp vs Clip in PyTorch.

The `clamp()` function in PyTorch clamps all elements in the input tensor into the range specified by `min` and `max`. `torch.clip` is an alias for `torch.clamp`, with the signatures `torch.clip(input, min=None, max=None, *, out=None) → Tensor` and `Tensor.clip(min=None, max=None) → Tensor`. With `min=0.4` and `max=0.6`, any value below 0.4 is clamped to 0.4, any value above 0.6 is clamped to 0.6, and any value in between is left unchanged.

A common question concerns array-valued bounds: in NumPy, `np.clip(x, min, max)` accepts an array of min/max values, but PyTorch only ... How to implement it in ...

An example with a complex tensor: for `a = torch.tensor([3+4j])`, `torch.clamp(a, 2+3j, 1+5j)` gives `tensor([(3.0000 + 4.0000j)], dtype=torch.complex128)`, and `torch.clamp(a, 1+5j, 2)` gives `tensor([(1.0000 + ...`

A related topic is gradient clipping: what it is and how it occurs.
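To make the clamping rule above concrete, here is a minimal sketch in plain Python. It is not the PyTorch implementation: plain lists stand in for tensors, and the helper names `clamp` and `_bound_at` are hypothetical, chosen only for illustration. It covers both scalar bounds (the 0.4 / 0.6 case) and per-element bounds of the kind `np.clip` accepts.

```python
from typing import List, Optional, Sequence, Union

# A bound is either absent, one number for all elements, or one number per element.
Bound = Union[None, float, Sequence[float]]


def _bound_at(bound: Bound, i: int) -> Optional[float]:
    """Return the bound for position i; a scalar bound applies to every position."""
    if bound is None or isinstance(bound, (int, float)):
        return bound
    return bound[i]


def clamp(values: Sequence[float], lo: Bound = None, hi: Bound = None) -> List[float]:
    """Limit each value to [lo, hi], element by element (hypothetical helper)."""
    out = []
    for i, v in enumerate(values):
        lo_i = _bound_at(lo, i)
        hi_i = _bound_at(hi, i)
        if lo_i is not None:
            v = max(v, lo_i)  # raise values that fall below the lower bound
        if hi_i is not None:
            v = min(v, hi_i)  # lower values that exceed the upper bound
        out.append(v)
    return out


# "clip" is just another name for the same operation in this sketch.
clip = clamp

# Scalar bounds: values below 0.4 become 0.4, values above 0.6 become 0.6.
scalar_result = clamp([0.1, 0.45, 0.5, 0.9], 0.4, 0.6)

# Per-element bounds: each position has its own lower and upper limit.
per_element_result = clamp([0.1, 0.5, 0.9], [0.2, 0.0, 0.0], [1.0, 0.3, 0.8])

print(scalar_result)       # [0.4, 0.45, 0.5, 0.6]
print(per_element_result)  # [0.2, 0.3, 0.8]
```

Applying the lower bound first and the upper bound second means that when a lower bound exceeds its upper bound, the result is the upper bound.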

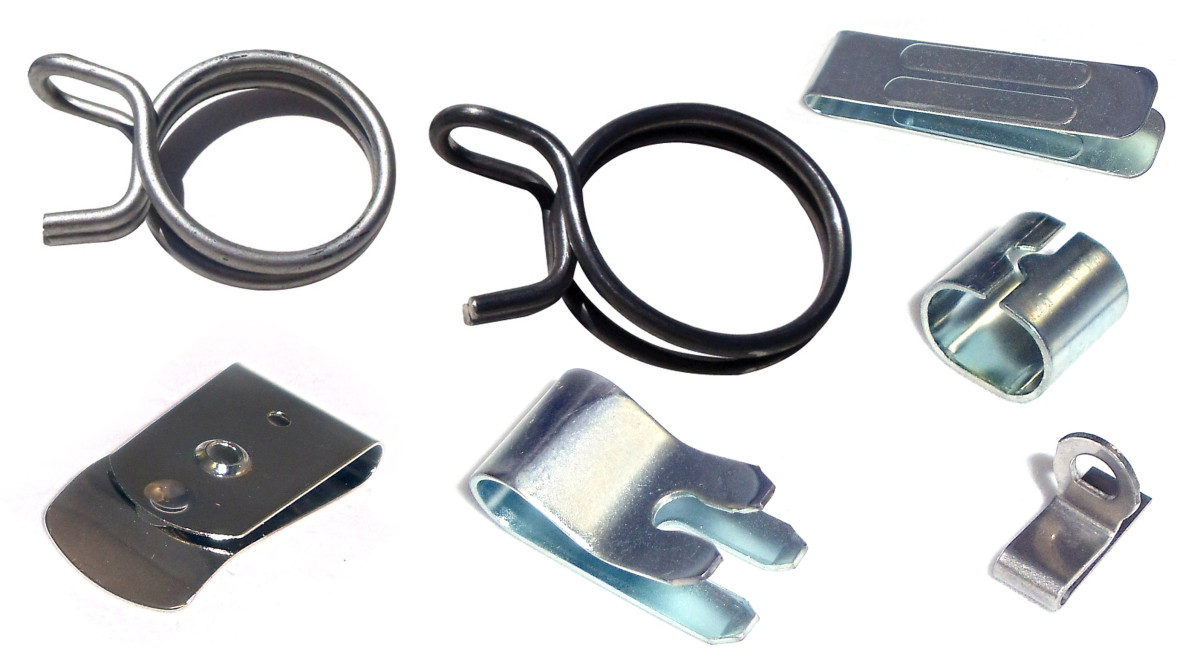

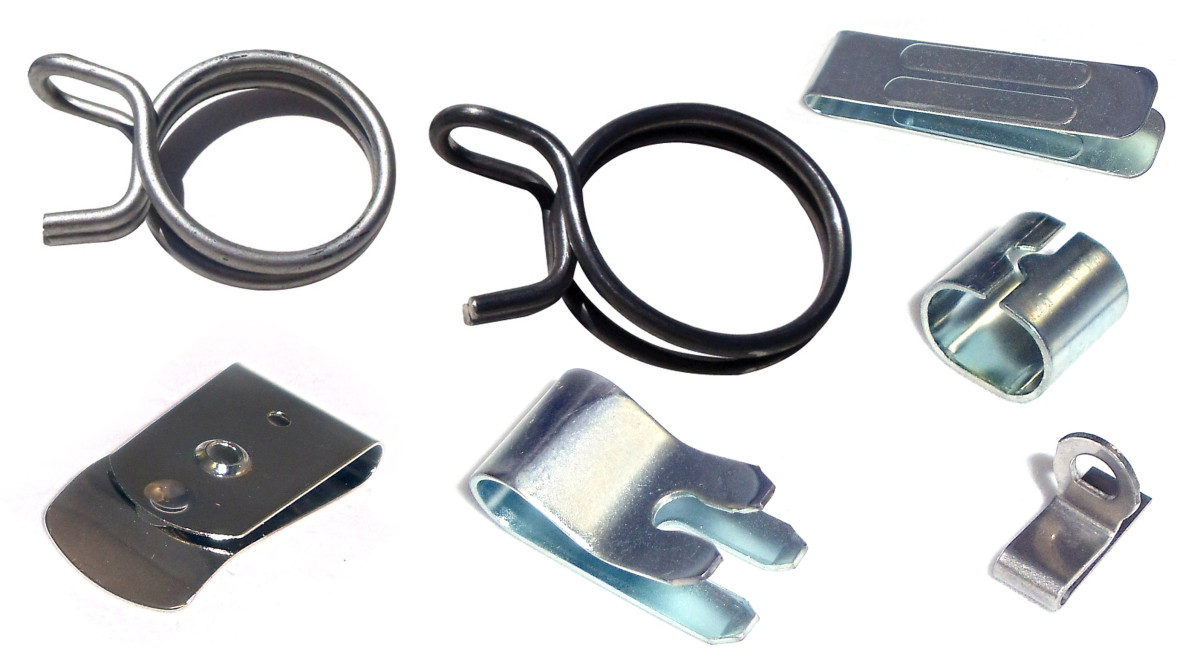



