torch.nn.Embedding Example

In PyTorch, an embedding layer is available through the torch.nn.Embedding class. nn.Embedding is a layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. This mapping is done through an embedding matrix, which is a learnable weight matrix with one row per vocabulary entry. In other words, the layer is a simple lookup table: each index value selects the corresponding row of that matrix. This simple operation is the foundation of many advanced models.

The class signature is torch.nn.Embedding(num_embeddings, embedding_dim, padding_idx=None, max_norm=None, norm_type=2.0, ...). The module takes two required arguments: the vocabulary size (num_embeddings) and the dimensionality of the embeddings (embedding_dim). For example, with a vocabulary of 1000 words, nn.Embedding(1000, 30) creates a 30-dimensional vector for each word.

To use the layer, first define the vocabulary and encode it, i.e. assign a unique number to each word. Once the embedding layer and the encoded vocabulary are in place, those numbers are the indices passed to the layer. To start from existing vectors rather than random ones, we must build a matrix of weights that will be loaded into the PyTorch embedding layer.

An embedding layer is also equivalent to a linear layer (without the bias term) applied to one-hot encoded indices, which can be shown through a simple example in PyTorch.
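The ideas above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the index values in `word_ids` are made up, and the random `weights_matrix` only stands in for real pre-trained vectors. It shows a basic lookup with `nn.Embedding(1000, 30)`, loading a prepared weight matrix with `nn.Embedding.from_pretrained`, and the equivalence with a bias-free `nn.Linear` applied to one-hot inputs.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # only to make the sketch reproducible

vocab_size, embedding_dim = 1000, 30  # sizes taken from the example in the text

# 1) Basic lookup: each integer index selects one row of the weight matrix.
embedding = nn.Embedding(vocab_size, embedding_dim)
word_ids = torch.tensor([[1, 5, 42], [7, 0, 999]])  # hypothetical encoded sentences
vectors = embedding(word_ids)
lookup_shape = tuple(vectors.shape)  # (batch, sequence length, embedding_dim)

# 2) Loading a prepared weight matrix.
#    Random values are an assumption standing in for real pre-trained vectors.
weights_matrix = torch.randn(vocab_size, embedding_dim)
pretrained = nn.Embedding.from_pretrained(weights_matrix, freeze=True)
rows_match = torch.equal(
    pretrained(torch.tensor([3])), weights_matrix[3].unsqueeze(0)
)

# 3) Equivalence with a linear layer without bias, fed one-hot vectors.
linear = nn.Linear(vocab_size, embedding_dim, bias=False)
with torch.no_grad():
    # nn.Linear stores its weight as (out_features, in_features), hence the transpose.
    linear.weight.copy_(embedding.weight.t())
one_hot = F.one_hot(word_ids, num_classes=vocab_size).float()
same_output = torch.allclose(linear(one_hot), embedding(word_ids))

print(lookup_shape, rows_match, same_output)
```

The lookup form is usually preferred in practice because it avoids materializing the one-hot tensor, whose last dimension is as large as the vocabulary.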

From www.tutorialexample.com


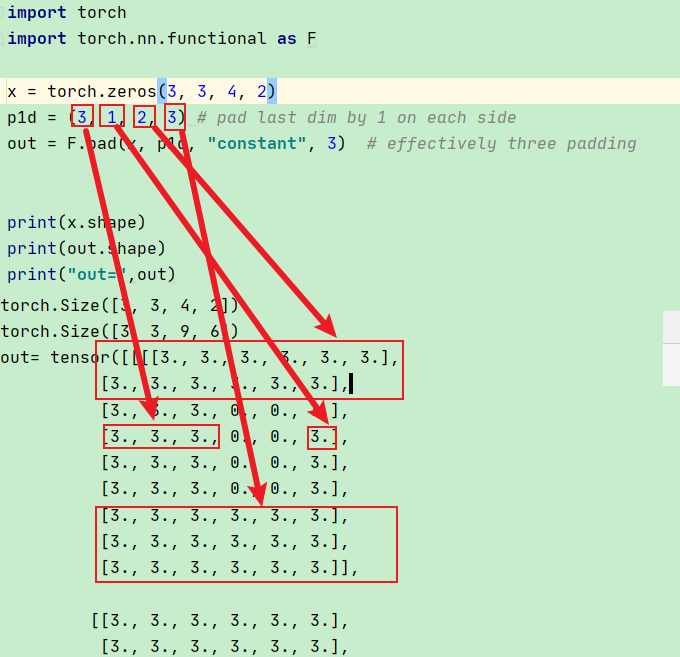

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial


From blog.csdn.net

Understanding nn.embedding: how nn.embedding handles float (CSDN blog)

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From blog.csdn.net

The nn.embedding function explained in detail (PyTorch) (CSDN blog)

From www.programmersought.com

nn.moduleList and Sequential of pytorch Programmer Sought

From zhuanlan.zhihu.com

PyTorch in depth 1: torch.nn.Module methods and source code (Zhihu)

From pythonguides.com

PyTorch Nn Conv2d [With 12 Examples] Python Guides

From blog.csdn.net

What is an embedding (encoding an object as a low-dimensional dense vector), and how nn.Embedding works and is used in PyTorch

From jamesmccaffrey.wordpress.com

PyTorch Word Embedding Layer from Scratch James D. McCaffrey

From blog.csdn.net

How torch.nn.embedding works (CSDN blog)

From www.youtube.com

torch.nn.Embedding How embedding weights are updated in

From blog.csdn.net

torch.nn.Embedding() parameters explained (CSDN blog)

From blog.csdn.net

PyTorch notes: torch.nn.Embedding and PyTorch embedding weights (CSDN blog)

From www.youtube.com

torch.nn.Embedding explained (+ Characterlevel language model) YouTube

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

[Learning PyTorch] nn.Embedding explained and how to use it (CSDN blog)

From discuss.pytorch.org

Understanding how filters are created in torch.nn.Conv2d nlp

From blog.csdn.net

Freezing torch.nn.Embedding(): fixed embedding initialization (CSDN blog)

From blog.csdn.net

[Detailed explanation] The torch.nn.Fold and torch.nn.Unfold operations (CSDN blog)

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers Scaler Topics

From blog.csdn.net

torch.nn.Embedding parameters in detail: num_embeddings and embedding_dim (CSDN blog)

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums

From blog.51cto.com

[PyTorch Basics Tutorial 28] A brief look at torch.nn.embedding (51CTO blog, PyTorch tutorial)

From www.tutorialexample.com

torch.nn.Linear() weight Shape Explained PyTorch Tutorial

From discuss.pytorch.org

[Solved, Self Implementing] How to return sparse tensor from nn

From stackoverflow.com

python Understanding torch.nn.LayerNorm in nlp Stack Overflow

From www.researchgate.net

Looplevel representation for torch.nn.Linear(32, 32) through

From zhuanlan.zhihu.com

How to use torch.nn.Embedding (Zhihu)

From zhuanlan.zhihu.com

torch.nn: Normalization Layers (Zhihu)

From blog.csdn.net

[Python functions] Illustrated guide to using torch.nn.Embedding (CSDN blog)

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

Understanding nn.Embedding, and the problem that nn.Embedding cannot embed a single numeric value (CSDN blog)

From blog.csdn.net

[Python functions] Illustrated guide to using torch.nn.Embedding (CSDN blog)

From sebarnold.net

nn package — PyTorch Tutorials 0.2.0_4 documentation

From blog.csdn.net

[Learning PyTorch] nn.Embedding explained and how to use it (CSDN blog)

From blog.csdn.net

PyTorch nn.Embedding: usage and understanding (CSDN blog)