Weight Decay Machine Learning

Weight decay is a regularization technique used in machine learning, and especially in deep learning, to prevent overfitting and reduce the complexity of a model. It works by adding a small penalty to the loss function, usually based on the ℓ2 norm of the model's weights. Concretely, the weight decay term λ∥w∥², whose strength is set by the hyperparameter λ > 0, is added to the loss function L(w), giving the regularized objective L(w) + λ∥w∥². When the model is optimized by minibatch stochastic gradient descent, this particular choice of regularizer is known in the machine learning literature as weight decay, because each update shrinks (decays) the weights toward zero. Outside of deep learning circles it is more commonly called ℓ2 regularization. The ℓ2 norm and the ℓ1 norm are both special cases of the more general ℓp norm, ∥w∥_p = (Σ_i |w_i|^p)^(1/p).
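The pieces described above can be sketched in plain Python. This sketch assumes a list of scalar weights and a precomputed data loss and gradient. The helper names `lp_norm`, `penalized_loss` and `sgd_step` are illustrative and do not come from any library. The sketch computes ℓp norms, adds the penalty λ∥w∥² to a loss, and shows why one gradient step on the penalized objective multiplies each weight by (1 − 2ηλ), where η is the learning rate.

```python
def lp_norm(w, p):
    """Return the l_p norm (sum_i |w_i|^p)^(1/p) of a list of weights."""
    return sum(abs(x) ** p for x in w) ** (1.0 / p)


def penalized_loss(data_loss, w, lam):
    """Data loss L(w) plus the weight decay term lam * ||w||_2^2."""
    return data_loss + lam * sum(x * x for x in w)


def sgd_step(w, data_grad, lr, lam):
    """One gradient step on L(w) + lam * ||w||_2^2.

    The penalty's gradient is 2 * lam * w, so the update is
    w <- (1 - 2 * lr * lam) * w - lr * data_grad: each step first
    scales every weight toward zero, then applies the data gradient.
    """
    return [(1 - 2 * lr * lam) * wi - lr * gi for wi, gi in zip(w, data_grad)]


w = [3.0, -4.0]
print(lp_norm(w, 1))  # 7.0
print(lp_norm(w, 2))  # 5.0
print(penalized_loss(1.0, w, lam=0.1))
# With a zero data gradient only the decay acts: both weights shrink by 2%.
print(sgd_step(w, [0.0, 0.0], lr=0.1, lam=0.1))
```

The multiplicative factor (1 − 2ηλ) is where the name "weight decay" comes from. If the penalty is written as (λ/2)∥w∥² instead, the factor becomes (1 − ηλ).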

From discuss.pytorch.org

Weight decay and ℓ2 regularization are often treated as the same idea, and under plain stochastic gradient descent they are indeed equivalent. The penalty λ∥w∥² contributes 2λw to the gradient, so with learning rate η every step multiplies each weight by (1 − 2ηλ) before applying the data gradient. Recent work, however, highlights that the role of weight decay in modern deep learning is different from its classical regularization effect.
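The SGD equivalence can be checked numerically with a minimal plain-Python sketch. The function names are illustrative, not from any library. With the penalty written as λ∥w∥², adding its gradient 2λw to the data gradient gives the same update as a plain SGD step followed by a separate shrink with coefficient wd = 2λ. Under the common (λ/2)∥w∥² convention the two coefficients coincide.

```python
def l2_penalty_step(w, grad, lr, lam):
    """SGD on L(w) + lam * ||w||^2: the penalty adds 2 * lam * w to the gradient."""
    return [wi - lr * (gi + 2 * lam * wi) for wi, gi in zip(w, grad)]


def decoupled_decay_step(w, grad, lr, wd):
    """Plain SGD step on L(w), plus a separate shrink of the weights by lr * wd."""
    return [wi - lr * gi - lr * wd * wi for wi, gi in zip(w, grad)]


w, grad = [0.5, -1.2, 2.0], [0.1, 0.3, -0.4]
a = l2_penalty_step(w, grad, lr=0.05, lam=0.01)
b = decoupled_decay_step(w, grad, lr=0.05, wd=2 * 0.01)
# Identical updates up to floating-point rounding.
print(all(abs(x - y) < 1e-12 for x, y in zip(a, b)))  # True
```

This identity relies on the update being a plain gradient step. Optimizers that rescale the gradient per parameter, such as Adam, break it.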

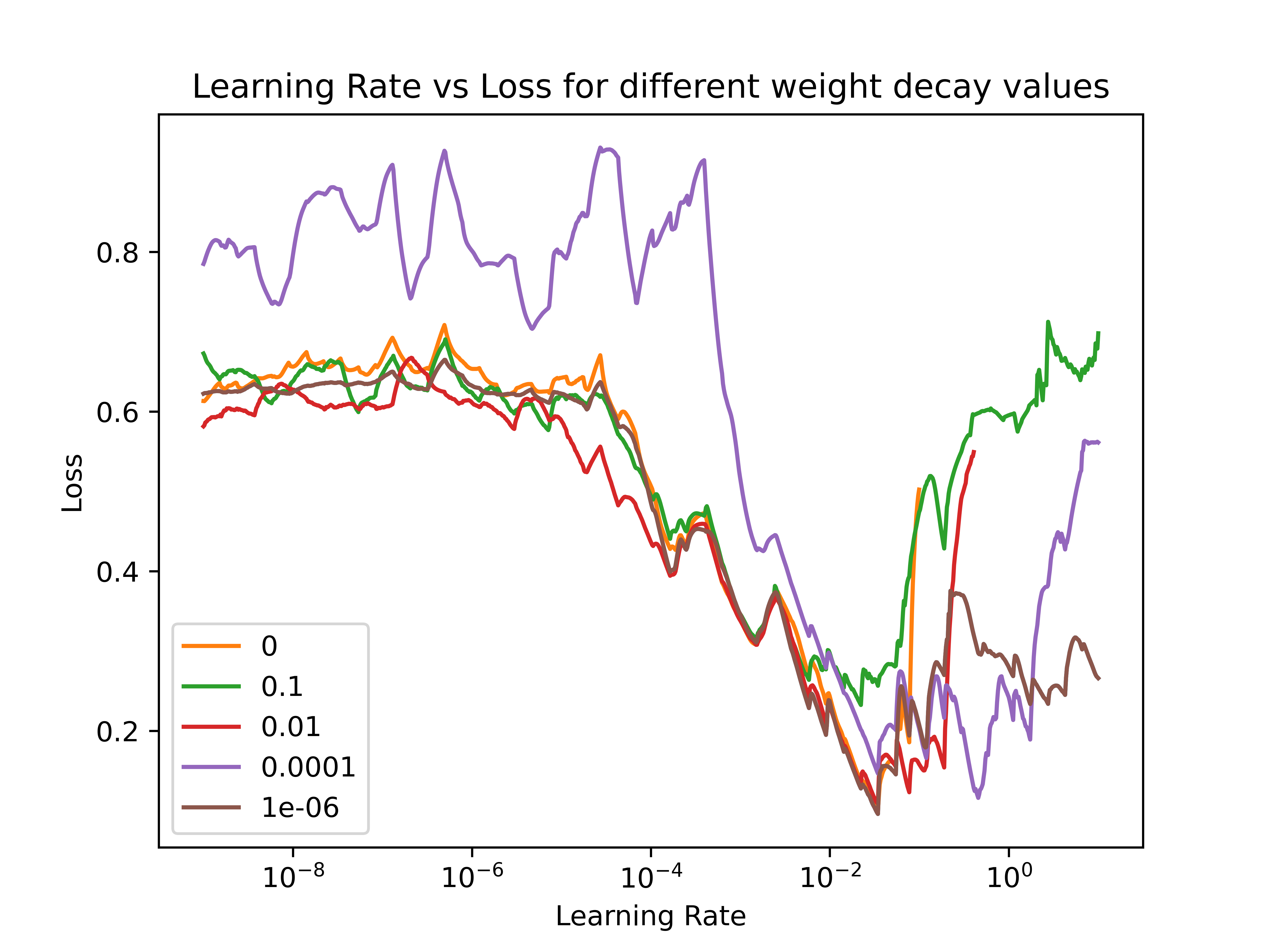

LR finder for different weight decay values (cycle LR, Hyperparameter

Weight decay and ℓ2 regularization are widely studied topics in machine learning. As a regularization method, weight decay aims to make models generalize better by encouraging them to learn smoother functions. In the PyTorch forum discussion of the LR finder shown above, three weight decay values were compared: the default of 0.01, the best value of 0.1, and a large value of 10.
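The effect of the decay strength can be illustrated on a toy least-squares problem with a single weight. This is a minimal sketch with made-up data, not a reproduction of the LR-finder experiment. It reuses the values 0.01, 0.1 and 10 only to show that a larger λ pulls the optimal weight toward zero. `ridge_weight` is a hypothetical helper that uses the closed-form minimizer.

```python
def ridge_weight(xs, ys, lam):
    """Minimizer of sum((y - w*x)^2) + lam * w^2 for a single weight w.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x^2) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # toy data, roughly y = 2x
for lam in (0.01, 0.1, 10.0):
    print(f"lambda={lam:>5}: w = {ridge_weight(xs, ys, lam):.4f}")
```

With λ = 0.01 or 0.1 the weight stays close to the unregularized fit. With λ = 10 it is noticeably shrunk, which is the underfitting risk of a large decay value.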

From www.slideserve.com

PPT Lecture 1 Learning without Overlearning PowerPoint Presentation

From www.researchgate.net

Graph representing the learning rate associated with weight decay

From towardsdatascience.com

This thing called Weight Decay. Learn how to use weight decay to train

From www.semanticscholar.org

[PDF] Fixing Weight Decay Regularization in Adam Semantic Scholar
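The paper listed above argues that ℓ2 regularization and weight decay stop being equivalent under adaptive optimizers such as Adam. The following is a minimal single-weight sketch. It assumes standard Adam hyperparameters and a decoupled decay that is multiplied by the learning rate, which is the convention PyTorch's `AdamW` documents. `adam_step` and `new_state` are illustrative helpers, not library functions. With a zero data gradient, the coupled penalty gets divided by Adam's adaptive denominator, so the shrink is about `lr` whatever λ is. The decoupled decay, by contrast, scales with λ.

```python
import math


def new_state():
    """Fresh Adam state for one scalar weight."""
    return {"t": 0, "m": 0.0, "v": 0.0}


def adam_step(w, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
              l2=0.0, decoupled_wd=0.0):
    """One Adam update for a scalar weight.

    l2:           coupled penalty; l2 * w is added to the gradient and is
                  therefore rescaled by the adaptive denominator (Adam + L2).
    decoupled_wd: decoupled decay; w is shrunk by lr * decoupled_wd * w
                  outside the adaptive update (AdamW-style).
    """
    g = grad + l2 * w
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    w = w - lr * decoupled_wd * w
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


# Zero data gradient, so any change in w comes from the regularizer alone.
for lam in (0.001, 0.1):
    coupled = adam_step(1.0, 0.0, new_state(), l2=lam)
    decoupled = adam_step(1.0, 0.0, new_state(), decoupled_wd=lam)
    print(f"lambda={lam}: Adam+L2 shrink = {1.0 - coupled:.2e}, "
          f"decoupled shrink = {1.0 - decoupled:.2e}")
```

A 100-fold change in λ barely changes the Adam + ℓ2 step, while the decoupled decay changes 100-fold. This is the mismatch that motivates decoupling the decay from the gradient.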

From medium.com

Techniques of Weight Decay part3(Machine Learning) by Monodeep

From www.researchgate.net

Our neural network settings weight matrix W and bias b are

From www.researchgate.net

Accuracy versus Weight decay and Learning rate parameters.

From www.youtube.com

44 Weight Decay in Neural Network with PyTorch L2 Regularization

From stackoverflow.com

python How does a decaying learning rate schedule with AdamW
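PyTorch's `torch.optim.AdamW` multiplies the decoupled decay by the current learning rate, so a decaying learning-rate schedule also weakens the decay over training. The following minimal sketch isolates only the decay term, ignoring gradient contributions. It assumes cosine annealing to zero. `cosine_lr` and `cumulative_decay` are illustrative helpers, not library functions.

```python
import math


def cosine_lr(step, total_steps, base_lr):
    """Cosine annealing from base_lr down to 0 over total_steps."""
    return 0.5 * base_lr * (1 + math.cos(math.pi * step / total_steps))


def cumulative_decay(total_steps, base_lr, wd, schedule=True):
    """Product of the per-step shrink factors (1 - lr_t * wd) on one weight.

    Only the decoupled decay term is modeled, under the assumption that
    the decay at step t is scaled by the learning rate lr_t.
    """
    factor = 1.0
    for step in range(total_steps):
        lr = cosine_lr(step, total_steps, base_lr) if schedule else base_lr
        factor *= 1 - lr * wd
    return factor


print(cumulative_decay(10_000, 1e-3, 0.01, schedule=False))
print(cumulative_decay(10_000, 1e-3, 0.01, schedule=True))
```

Because the cosine schedule averages roughly half the base rate, the total decay applied over training is roughly halved (about e^-0.05 instead of e^-0.1 here). Any tuned weight decay value is therefore tied to the schedule it was tuned with.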

From towardsdatascience.com

The Serendipitous Effectiveness of Weight Decay in Deep Learning by

From www.youtube.com

Regularization in Deep Learning L2 Regularization in ANN L1

From programmathically.com

Weight Decay in Neural Networks Programmathically

From ee.kaist.ac.kr

Weight Decay Scheduling and Knowledge Distillation for Active Learning

From velog.io

Regularization Weight Decay

From kmoy1.github.io

Improving Neural Network Training — Machine Learning

From www.paperswithcode.com

Weight Decay Explained Papers With Code

From www.youtube.com

Learning Rate decay, Weight initialization YouTube

From vitalflux.com

Weight Decay in Machine Learning Concepts Analytics Yogi

From www.paepper.com

Understanding the difference between weight decay and L2 regularization

From zhuanlan.zhihu.com

L2 Regularization = Weight Decay? Not Quite (Zhihu)

From machinelearningmastery.com

How to Reduce Overfitting With Dropout Regularization in Keras

From www.semanticscholar.org

Figure 1 from Simulated annealing and weight decay in adaptive learning

From www.researchgate.net

(PDF) Adaptive Weight Decay for Deep Neural Networks

From www.researchgate.net

Learning Rate and Weight Decay values used during training of the

From www.researchgate.net

12 Effects of learning rate and weight decay on the validation accuracy

From www.aiproblog.com

Understand the Impact of Learning Rate on Model Performance With Deep

From www.evidentlyai.com

Machine Learning Monitoring, Part 5 Why You Should Care About Data and

From discuss.pytorch.org

About learning rate and weight decay PyTorch Forums

From www.youtube.com

Regularization Weight Decay Regularization Machine Learning

From discuss.pytorch.org

How does SGD weight_decay work? autograd PyTorch Forums
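As documented for PyTorch's `torch.optim.SGD`, `weight_decay` is added to the gradient as `weight_decay * param` before momentum is applied. This corresponds to a penalty of (weight_decay / 2)·∥w∥². The following plain-Python sketch mimics that documented update for a list of scalar parameters, assuming no dampening and no Nesterov momentum. `sgd_weight_decay` is a hypothetical helper, not the PyTorch implementation.

```python
def sgd_weight_decay(params, grads, bufs, lr, weight_decay=0.0, momentum=0.0):
    """One SGD step mimicking the documented torch.optim.SGD update
    (dampening=0, nesterov=False) for a list of scalar parameters.

    bufs holds one momentum buffer per parameter (None before the first step).
    """
    new_params, new_bufs = [], []
    for p, g, b in zip(params, grads, bufs):
        g = g + weight_decay * p  # weight decay enters through the gradient
        if momentum:
            # The decay term is accumulated in the momentum buffer too.
            b = g if b is None else momentum * b + g
            g = b
        new_params.append(p - lr * g)
        new_bufs.append(b)
    return new_params, new_bufs


# Zero data gradient: only the decay acts on the weight.
for mom in (0.0, 0.9):
    params, bufs = [1.0], [None]
    for _ in range(3):
        params, bufs = sgd_weight_decay(params, [0.0], bufs, lr=0.1,
                                        weight_decay=0.1, momentum=mom)
    print(f"momentum={mom}: weight after 3 steps = {params[0]:.6f}")
```

Without momentum the weight is multiplied by (1 − lr·weight_decay) = 0.99 at every step. With momentum the decay term is carried forward in the buffer, so the weight shrinks faster.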

From wandb.ai

Fundamentals of Neural Networks on Weights & Biases