PyTorch Gradient Example

Automatic differentiation allows you to compute gradients of tensors. In PyTorch, gradients are an integral part of automatic differentiation (autograd), which is a key feature provided by the framework. There are several ways to create gradient-enabled tensors, but by PyTorch's design only floating-point (and complex) tensors can require gradients. When `backward()` is called on a non-scalar tensor Q, its `gradient` argument is a tensor of the same shape as Q, and it represents the gradient of Q w.r.t. itself. Separately, `torch.gradient(input, *, spacing=1, dim=None, edge_order=1) → List of Tensors` estimates gradients numerically from sampled values rather than through autograd. Gradient descent is an iterative optimization method used to find a minimum of an objective function by repeatedly updating values.
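The points above can be sketched in a few lines. This is a minimal illustration, not code from any of the linked pages: the tensor values, the function `Q = 3a^3 - b^2`, the objective `(w - 3)^2`, and the step size `lr` are all assumptions chosen so the results are easy to check by hand.

```python
import torch

# 1. Two common ways to create gradient-enabled tensors (floating-point dtype).
a = torch.tensor([2.0, 3.0], requires_grad=True)  # flag set at construction
b = torch.ones(2).requires_grad_()                # flag set in place afterwards

# 2. Q is not a scalar, so backward() needs a `gradient` tensor shaped like Q.
Q = 3 * a**3 - b**2
Q.backward(gradient=torch.ones_like(Q))           # dQ/dQ = 1 for every element
# Analytically, dQ/da = 9 * a**2 and dQ/db = -2 * b.
print(a.grad, b.grad)

# 3. Integer tensors cannot require gradients; PyTorch raises a RuntimeError.
try:
    torch.tensor([1, 2], requires_grad=True)
    int_grad_allowed = True
except RuntimeError:
    int_grad_allowed = False

# 4. torch.gradient is a finite-difference estimate on samples, not autograd.
samples = torch.tensor([1.0, 4.0, 9.0, 16.0])     # x**2 sampled at x = 1, 2, 3, 4
(estimate,) = torch.gradient(samples)             # default spacing=1, edge_order=1

# 5. Plain gradient descent on the assumed objective f(w) = (w - 3)**2.
w = torch.tensor(0.0, requires_grad=True)
lr = 0.1  # assumed step size
for _ in range(100):
    loss = (w - 3) ** 2
    loss.backward()            # fills w.grad with df/dw
    with torch.no_grad():
        w -= lr * w.grad       # update outside the autograd graph
    w.grad.zero_()             # gradients accumulate, so reset each step
print(w.item())
```

Gradients accumulate into `.grad` across calls to `backward()`, which is why the loop zeroes `w.grad` after every update.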

From debuggercafe.com

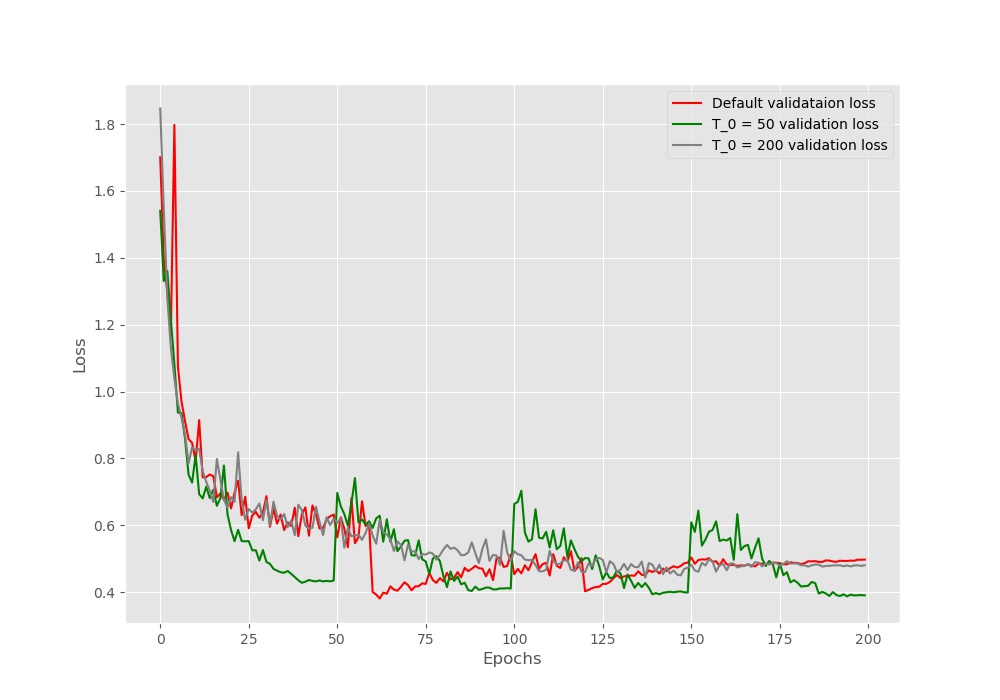

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From www.youtube.com

CS 320 Apr262021 (Part 3) pytorch gradients YouTube

From www.researchgate.net

PyTorch implementation example for Smooth Gradient

From www.bilibili.com

PyTorch Tutorial 03 Gradient Calcul... 哔哩哔哩

From www.youtube.com

PyTorch Autograd Explained In-depth Tutorial YouTube

From github.com

GitHub graphcore/GradientPytorch

From zhuanlan.zhihu.com

Parameters in PyTorch, Zhihu (知乎)

From www.youtube.com

PyTorch Lecture 03 Gradient Descent YouTube

From forum.pyro.ai

Pyro/Pytorch gradient norm visualization Misc. Pyro Discussion Forum

From www.tutoraspire.com

Gradient with PyTorch Online Tutorials Library List

From 9to5answer.com

[Solved] How to do gradient clipping in pytorch? 9to5Answer

From www.youtube.com

PyTorch Tutorial for Beginners Basics & Gradient Descent Tensors

From pytorch.org

How Computational Graphs are Constructed in PyTorch PyTorch

From seunghan96.github.io

(PyG) Pytorch Geometric Review 1 intro AAA (All About AI)

From www.youtube.com

PyTorch Basics and Gradient Descent Deep Learning with PyTorch Zero

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From wandb.ai

Monitor Your PyTorch Models With Five Extra Lines of Code on Weights

From github.com

GitHub Finspire13/pytorchpolicygradientexample A toy example of

From github.com

IntegratedGradientPytorch/ig.py at main · shyhyawJou/Integrated

From towardsdatascience.com

Introducing PyTorch Forecasting by Jan Beitner Towards Data Science

From www.tutoraspire.com

PyTorch Gradient Descent Online Tutorials Library List

From discuss.pytorch.org

Check gradient flow in network PyTorch Forums

From www.youtube.com

CS 320 Apr 17 (Part 4) Gradients in PyTorch YouTube

From velog.io

PyTorch Tutorial 03. Gradient Descent & AutoGrad

From stacktuts.com

How to do gradient clipping in pytorch? StackTuts

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From github.com

GitHub gradientai/PyTorch Gradient Notebooks default PyTorch repository

From www.youtube.com

Gradient with respect to input in PyTorch (FGSM attack + Integrated

From www.youtube.com

PyTorch Tutorial 03 Gradient Calculation With Autograd YouTube

From www.databricks.com

Seven Reasons to Learn PyTorch on Databricks The Databricks Blog

From blog.paperspace.com

PyTorch Basics Understanding Autograd and Computation Graphs

From hexuanweng.github.io

PyTorch Tutorial Gradient Descent Hex.*

From www.youtube.com

6 Pytorch Tutorial Gradient YouTube