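'GradientTape' Object Is Not Callable

`tf.GradientTape` is a TensorFlow API for recording operations to enable automatic differentiation. It is meant to be used as a context manager. Operations executed inside the `with tf.GradientTape() as tape:` block are recorded, and gradients are then requested through the tape's `gradient()` method. The tape instance is not a function, so calling it directly raises `TypeError: 'GradientTape' object is not callable`. Examples include `tape(loss, variables)`, or `with tape():` on an already created tape.

Trainable variables are watched automatically, and `tf.GradientTape` provides hooks that give the user control over what is or is not watched. To record gradients with respect to a tensor that is not a trainable variable, call `tape.watch()` on it inside the block.

By default, the resources held by a tape are released as soon as `gradient()` is called. Creating the tape with `persistent=True` allows multiple calls to the `gradient()` method, because resources are then released only when the tape object is garbage collected. Leaving the `with` block does not destroy the tape either. The `with` statement simply causes `__enter__` and `__exit__` to be executed on that object, which may or may not invalidate it. A tape remains usable after its block, which is why `gradient()` is normally called outside it.

Related problems reported alongside this error:

- With a custom loss function, passing an already computed loss tensor to a Keras optimizer's `minimize()` raises a `ValueError` stating that a tape is required when a tensor loss is passed. One reported traceback points to line 266, `tape = tf.GradientTape()`, with a `ValueError:` whose message is not preserved.
- Calling `tape.watch()` on a NumPy input converted to a tensor can still leave `tape.gradient(model_output, model_input)` returning `None`, even though the reporter notes that this behaviour works as expected using …; the report links standalone reproduction code. `gradient()` returns `None` whenever the target was not computed from the watched source, for instance when the model is called on the original NumPy array instead of on the watched tensor.
- Another report hits the error `'KerasTensor' object has no attribute '_id'`. A likely cause is symbolic Keras tensors, such as `model.input`, reaching the tape where concrete (eager) tensors are expected.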
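A minimal sketch of correct versus incorrect use, assuming TensorFlow 2.x with eager execution; `x` is an illustrative variable, not something from the original report. The tape is created with parentheses, used as a context manager, and queried with `gradient()`. Calling the tape object itself is what triggers the `TypeError`.

```python
import tensorflow as tf

x = tf.Variable(3.0)  # illustrative trainable variable

# Create the tape with parentheses and use it as a context manager.
with tf.GradientTape() as tape:
    y = x * x  # recorded automatically because x is trainable

# Correct: request gradients through the gradient() method.
dy_dx = tape.gradient(y, x)  # d(x^2)/dx at x = 3 is 6.0
print(dy_dx.numpy())

# Incorrect: GradientTape defines no __call__, so this raises TypeError.
message = ""
try:
    tape(y, x)
except TypeError as err:
    message = str(err)
print(message)
```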
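The watch and persistence rules can be sketched together, again assuming TensorFlow 2.x eager execution; this follows the pattern used in the TensorFlow autodiff guide. Because `x` is a constant rather than a trainable variable, it has to be watched explicitly, and `persistent=True` permits two `gradient()` calls on the same tape.

```python
import tensorflow as tf

x = tf.constant(3.0)  # a plain tensor, not a variable

# persistent=True keeps the recorded operations after the first gradient() call.
with tf.GradientTape(persistent=True) as tape:
    tape.watch(x)  # constants are not watched automatically
    y = x * x      # y = x^2
    z = y * y      # z = x^4

dz_dx = tape.gradient(z, x)  # 4 * x^3 = 108.0
dy_dx = tape.gradient(y, x)  # 2 * x   = 6.0 (second call on the same tape)
print(dz_dx.numpy(), dy_dx.numpy())

# Drop the reference so the tape's resources can be garbage collected.
del tape
```

Without `persistent=True`, the second `gradient()` call would fail because the tape's resources are released after the first call.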
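The point about `with` is plain Python and can be checked without TensorFlow. `Resource` below is a hypothetical class used only for illustration. The block runs `__enter__` and `__exit__`, and the name bound by `as` still refers to a live object afterwards. The same holds for a tape, which is why `tape.gradient()` works after the block.

```python
class Resource:
    """Hypothetical context manager that logs what the with statement calls."""

    def __init__(self):
        self.events = []

    def __enter__(self):
        self.events.append("enter")
        return self  # this is what `as res` binds

    def __exit__(self, exc_type, exc, tb):
        self.events.append("exit")
        return False  # do not suppress exceptions


with Resource() as res:
    res.events.append("body")

# The object outlives the block; only __enter__ and __exit__ were run on it.
print(res.events)
```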
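For the custom-loss case, the error message itself implies the options: `minimize()` needs either a callable loss or a `tape` when given a tensor. A common alternative is to record the forward pass under a tape yourself and call `apply_gradients()`. The sketch below assumes TensorFlow 2.x with `tf.keras`; `custom_loss`, the tiny model, and the data are hypothetical stand-ins, not the reporter's code.

```python
import numpy as np
import tensorflow as tf


def custom_loss(y_true, y_pred):
    """Hypothetical custom loss: mean squared error written by hand."""
    return tf.reduce_mean(tf.square(y_true - y_pred))


model = tf.keras.Sequential([tf.keras.Input(shape=(2,)), tf.keras.layers.Dense(1)])
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

x = np.array([[0.5, -0.5], [1.0, 0.0]], dtype="float32")
y = np.array([[1.0], [0.0]], dtype="float32")


def train_step(x, y):
    # Record the forward pass so the loss tensor can be differentiated.
    with tf.GradientTape() as tape:
        loss = custom_loss(y, model(x))
    grads = tape.gradient(loss, model.trainable_variables)
    # Apply the gradients directly instead of passing a tensor loss to minimize().
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return float(loss)


losses = [train_step(x, y) for _ in range(20)]
print(losses[0], losses[-1])
```

The learning rate is kept small relative to the input scale so plain gradient descent on this convex loss decreases it steadily.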
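The `None` gradient on a watched NumPy input usually means the watched tensor is not the one the model actually consumed. A minimal sketch, assuming TensorFlow 2.x with `tf.keras`. `model` and `x_np` are hypothetical, while `model_input` and `model_output` mirror the names in the report.

```python
import numpy as np
import tensorflow as tf

# Hypothetical stand-in model: one Dense layer on 3 features.
model = tf.keras.Sequential([tf.keras.Input(shape=(3,)), tf.keras.layers.Dense(1)])

x_np = np.random.rand(4, 3).astype("float32")
model_input = tf.convert_to_tensor(x_np)

# Pitfall: the model converts x_np itself, so the watched tensor is never used.
with tf.GradientTape() as tape:
    tape.watch(model_input)
    model_output = model(x_np)
grad_bad = tape.gradient(model_output, model_input)
print(grad_bad)  # None: model_output does not depend on model_input

# Fix: pass the watched tensor itself to the model.
with tf.GradientTape() as tape:
    tape.watch(model_input)
    model_output = model(model_input)
grad_ok = tape.gradient(model_output, model_input)
print(grad_ok.shape)
```

The same rule plausibly covers the `'KerasTensor' object has no attribute '_id'` report. Watch and feed concrete tensors such as `model_input` above, not symbolic ones like `model.input`.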

from blog.csdn.net


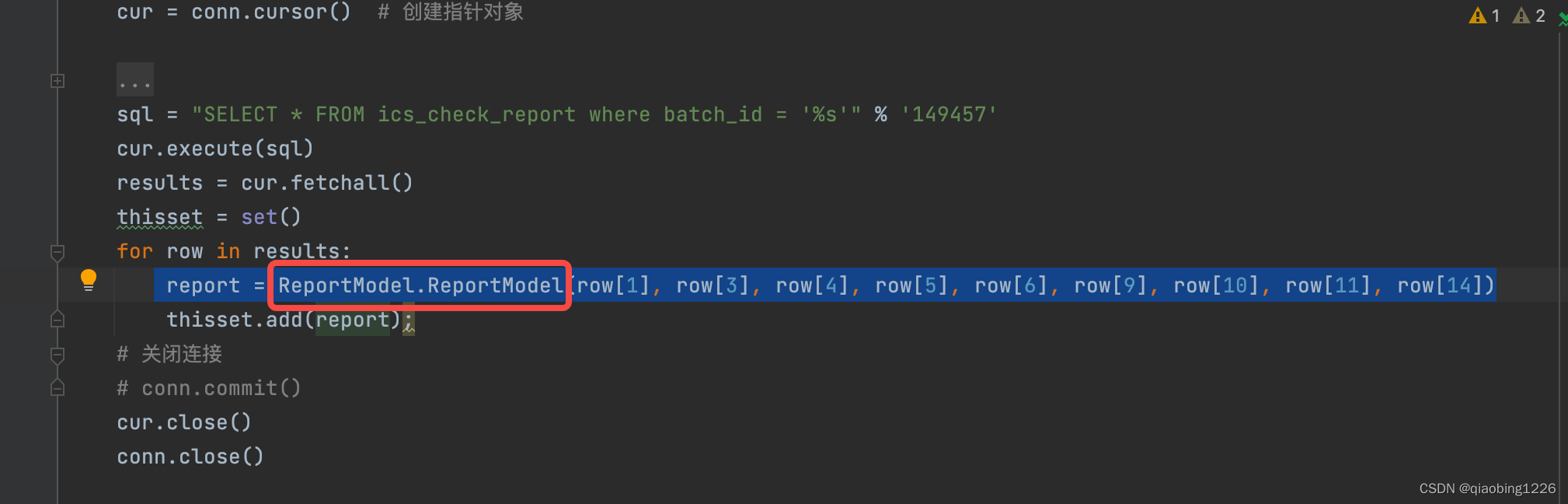

TypeError: 'module' object is not callable: resolving the error
