PyTorch Compute Jacobian

PyTorch offers two main ways to compute a Jacobian. The jacobian() function in torch.autograd.functional calculates all partial derivatives of a given function with respect to its inputs; the official documentation lists its parameters, return type, and examples. Alternatively, you can build the Jacobian matrix of a tensor yourself with torch.autograd.grad and a for loop. By default, jacobian() invokes autograd.grad once per row of the Jacobian; if its vectorize flag is True, it instead makes a single batched autograd.grad call that relies on vmap. For second-order derivatives there are convenience APIs such as torch.autograd.functional.hessian() and torch.func.hessian(): a Hessian is the Jacobian of the Jacobian, that is, the partial derivative of the partial derivative. The links below collect tutorials, forum threads, and GitHub issues on these topics.
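
A minimal sketch of torch.autograd.functional.jacobian() (with and without vectorize=True) and torch.autograd.functional.hessian(), assuming a recent PyTorch release. The functions `f` and `g` are illustrative toys: the Jacobian of an elementwise square is diagonal with entries 2x, and the Hessian of sum(x**3) is diagonal with entries 6x, so both results are easy to check by hand.

```python
import torch
from torch.autograd.functional import jacobian, hessian

def f(x):
    # Toy function: elementwise square, so df_i/dx_j = 2 * x_i when i == j, else 0
    return x ** 2

x = torch.tensor([1.0, 2.0, 3.0])

# Default: one autograd.grad call per row of the Jacobian
J = jacobian(f, x)
# vectorize=True: a single batched autograd.grad call (documented as experimental)
J_vec = jacobian(f, x, vectorize=True)
print(J)  # diagonal matrix with entries 2 * x

def g(x):
    # Toy scalar function: sum of cubes, so the Hessian is diag(6 * x)
    return (x ** 3).sum()

# Hessian = Jacobian of the gradient
H = hessian(g, x)
print(H)
```

Both jacobian() calls should return the same matrix; the vectorized path is intended to be faster when the output has many elements.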

from bestofai.com

Learn how to compute the jacobian of a given function using pytorch's autograd module. Learn how to use the jacobian() function from torch.autograd.functional to calculate the partial derivatives of a given function with. A discussion thread on how to use pytorch autograd module to calculate the jacobian matrix of a function with tensor input and output. We offer a convenience api to compute hessians: Learn how to compute the jacobian matrix of a tensor using torch.autograd.grad function and a for loop. Hessians are the jacobian of the jacobian (or the partial derivative of the partial. I’m trying to compute jacobian (and its inverse) of the output of an intermediate layer (block1) with respect to the input to the first layer. See parameters, return type, and examples for. When computing the jacobian, usually we invoke autograd.grad once per row of the jacobian. If this flag is true , we use the vmap.

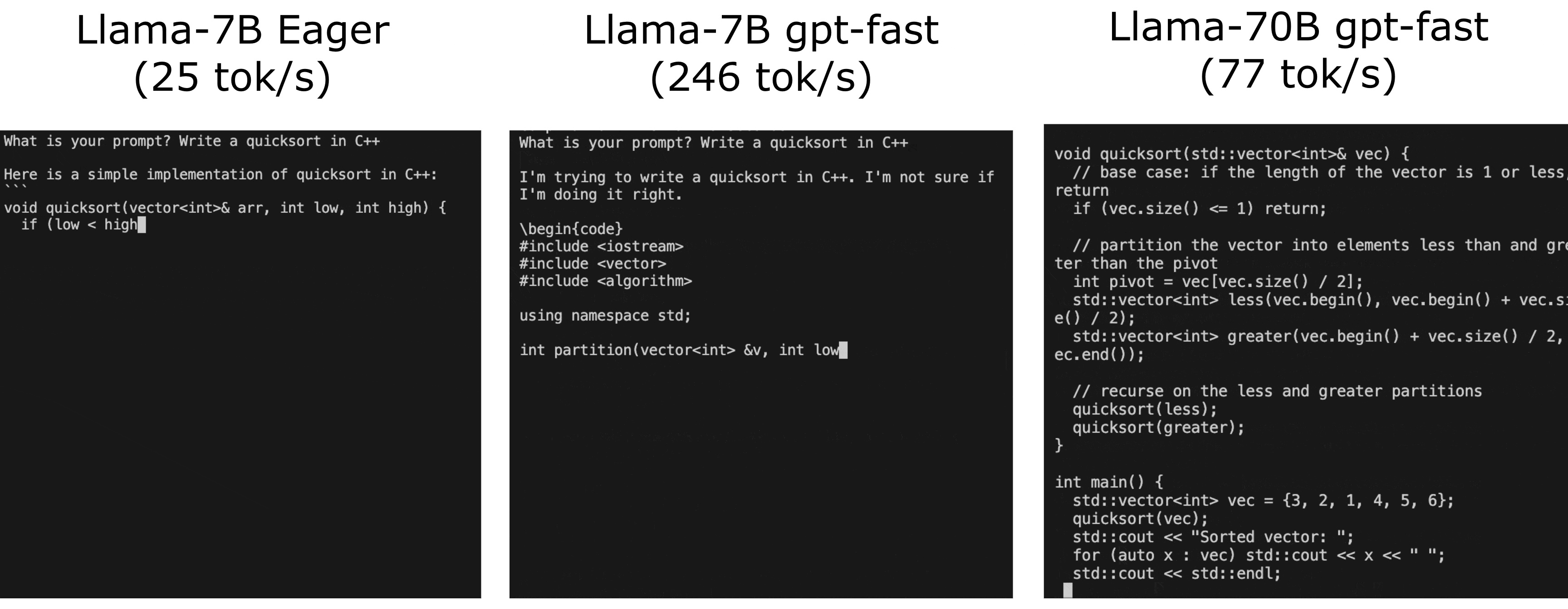

Accelerating Generative AI with PyTorch II GPT, Fast

Pytorch Compute Jacobian Learn how to compute the jacobian matrix of a tensor using torch.autograd.grad function and a for loop. Learn how to use the jacobian() function from torch.autograd.functional to calculate the partial derivatives of a given function with. I’m trying to compute jacobian (and its inverse) of the output of an intermediate layer (block1) with respect to the input to the first layer. We offer a convenience api to compute hessians: Learn how to compute the jacobian of a given function using pytorch's autograd module. Learn how to compute the jacobian matrix of a tensor using torch.autograd.grad function and a for loop. Hessians are the jacobian of the jacobian (or the partial derivative of the partial. If this flag is true , we use the vmap. When computing the jacobian, usually we invoke autograd.grad once per row of the jacobian. See parameters, return type, and examples for. A discussion thread on how to use pytorch autograd module to calculate the jacobian matrix of a function with tensor input and output.

From discuss.pytorch.org

Doubt regarding shape after Jacobian (autograd forum category).
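
The tensor that jacobian() returns has the output shape followed by the input shape. The sketch below, using a hypothetical nn.Linear(3, 4) applied to a batch of two samples, shows why a batched call yields a 4-D result and how the per-sample blocks can be pulled out of it.

```python
import torch
from torch.autograd.functional import jacobian

torch.manual_seed(0)
layer = torch.nn.Linear(3, 4)  # hypothetical model: 3 inputs -> 4 outputs
x = torch.randn(2, 3)          # batch of 2 samples

J = jacobian(layer, x)
# Shape is output.shape + input.shape = (2, 4) + (2, 3)
print(J.shape)  # torch.Size([2, 4, 2, 3])

# Samples are processed independently, so the blocks J[i, :, j, :] with i != j are zero.
# The per-sample Jacobians sit on the "diagonal" of the two batch dimensions.
per_sample = torch.stack([J[i, :, i, :] for i in range(x.shape[0])])
print(per_sample.shape)  # torch.Size([2, 4, 3])

# For a linear layer, each per-sample Jacobian equals the weight matrix.
```

For large batches, building the full cross-sample tensor is wasteful; composing torch.func.vmap with torch.func.jacrev computes the per-sample Jacobians directly.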

From www.youtube.com

Automatically Compute Jacobian matrices in Python and Generate Python …
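
Assuming PyTorch 2.0 or later, where the former functorch transforms live in torch.func, the same Jacobian can be computed in reverse mode (jacrev) or forward mode (jacfwd). The test function `f` is an illustrative toy, and its analytic Jacobian is written out for comparison.

```python
import math
import torch
from torch.func import jacrev, jacfwd

def f(x):
    # Toy function R^3 -> R^2
    return torch.stack([x[0] * x[1], torch.sin(x[2])])

x = torch.tensor([1.0, 2.0, 0.5])

J_rev = jacrev(f)(x)  # reverse mode: one vector-Jacobian product per output row
J_fwd = jacfwd(f)(x)  # forward mode: one Jacobian-vector product per input column

# Analytic Jacobian for comparison
a, b, c = x.tolist()
J_ref = torch.tensor([
    [b, a, 0.0],
    [0.0, 0.0, math.cos(c)],
])
```

Reverse mode is generally the better fit when a function has fewer outputs than inputs, forward mode when it has more outputs than inputs.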

From discuss.pytorch.org

Compute Jacobian matrix of model output layer versus input layer. The question asks how to compute the Jacobian (and its inverse) of the output of an intermediate layer (block1) with respect to the input to the first layer.
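
A sketch of the block1 case, assuming a hypothetical `Net` whose `block1` keeps the feature size equal to the input size (4), so its Jacobian is square and can be inverted. The key step is passing the submodule itself, not the whole model, to jacobian(). Double precision is used only to keep the inverse check numerically tight.

```python
import torch
import torch.nn as nn
from torch.autograd.functional import jacobian

class Net(nn.Module):
    """Hypothetical model; block1 is the intermediate layer of interest."""

    def __init__(self):
        super().__init__()
        self.block1 = nn.Sequential(nn.Linear(4, 4), nn.Tanh())  # keeps size 4
        self.head = nn.Linear(4, 2)

    def forward(self, x):
        return self.head(self.block1(x))

torch.manual_seed(0)
model = Net().double()                   # double precision for a tight inverse check
x = torch.randn(4, dtype=torch.float64)  # one input sample

# Pass the submodule, not the whole model: d block1(x) / d x, shape (4, 4)
J = jacobian(model.block1, x)
# The inverse exists only because J is square and (almost surely) non-singular here
J_inv = torch.linalg.inv(J)
```

If block1 changes the feature size, its Jacobian is rectangular and has no inverse; torch.linalg.pinv gives a pseudo-inverse instead. For a block deeper in the network, pass a function that runs every layer up to and including that block.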

From github.com

Jacobian should be Jacobian transpose (at least according to Wikipedia …)
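
PyTorch's jacobian() uses the standard layout J[i, j] = ∂y_i/∂x_j, with rows indexing outputs. What backward() computes is different: the vector-Jacobian product v^T J, which written as a column is J^T v. That transpose is a plausible source of confusion about orientation; the sketch only illustrates the relationship with a toy function `f` and does not reproduce the issue itself.

```python
import torch
from torch.autograd.functional import jacobian

def f(x):
    # Toy function R^3 -> R^2
    return torch.stack([x[0] * x[1], x[1] + x[2] ** 2])

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
v = torch.tensor([0.5, -1.0])  # arbitrary vector in output space

y = f(x)
y.backward(v)  # x.grad now holds v^T J, i.e. J^T v as a column

J = jacobian(f, x.detach())  # explicit Jacobian, shape (2, 3): rows = outputs
print(x.grad)   # values 1.0, -0.5, -6.0
print(J.T @ v)  # same values
```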

From zhuanlan.zhihu.com

Deriving backpropagation gradients by hand (continued): the Jacobian matrix.
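
Deriving backpropagation by hand comes down to the chain rule in matrix form: the Jacobian of a composition h = g ∘ f is the product J_g(f(x)) · J_f(x). A sketch that checks this numerically, with illustrative toy functions `f` (linear map plus tanh) and `g` and random weights `A`:

```python
import torch
from torch.autograd.functional import jacobian

torch.manual_seed(0)
A = torch.randn(4, 3, dtype=torch.float64)  # illustrative weights

def f(x):
    # R^3 -> R^4: linear map followed by tanh
    return torch.tanh(A @ x)

def g(u):
    # R^4 -> R^2: toy nonlinear map
    return torch.stack([u.sum() ** 2, (u * u).prod()])

x = torch.randn(3, dtype=torch.float64)

J_f = jacobian(f, x)                  # (4, 3), evaluated at x
J_g = jacobian(g, f(x))               # (2, 4), evaluated at f(x)
J_h = jacobian(lambda t: g(f(t)), x)  # (2, 3), Jacobian of h(x) = g(f(x))

# Chain rule in matrix form: J_h = J_g @ J_f
print(torch.allclose(J_h, J_g @ J_f))
```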

From www.youtube.com

Jacobian in PyTorch.
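
The torch.autograd.grad plus for-loop approach can be sketched as follows: each output element is a scalar, and the gradient of that scalar with respect to x is one row of the Jacobian. `jacobian_loop` is a hypothetical helper for illustration, not a PyTorch API; retain_graph=True keeps the graph alive across the repeated backward passes.

```python
import torch
from torch.autograd.functional import jacobian

def jacobian_loop(f, x):
    """Build the Jacobian of f at x one row at a time.

    Hypothetical helper for illustration, not a PyTorch API.
    Returns a (num_outputs, num_inputs) matrix with outputs and inputs flattened.
    """
    x = x.detach().requires_grad_(True)
    y = f(x).reshape(-1)  # flatten so that each y[i] is a scalar
    rows = []
    for i in range(y.numel()):
        # The gradient of the scalar y[i] w.r.t. x is row i of the Jacobian;
        # retain_graph=True keeps the graph for the next iteration.
        (grad_i,) = torch.autograd.grad(y[i], x, retain_graph=True)
        rows.append(grad_i.reshape(-1))
    return torch.stack(rows)

def f(x):
    # Toy function R^2 -> R^2
    return torch.stack([x[0] ** 2 * x[1], 5 * x[0] + torch.sin(x[1])])

x = torch.tensor([1.0, 2.0])
J = jacobian_loop(f, x)
J_ref = jacobian(f, x)  # built-in version for comparison
```

This costs one backward pass per output element, which is exactly the per-row loop that jacobian() runs by default.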

From zhuanlan.zhihu.com

Vector-Jacobian product explained: PyTorch autograd.
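
A vector-Jacobian product v^T J (what backward computes) and a Jacobian-vector product J u can both be obtained without materializing J. A sketch with torch.autograd.functional.vjp and jvp on an illustrative toy function, compared against the explicit Jacobian:

```python
import torch
from torch.autograd.functional import jacobian, vjp, jvp

def f(x):
    # Toy function R^3 -> R^2
    return torch.stack([x[0] * x[2], x[1] ** 3])

x = torch.tensor([1.0, 2.0, 3.0])
v = torch.tensor([1.0, -2.0])      # output-space vector for the VJP
u = torch.tensor([0.5, 0.0, 1.0])  # input-space vector for the JVP

J = jacobian(f, x)  # full (2, 3) Jacobian, for comparison only

_, vJ = vjp(f, x, v)  # v^T J without building J
_, Ju = jvp(f, x, u)  # J u without building J
```

torch.autograd.functional.jvp is documented as using the double-backward trick, so for performance-sensitive code torch.func.jvp, which uses true forward-mode AD, may be preferable.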

From blog.csdn.net

PyTorch, TensorFlow Autograd/AutoDiff in a nutshell: Jacobian, Gradient.
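
For a scalar-valued function, the Jacobian has a single row, and that row is the gradient. A small sketch with an illustrative toy function `loss` shows that jacobian() and torch.autograd.grad then agree; jacobian() returns shape () + input shape, i.e. the same shape as the input.

```python
import torch
from torch.autograd.functional import jacobian

def loss(w):
    # Toy scalar function R^3 -> R
    return (w ** 2).sum() + w[0] * w[1]

w = torch.tensor([1.0, -2.0, 0.5], requires_grad=True)

(grad,) = torch.autograd.grad(loss(w), w)  # gradient, shape (3,)
J = jacobian(loss, w.detach())             # output shape () + input shape (3,) = (3,)
```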

From github.com

pytorchJacobian/jacobian.py at master · ChenAoPhys/pytorchJacobian
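
A common trick for getting the whole Jacobian from a single backward pass, which is not necessarily the approach that repository takes: replicate the input once per output dimension, run the batch through the function, and backpropagate the identity matrix so that copy i receives the gradient of output i. It assumes the function treats batch rows independently. `jacobian_one_pass` is a hypothetical helper.

```python
import torch
from torch.autograd.functional import jacobian

def jacobian_one_pass(f, x, out_dim):
    """Jacobian of f at a 1-D input x using a single backward pass.

    Hypothetical helper (not a PyTorch API). Assumes f maps a batch of shape
    (n, in_dim) to (n, out_dim) and treats each row independently.
    """
    # One copy of x per output dimension: shape (out_dim, in_dim)
    xs = x.detach().repeat(out_dim, 1).requires_grad_(True)
    ys = f(xs)  # shape (out_dim, out_dim); row i is f applied to copy i
    # Backpropagating the identity sends d f_i / d x into row i of xs.grad
    # (this also accumulates gradients into any model parameters).
    ys.backward(torch.eye(out_dim, dtype=ys.dtype))
    return xs.grad

torch.manual_seed(0)
net = torch.nn.Sequential(torch.nn.Linear(3, 2), torch.nn.Tanh())  # toy model
x = torch.randn(3)

J = jacobian_one_pass(net, x, out_dim=2)
J_ref = jacobian(net, x)  # built-in version for comparison
```

Memory grows with the output dimension, because the input is copied once per output.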

From www.youtube.com

How to Compute the Jacobian of a Robot Manipulator: A Numerical Example.
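
In robotics, the manipulator Jacobian maps joint velocities to end-effector velocity. A sketch for a planar two-link arm, with illustrative link lengths `L1` and `L2`, comparing autograd against the textbook analytic Jacobian:

```python
import torch
from torch.autograd.functional import jacobian

L1, L2 = 1.0, 0.5  # illustrative link lengths

def fk(q):
    # Forward kinematics: end-effector (x, y) for joint angles q = (q1, q2)
    q1, q2 = q[0], q[1]
    return torch.stack([
        L1 * torch.cos(q1) + L2 * torch.cos(q1 + q2),
        L1 * torch.sin(q1) + L2 * torch.sin(q1 + q2),
    ])

q = torch.tensor([0.3, 0.8], dtype=torch.float64)
J = jacobian(fk, q)  # (2, 2): rows = (x, y), columns = (q1, q2)

# Analytic Jacobian for comparison
s1, c1 = torch.sin(q[0]), torch.cos(q[0])
s12, c12 = torch.sin(q[0] + q[1]), torch.cos(q[0] + q[1])
J_ref = torch.stack([
    torch.stack([-L1 * s1 - L2 * s12, -L2 * s12]),
    torch.stack([L1 * c1 + L2 * c12, L2 * c12]),
])
```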

From www.pythonheidong.com

PyTorch gradcheck() error: RuntimeError: Jacobian mismatch for output 0.
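
torch.autograd.gradcheck compares the analytical Jacobian produced by your backward with a finite-difference estimate and reports a Jacobian mismatch when they disagree. Two frequent causes are a wrong backward formula and single-precision inputs, since gradcheck is designed for double precision. A sketch with a hypothetical custom op `Cube` and a deliberately broken `BadCube`:

```python
import torch
from torch.autograd import gradcheck

class Cube(torch.autograd.Function):
    """Hypothetical custom op y = x**3 with a correct hand-written backward."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        return x ** 3

    @staticmethod
    def backward(ctx, grad_out):
        (x,) = ctx.saved_tensors
        return grad_out * 3 * x ** 2  # dy/dx = 3x^2


class BadCube(torch.autograd.Function):
    """Same forward as Cube, but with a deliberately wrong backward."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        return x ** 3

    @staticmethod
    def backward(ctx, grad_out):
        (x,) = ctx.saved_tensors
        return grad_out * 2 * x ** 2  # wrong: should be 3x^2


torch.manual_seed(0)
# gradcheck is meant for double-precision inputs that require grad
x = torch.randn(5, dtype=torch.float64, requires_grad=True)

ok = gradcheck(Cube.apply, (x,))  # passes: analytical and numerical Jacobians agree
# raise_exception=False returns False instead of raising the mismatch error
bad = gradcheck(BadCube.apply, (x,), raise_exception=False)
```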

From github.com

Jacobians computed by autograd.functional.jacobian with compute_graph …
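
By default jacobian() returns a result detached from the autograd graph. Passing create_graph=True keeps it differentiable, which is needed when the Jacobian itself feeds a loss, for example a Jacobian penalty. The sketch differentiates the trace of the Jacobian of an illustrative elementwise function; it does not reproduce the behavior reported in the issue.

```python
import torch
from torch.autograd.functional import jacobian

def f(x):
    # Toy elementwise function, so the Jacobian is diagonal
    return torch.sin(x) * x

x = torch.tensor([0.5, 1.0], requires_grad=True)

J_plain = jacobian(f, x)                     # default: detached from the graph
J_graph = jacobian(f, x, create_graph=True)  # stays connected to x

# Backpropagate through a scalar function of the Jacobian (here its trace).
# trace(J) = sum_i f'(x_i), so x.grad should equal f''(x) = 2cos(x) - x sin(x).
penalty = J_graph.trace()
penalty.backward()
```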

From stlplaces.com

How to Calculate Gradients on a Tensor in PyTorch in 2024?

From www.yawin.in

Jacobian matrix of partial derivatives.
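
For reference, the Jacobian of f: ℝⁿ → ℝᵐ collects all first-order partial derivatives, one row per output, which matches the layout torch.autograd.functional.jacobian returns (output dimensions first). A small worked example follows the definition.

```latex
J_f(\mathbf{x}) =
\begin{bmatrix}
\dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n}
\end{bmatrix},
\qquad
(J_f)_{ij} = \frac{\partial f_i}{\partial x_j}.
\\[1ex]
\text{Example: } f(x_1, x_2) = \begin{bmatrix} x_1^2 x_2 \\ 5x_1 + \sin x_2 \end{bmatrix}
\;\Longrightarrow\;
J_f = \begin{bmatrix} 2x_1 x_2 & x_1^2 \\ 5 & \cos x_2 \end{bmatrix}.
```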

From github.com

How to compute the real Jacobian matrix using autograd tool · Issue …

From velog.io

[PyTorch] Autograd02 With Jacobian.

From stackoverflow.com

python: log determinant jacobian in Normalizing Flow training with …
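
Normalizing flows need log|det J| for each transformation. For small dimensions it can be computed from the dense Jacobian with torch.linalg.slogdet, which makes a handy sanity check; in practice flows are designed so that the log-determinant has a closed form. A sketch with an illustrative elementwise affine flow z = exp(s)·x + t, whose log-determinant is sum(s):

```python
import torch
from torch.autograd.functional import jacobian

torch.manual_seed(0)
dim = 4
s = torch.randn(dim, dtype=torch.float64)  # illustrative log-scale parameters
t = torch.randn(dim, dtype=torch.float64)  # illustrative shift parameters

def flow(x):
    # Elementwise affine flow z = exp(s) * x + t, so J = diag(exp(s))
    return torch.exp(s) * x + t

x = torch.randn(dim, dtype=torch.float64)

J = jacobian(flow, x)                      # dense (dim, dim) Jacobian: fine only for small dim
sign, logabsdet = torch.linalg.slogdet(J)  # numerically stable log|det J|

closed_form = s.sum()  # log|det diag(exp(s))| = sum(s), no Jacobian needed
```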

From math.stackexchange.com

multivariable calculus: Computing the Jacobian for the change of …
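
The change-of-variables factor is the absolute value of the Jacobian determinant. A quick numeric check for polar coordinates (x, y) = (r cos θ, r sin θ), where the determinant should equal r:

```python
import math
import torch
from torch.autograd.functional import jacobian

def polar_to_cartesian(p):
    # p = (r, theta) -> (x, y) = (r cos theta, r sin theta)
    r, theta = p[0], p[1]
    return torch.stack([r * torch.cos(theta), r * torch.sin(theta)])

p = torch.tensor([2.0, math.pi / 6], dtype=torch.float64)
J = jacobian(polar_to_cartesian, p)
det = torch.linalg.det(J)  # should equal r, hence dx dy = r dr dtheta
```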

From github.com

Speed up Jacobian in PyTorch · Issue #1000 · pytorch/functorch
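
When per-sample Jacobians are needed for a whole batch, composing torch.func.vmap with torch.func.jacrev (PyTorch 2.0 or later) vectorizes the computation instead of looping in Python. A sketch with an illustrative toy function `f` and random weights `W`, checked against a loop of jacobian() calls; actual speedups depend on the model and hardware.

```python
import torch
from torch.autograd.functional import jacobian
from torch.func import jacrev, vmap

torch.manual_seed(0)
W = torch.randn(5, 3)  # illustrative weights

def f(x):
    # Per-sample toy function R^3 -> R^5
    return torch.tanh(W @ x)

xs = torch.randn(8, 3)  # batch of 8 inputs

# vmap maps jacrev(f) over the batch dimension in one vectorized call
J_batched = vmap(jacrev(f))(xs)  # shape (8, 5, 3)

# Reference: Python loop with one jacobian() call per sample
J_loop = torch.stack([jacobian(f, x) for x in xs])  # shape (8, 5, 3)
```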

From www.youtube.com

How to find Jacobian Matrix? Solved Examples, Robotics 101.