Filter Function Spark. This article shows how to filter a DataFrame on one or multiple columns using the filter() and where() functions in PySpark.

Spark's filter() or where() function filters the rows of a DataFrame or Dataset based on one or more given conditions, so you can selectively retain or discard rows. On a DataFrame the two are interchangeable: where() is an alias for filter(), and both accept a boolean Column expression or a SQL expression string. PySpark's filter() does not modify the existing DataFrame; it creates a new DataFrame that contains only the rows satisfying the given condition. The PySpark API reference describes the method as taking a ColumnOrName argument and returning a DataFrame: it "filters rows using the given condition."

RDDs offer filter() only. It is called as rdd.filter(func), where func is a function that takes an element as input and returns a boolean value; elements for which func returns true are kept.

If your conditions come in list form, e.g. filter_values_list = ['value1', 'value2'], and you are filtering on a single column, you can express the filter as one membership condition on that column instead of writing one comparison per value.
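To make the predicate idea concrete, here is a minimal sketch in plain Python that models the semantics described above without needing a Spark session. The sample rows, the column names (name, state, age), and the helper keep_rows are hypothetical and are not part of any Spark API. The PySpark calls shown in the comments are the usual DataFrame equivalents, assuming a DataFrame df with those columns.

```python
# Plain-Python model of filter semantics; runs without Spark.
# Rows, column names, and keep_rows() are hypothetical illustration only.

rows = [
    {"name": "Ann", "state": "OH", "age": 34},
    {"name": "Bob", "state": "NY", "age": 25},
    {"name": "Cy", "state": "OH", "age": 19},
    {"name": "Dee", "state": "CA", "age": 41},
]


def keep_rows(data, predicate):
    """Return a new list of the elements for which predicate(element) is true.

    This mirrors rdd.filter(func): func takes one element and returns a
    boolean. The input list is left unchanged, in the same way that
    filter() returns a new DataFrame or RDD instead of modifying the old one.
    """
    return [row for row in data if predicate(row)]


# Several conditions on different columns, combined with "and".
# PySpark equivalent (Column conditions joined with &, each in parentheses):
#   df.filter((df.state == "OH") & (df.age > 30))
#   df.where((df.state == "OH") & (df.age > 30))   # where() is an alias
ohio_over_30 = keep_rows(rows, lambda r: r["state"] == "OH" and r["age"] > 30)

# Conditions supplied as a list of allowed values for a single column.
# PySpark equivalent: df.filter(df.state.isin(filter_values_list))
filter_values_list = ["OH", "CA"]
in_list = keep_rows(rows, lambda r: r["state"] in filter_values_list)

print([r["name"] for r in ohio_over_30])
print([r["name"] for r in in_list])
```

In real PySpark code the conditions are Column expressions, so they are combined with & and | (not Python's and / or), and each comparison needs its own parentheses.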

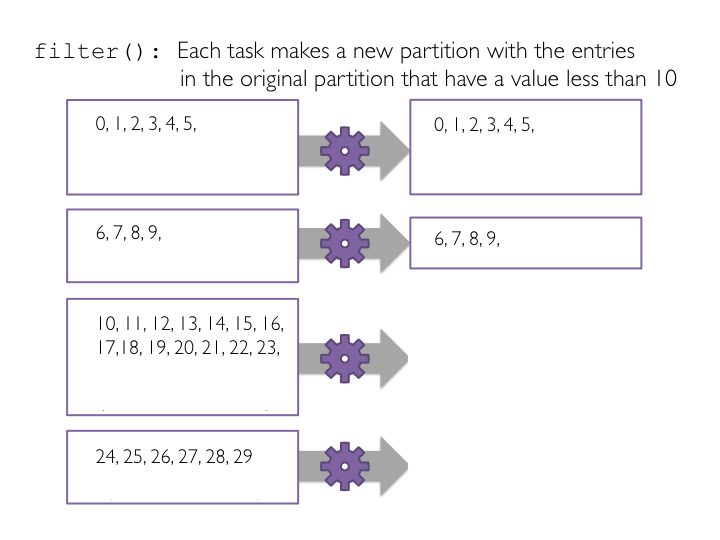

From databricks-prod-cloudfront.cloud.databricks.com

Module 2 Spark Tutorial Lab Databricks

From www.youtube.com

Spark AR tutorial Create filter with Particle System (Emitter Frezee

From sparkbyexamples.com

How to Use NOT IN Filter in Pandas Spark By {Examples}

From www.slideserve.com

PPT Active Filters PowerPoint Presentation, free download ID7021104

From howtoexcel.net

How to Use the Filter Function in Excel

From www.researchgate.net

The top figure shows the filter functions contributing to the

From sparkbyexamples.com

Pandas Series filter() Function Spark By {Examples}

From sparkbyexamples.com

Spark Window Functions with Examples Spark By {Examples}

From exceljet.net

FILTER function with two criteria (video) Exceljet

From sparkbyexamples.com

Python filter() Function Spark By {Examples}

From www.learntospark.com

How to Filter Data in Apache Spark Spark Dataframe Filter using PySpark

From www.simplilearn.com

Spark Parallelize The Essential Element of Spark

From www.exceldemy.com

How to Use FILTER Function in Excel (9 Easy Examples) ExcelDemy

From medium.com

Spark 3.0 New DataFrame functions - Part 2 CSV Pushdown Filter, max_by

From mindmajix.com

What is Spark SQL Spark SQL Tutorial

From www.youtube.com

RL Low pass filter Transfer function and frequency response (magnitude

From analyticshut.com

Filtering Rows in Spark Using Where and Filter Analyticshut

From www.educba.com

Filter Function in Matlab Different Examples of Filter Function in Matlab

From www.youtube.com

How Sort and Filter Works in Spark Spark Scenario Based Question

From www.youtube.com

How to Make "Which are You" Instgram Filter Spark AR Tutorial YouTube

From sparkbyexamples.com

Use length function in substring in Spark Spark By {Examples}

From sparkbyexamples.com

Spark RDD filter() with examples Spark By {Examples}

From www.youtube.com

Frequency Response of the Cascade of Filters and Notch Filters YouTube

From sparkbyexamples.com

Filter in sparklyr R Interface to Spark Spark By {Examples}

From www.researchgate.net

Figure .. Overview of a DNN (a). Filter functions are computed by

From sparkbyexamples.com

Difference Between filter() and where() in Spark? Spark By {Examples}

From sparkbyexamples.com

R dplyr filter() Subset DataFrame Rows Spark By {Examples}

From betterdatascience.com

Apache Spark for Data Science UserDefined Functions (UDF) Explained

From autosourcepk.blogspot.com

Auto source SPARK PLUG FUNCTIONS, CONSTRUCTION, WORKING PRINCIPLE AND

From sparkbyexamples.com

Filter Spark DataFrame Based on Date Spark By {Examples}

From sparkbyexamples.com

Spark SQL Functions Archives Page 5 of 5 Spark By {Examples}