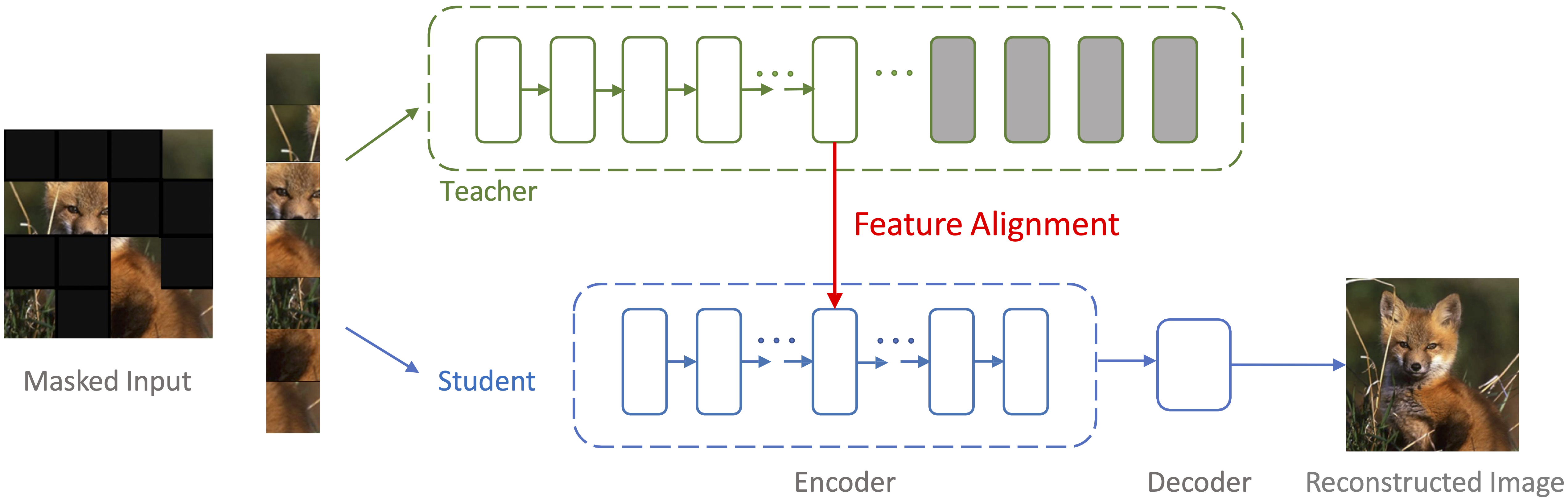

Masked Autoencoders Enable Efficient Knowledge Distillers, by Yutong Bai and 7 other authors (Yutong Bai, Zeyu Wang, Junfei Xiao, Chen Wei, Huiyu Wang, Alan Yuille, Yuyin Zhou, ...). This work is a small step towards unleashing the potential of knowledge distillation, a popular model compression technique, within ... The experimental results demonstrate that masked modeling does not lose effectiveness even without reconstruction on masked regions, ...

From paperswithcode.com


Masked Autoencoders Enable Efficient Knowledge Distillers Papers With


From deepai.org

AdaMAE Adaptive Masking for Efficient Spatiotemporal Learning with

From www.catalyzex.com

Masked Autoencoders Enable Efficient Knowledge Distillers Paper and

From blog.csdn.net

Masked Autoencoders Are Scalable Vision Learners_he k, chen x, xie s

From viblo.asia

Paper reading VideoMAE Masked Autoencoders are DataEfficient

From www.semanticscholar.org

Figure 2 from Efficient Transformer Inference for Extremely Weak Edge

From deepai.org

How to Understand Masked Autoencoders DeepAI

From www.researchgate.net

(PDF) Masked Autoencoders are Efficient Class Incremental Learners

From zhuanlan.zhihu.com

[2022] Masked Autoencoders for Point Cloud Selfsupervised Learning 知乎

From www.marktechpost.com

UC Berkeley and UCSF Researchers Propose CrossAttention Masked

From paperswithcode.com

Masked Autoencoders Enable Efficient Knowledge Distillers Papers With

From www.youtube.com

"I" am the teacher and "I" am the student. Masked Autoencoders Enable Efficient Knowledge

From www.semanticscholar.org

Figure 1 from GenerictoSpecific Distillation of Masked Autoencoders

From multiplatform.ai

CrossAttention Masked Autoencoders (CrossMAE) Revolutionizing

From www.youtube.com

Masked Autoencoders that Listen YouTube

From www.v7labs.com

An Introduction to Autoencoders Everything You Need to Know

From www.researchgate.net

(PDF) Efficient Masked Autoencoders with SelfConsistency

From www.youtube.com

Masked Autoencoders (MAE) Paper Explained YouTube

From www.semanticscholar.org

Figure 2 from AdaMAE Adaptive Masking for Efficient Spatiotemporal

From weichen582.github.io

Chen Wei

From paperswithcode.com

ConvMAE Masked Convolution Meets Masked Autoencoders Papers With Code

From www.semanticscholar.org

[PDF] CMAEV Contrastive Masked Autoencoders for Video Action

From paperswithcode.com

SupMAE Supervised Masked Autoencoders Are Efficient Vision Learners

From www.semanticscholar.org

Figure 2 from Masked Autoencoders are Efficient Class Incremental

From deepai.org

Masked Autoencoders Enable Efficient Knowledge Distillers DeepAI

From www.youtube.com

[CVPR 2023] Masked Autoencoders Enable Efficient Knowledge Distillers

From huggingface.co

Paper page VideoMAE Masked Autoencoders are DataEfficient Learners

From www.semanticscholar.org

Figure 2 from GenerictoSpecific Distillation of Masked Autoencoders

From deepai.org

Masked Autoencoders are Efficient Class Incremental Learners DeepAI

From www.semanticscholar.org

Figure 2 from Visual Representation Learning from Unlabeled Video using

From blog.csdn.net

"Masked Autoencoders": a roundup of the MAE algorithm and follow-up work (MAE-related work, CSDN blog)

From paperswithcode.com

MTSMAE Masked Autoencoders for Multivariate TimeSeries Forecasting

From www.semanticscholar.org

Table 3 from Masked Autoencoders Enable Efficient Knowledge Distillers

From deepai.org

Hybrid Distillation Connecting Masked Autoencoders with Contrastive

From www.semanticscholar.org

Figure 1 from Ada MAE Adaptive Masking for Efficient Spatiotemporal

From www.semanticscholar.org

Figure 1 from Improving Masked Autoencoders by Learning Where to Mask