Rdd Get Number Partitions

In Apache Spark, the RDD.getNumPartitions() method returns the number of partitions in an RDD (resilient distributed dataset) as an int. A DataFrame does not need a separate method for this: to find its number of partitions, access the underlying RDD and call the same method on it, which in PySpark is df.rdd.getNumPartitions(). Once you have the number of partitions, you can estimate the average size of each partition by dividing the total size of the RDD by the number of partitions. This is only an approximation, because rows are not guaranteed to be spread evenly across partitions.
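A minimal PySpark sketch of the steps above. The helper names (approx_partition_size, partition_summary, demo), the local[4] master, the sample data, and the 512 MiB total size are assumptions made for illustration. Only getNumPartitions(), glom(), parallelize(), repartition() and the df.rdd accessor are Spark's own API.

```python
def approx_partition_size(total_size_bytes, num_partitions):
    """Average bytes per partition: total size divided by partition count.

    This is an estimate only; real partitions are rarely equal in size.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return total_size_bytes / num_partitions


def partition_summary(rdd):
    """Return (partition count, list of element counts per partition)."""
    num_partitions = rdd.getNumPartitions()
    # glom() turns each partition into one list, so len() counts its elements.
    elements_per_partition = rdd.glom().map(len).collect()
    return num_partitions, elements_per_partition


def demo():
    """Hypothetical demo; requires PySpark to be installed."""
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[4]")
        .appName("partitions-demo")
        .getOrCreate()
    )

    # RDD: call getNumPartitions() directly.
    rdd = spark.sparkContext.parallelize(range(100), 4)
    print("RDD partitions:", rdd.getNumPartitions())
    print("RDD summary:", partition_summary(rdd))

    # DataFrame: go through the underlying RDD.
    df = spark.range(1000).repartition(8)
    num_partitions = df.rdd.getNumPartitions()
    print("DataFrame partitions:", num_partitions)

    # Assumed total size of 512 MiB, purely for the arithmetic.
    total_size_bytes = 512 * 1024 * 1024
    print("Approx bytes per partition:",
          approx_partition_size(total_size_bytes, num_partitions))

    spark.stop()
```

Calling demo() in an environment with PySpark installed prints the partition counts for both the RDD and the DataFrame. The per-partition element counts from glom() are a quick way to check how far the real distribution is from the simple average.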

From giobtyevn.blob.core.windows.net

Df Rdd Getnumpartitions Pyspark at Lee Lemus blog

From subscription.packtpub.com

RDD partitioning Apache Spark 2.x for Java Developers

From www.youtube.com

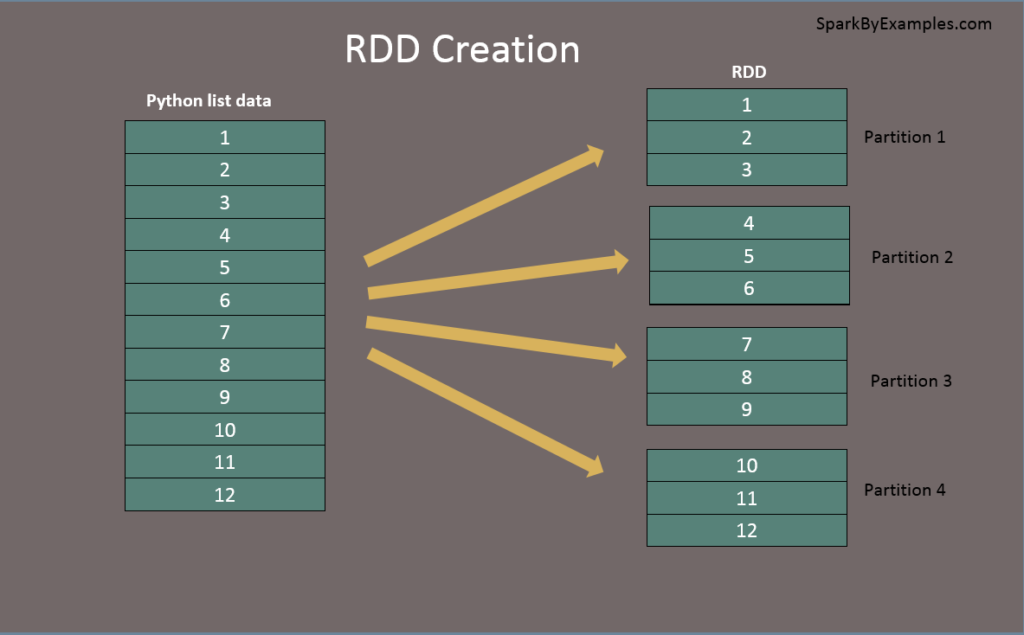

3 Create RDD using List RDD with Partition in PySpark in Hindi

From stackoverflow.com

Increasing the speed for Spark DataFrame to RDD conversion by possibly

From classroomsecrets.co.uk

Partition Numbers to 100 Classroom Secrets

From zhuanlan.zhihu.com

[NSDI'12] Resilient Distributed Datasets 知乎

From blog.csdn.net

spark学习13之RDD的partitions数目获取_spark中的一个ask可以处理一个rdd中客个partition的数CSDN博客 (Spark study notes 13: getting the number of partitions of an RDD, CSDN blog)

From slideplayer.com

Working with Key/Value Pairs ppt download

From slideplayer.com

Lecture 29 Distributed Systems ppt download

From slidetodoc.com

Introduction to Spark Internals Matei Zaharia UC Berkeley

From nbviewer.org

Lab0_Spark_Tutorial_RDD Databricks

From markglh.github.io

Big Data in IoT

From www.jamesandchey.net

SQL Server Management Studio Using Row_Number, Partition, and

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark

From matnoble.github.io

图解Spark RDD的五大特性 MatNoble (Illustrated guide to the five main properties of Spark RDDs)

From toien.github.io

Spark 分区数量 Kwritin (Spark number of partitions)

From www.youtube.com

How to create partitions in RDD YouTube

From www.researchgate.net

Execution diagram for the map primitive. The primitive takes an RDD

From www.youtube.com

Why should we partition the data in spark? YouTube

From stackoverflow.com

disk partitioning In GUID Partition Table how can I know how many

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples}

From blog.csdn.net

RDD用法与实例(五):glom的用法_rdd.glomCSDN博客 (RDD usage and examples, part 5: using glom, CSDN blog)

From www.youtube.com

How To Fix The Selected Disk Already Contains the Maximum Number of

From www.dezyre.com

How Data Partitioning in Spark helps achieve more parallelism?

From eureka.patsnap.com

RDD partition internal data index establishing method, click checking

From www.cloudduggu.com

Apache Spark RDD Introduction Tutorial CloudDuggu

From www.bigdatainrealworld.com

What is RDD? Big Data In Real World

From blog.csdn.net

pysparkRddgroupbygroupByKeycogroupgroupWith用法_pyspark rdd groupby (PySpark RDD groupBy, groupByKey, cogroup and groupWith usage)

From www.youtube.com

What is RDD partitioning YouTube