torch.nn.Embedding Sparse

When should you set `sparse=True` for an embedding layer, and what are the pros and cons of the sparse and dense versions? The layer is declared as `class torch.nn.Embedding(num_embeddings, embedding_dim, padding_idx=None, max_norm=None, norm_type=2.0, ...)`, and the relevant constructor flag is `sparse` (default `False`). With `sparse=True`, the gradient with respect to the `weight` matrix is a sparse tensor; the weight itself stays an ordinary dense parameter. To enable the mode, pass `sparse=True` when you construct the layer. See the Notes under `torch.nn.Embedding` in the PyTorch documentation for more details regarding sparse gradients.
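
A minimal sketch of defining a layer with `sparse=True` and inspecting the resulting gradient. The table size (10 × 3) and the index batch are arbitrary values chosen for illustration. After one backward pass, `weight` is still dense, while `weight.grad` is a sparse COO tensor whose entries cover only the rows that were looked up.

```python
import torch
import torch.nn as nn

# Illustrative sizes: a 10-row table of 3-dimensional vectors.
emb = nn.Embedding(num_embeddings=10, embedding_dim=3, sparse=True)

# This batch only looks up rows 1, 2 and 4 (each one twice).
idx = torch.tensor([[1, 2, 4], [4, 2, 1]])
loss = emb(idx).sum()
loss.backward()

grad = emb.weight.grad
print(emb.weight.is_sparse)  # False: the parameter itself stays dense
print(grad.is_sparse)        # True: its gradient uses the sparse COO layout

# Coalescing merges duplicate lookups; only the touched rows are stored.
g = grad.coalesce()
print(g.indices().flatten().tolist())  # [1, 2, 4]
print(g.values())  # three stored rows, each all 2.0 (two lookups per row)
```

The stored gradient has one row per distinct index in the batch rather than one row per table entry, which is where the memory saving comes from.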


Sparse gradients are mainly a performance feature. In each step an embedding layer only looks up the rows whose indices appear in the batch, so a sparse gradient stores just those rows instead of a full `num_embeddings × embedding_dim` matrix that is mostly zeros. For very large tables, such as the user and item embeddings in deep learning recommender systems, this can speed up training and reduce memory usage. The main drawback is optimizer support: the PyTorch documentation notes that only a limited number of optimizers accept sparse gradients, currently `optim.SGD` (CUDA and CPU), `optim.SparseAdam` (CUDA and CPU) and `optim.Adagrad` (CPU). With a sparse-aware optimizer such as `SparseAdam`, the elementwise running mean and variance estimates of the gradient (Adam's first and second moments) are updated only for the rows that actually received a gradient. For small vocabularies, where the full dense gradient is cheap anyway, the dense version leaves you free to use any optimizer.
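
The optimizer constraint can be checked directly. This sketch assumes an arbitrary 1000 × 8 table and a three-index batch of hypothetical item ids. It takes one `torch.optim.SparseAdam` step and confirms that only the looked-up rows changed, then shows that plain `torch.optim.Adam` rejects the same kind of sparse gradient with a `RuntimeError`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
emb = nn.Embedding(1000, 8, sparse=True)
opt = torch.optim.SparseAdam(emb.parameters(), lr=0.01)

before = emb.weight.detach().clone()
batch = torch.tensor([3, 17, 42])  # hypothetical item ids

opt.zero_grad()
loss = emb(batch).pow(2).sum()
loss.backward()
opt.step()

# Rows whose values moved after one SparseAdam step.
changed = (emb.weight.detach() != before).any(dim=1).nonzero().flatten().tolist()
print(changed)  # [3, 17, 42]

# Dense Adam does not accept sparse gradients.
adam = torch.optim.Adam(emb.parameters(), lr=0.01)
adam.zero_grad()
emb(batch).sum().backward()
try:
    adam.step()
    adam_accepts_sparse = True
except RuntimeError as err:
    adam_accepts_sparse = False
    print("Adam:", err)
```

The other 997 rows, and their moment estimates, are never touched by the `SparseAdam` step, which is the behaviour a dense optimizer cannot provide for a sparse gradient.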


Outside of embeddings, PyTorch aims to make it straightforward to construct a sparse tensor from a given dense tensor by providing conversion methods, for example `Tensor.to_sparse()` for the COO layout, with `Tensor.to_dense()` converting back.
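
A short sketch of the dense-to-sparse conversion with `Tensor.to_sparse()`. The 3 × 3 matrix is made-up data. Calling `to_sparse()` with no argument stores every nonzero element in COO form; passing `1` (the number of sparse dimensions) keeps whole nonzero rows as dense values, which is the same row-wise layout an embedding's sparse gradient uses.

```python
import torch

dense = torch.tensor([[0., 0., 3.],
                      [0., 0., 0.],
                      [1., 0., 0.]])

# Fully sparse COO: one (row, col) index pair per nonzero element.
coo = dense.to_sparse()
print(coo.indices().tolist())  # [[0, 2], [2, 0]]
print(coo.values().tolist())   # [3.0, 1.0]

# Hybrid COO with one sparse dimension: one entry per nonzero row,
# each entry holding the full dense row.
rows = dense.to_sparse(1)
print(rows.indices().tolist())  # [[0, 2]]
print(rows.values().tolist())   # [[0.0, 0.0, 3.0], [1.0, 0.0, 0.0]]

# Converting back recovers the original tensor in both cases.
print(torch.equal(coo.to_dense(), dense), torch.equal(rows.to_dense(), dense))  # True True
```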

From jamesmccaffrey.wordpress.com

PyTorch Word Embedding Layer from Scratch James D. McCaffrey Torch.nn.embedding Sparse See notes under torch.nn.embedding for more details regarding. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); Weight will be a sparse tensor. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Class torch.nn.embedding(num_embeddings, embedding_dim,. Torch.nn.embedding Sparse.

From www.youtube.com

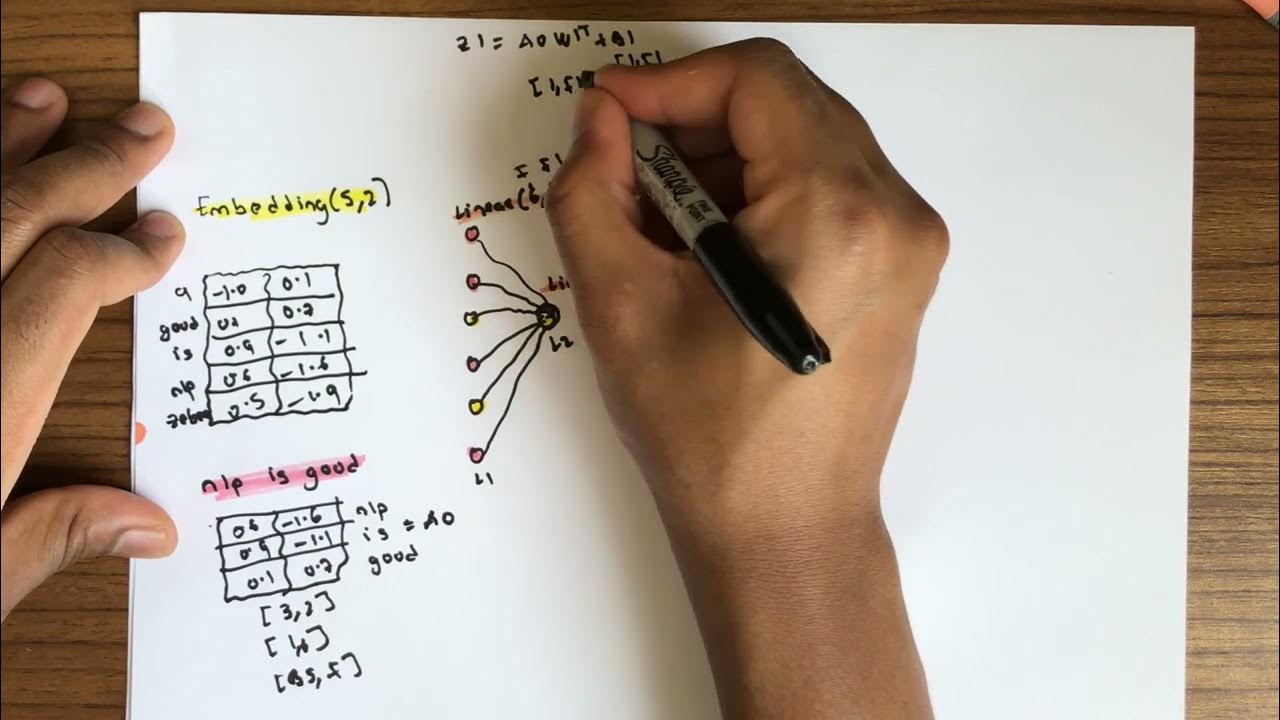

torch.nn.Embedding explained (+ Characterlevel language model) YouTube Torch.nn.embedding Sparse We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. When should i choose to set sparse=true for an embedding layer? Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Weight will be a. Torch.nn.embedding Sparse.

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial Torch.nn.embedding Sparse See notes under torch.nn.embedding for more details regarding. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. What are the pros and cons of the sparse and dense versions. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. So you define your embedding as follows. Sparse gradients mode can be enabled. Torch.nn.embedding Sparse.

From blog.csdn.net

torch.nn.Embedding()参数讲解_nn.embedding参数CSDN博客 Torch.nn.embedding Sparse So you define your embedding as follows. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. When should i choose to set sparse=true for an embedding layer? What are the pros and cons of the sparse and dense versions. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Sparse gradients mode can be enabled. Torch.nn.embedding Sparse.

From blog.csdn.net

关于nn.embedding的理解_nn.embedding怎么处理floatCSDN博客 Torch.nn.embedding Sparse See notes under torch.nn.embedding for more details regarding. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. So you define your embedding as follows. Weight will be a sparse tensor. What are the pros and cons of. Torch.nn.embedding Sparse.

From blog.csdn.net

【python函数】torch.nn.Embedding函数用法图解CSDN博客 Torch.nn.embedding Sparse When should i choose to set sparse=true for an embedding layer? Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. What are the pros and cons of the sparse and dense versions. See notes under torch.nn.embedding for. Torch.nn.embedding Sparse.

From blog.csdn.net

「详解」torch.nn.Fold和torch.nn.Unfold操作_torch.unfoldCSDN博客 Torch.nn.embedding Sparse So you define your embedding as follows. Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); What are the pros and cons of the sparse and dense versions. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. See notes. Torch.nn.embedding Sparse.

From github.com

Embedding Sparse · Issue 17912 · pytorch/pytorch · GitHub Torch.nn.embedding Sparse See notes under torch.nn.embedding for more details regarding. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); Learn how to speed up and reduce memory usage of deep. Torch.nn.embedding Sparse.

From blog.51cto.com

【Pytorch基础教程28】浅谈torch.nn.embedding_51CTO博客_Pytorch 教程 Torch.nn.embedding Sparse Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); So you define your embedding as follows. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. See notes under torch.nn.embedding for. Torch.nn.embedding Sparse.

From discuss.pytorch.org

[Solved, Self Implementing] How to return sparse tensor from nn Torch.nn.embedding Sparse So you define your embedding as follows. Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are. Torch.nn.embedding Sparse.

From blog.csdn.net

什么是embedding(把物体编码为一个低维稠密向量),pytorch中nn.Embedding原理及使用_embedding_dim Torch.nn.embedding Sparse Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); Weight will be a sparse tensor. What are the pros and cons of the sparse and dense. Torch.nn.embedding Sparse.

From www.youtube.com

sparse=True in nn.Embedding in PyTorch YouTube Torch.nn.embedding Sparse Weight will be a sparse tensor. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. So you define your embedding as follows. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. See notes under torch.nn.embedding for more details regarding. We want it to be straightforward to. Torch.nn.embedding Sparse.

From blog.csdn.net

小白学Pytorch系列Torch.nn API Sparse Layers(12)_pytorch的sparse layerCSDN博客 Torch.nn.embedding Sparse Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. See notes under torch.nn.embedding for more details regarding. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); We want it to be straightforward to construct a. Torch.nn.embedding Sparse.

From blog.csdn.net

【Pytorch学习】nn.Embedding的讲解及使用CSDN博客 Torch.nn.embedding Sparse Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. What are the pros and cons of the sparse and dense versions. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. So you define your embedding as follows. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Learn how to speed up and reduce memory usage of deep. Torch.nn.embedding Sparse.

From blog.csdn.net

pytorch 笔记: torch.nn.Embedding_pytorch embeding的权重CSDN博客 Torch.nn.embedding Sparse Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Weight will be a sparse tensor. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. See notes under torch.nn.embedding for. Torch.nn.embedding Sparse.

From www.ppmy.cn

nn.embedding函数详解(pytorch) Torch.nn.embedding Sparse Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. So you define your embedding as follows. What are the pros and cons of the sparse and dense versions. When should i choose to set sparse=true for an embedding layer? Weight will be a sparse tensor. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing. Torch.nn.embedding Sparse.

From ericpengshuai.github.io

【PyTorch】RNN 像我这样的人 Torch.nn.embedding Sparse Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. See notes under torch.nn.embedding for more details regarding. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Weight will be a sparse tensor. Sparse. Torch.nn.embedding Sparse.

From blog.csdn.net

torch.nn.Embedding参数详解之num_embeddings,embedding_dim_torchembeddingCSDN博客 Torch.nn.embedding Sparse Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. When should i choose to set sparse=true for an embedding layer? So you define your embedding as follows. We want it to be straightforward to construct a sparse tensor from a given dense. Torch.nn.embedding Sparse.

From www.youtube.com

torch.nn.Embedding How embedding weights are updated in Torch.nn.embedding Sparse Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. What are the pros and cons of the sparse and dense versions. See notes under torch.nn.embedding for more details. Torch.nn.embedding Sparse.

From blog.51cto.com

【Pytorch基础教程28】浅谈torch.nn.embedding_51CTO博客_Pytorch 教程 Torch.nn.embedding Sparse Weight will be a sparse tensor. So you define your embedding as follows. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. See notes under torch.nn.embedding for more details regarding. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated. Torch.nn.embedding Sparse.

From blog.csdn.net

「详解」torch.nn.Fold和torch.nn.Unfold操作_torch.unfoldCSDN博客 Torch.nn.embedding Sparse We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. See notes under torch.nn.embedding for more details regarding. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers);. Torch.nn.embedding Sparse.

From blog.csdn.net

【python函数】torch.nn.Embedding函数用法图解CSDN博客 Torch.nn.embedding Sparse What are the pros and cons of the sparse and dense versions. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. See notes under torch.nn.embedding for more details regarding. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. So you define your embedding as follows. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Weight will be. Torch.nn.embedding Sparse.

From zhuanlan.zhihu.com

Torch.nn.Embedding的用法 知乎 Torch.nn.embedding Sparse What are the pros and cons of the sparse and dense versions. See notes under torch.nn.embedding for more details regarding. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. So you define your. Torch.nn.embedding Sparse.

From blog.csdn.net

torch.nn.embedding的工作原理_nn.embedding原理CSDN博客 Torch.nn.embedding Sparse What are the pros and cons of the sparse and dense versions. So you define your embedding as follows. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Weight will be a sparse tensor. Sparse gradients mode can be. Torch.nn.embedding Sparse.

From www.researchgate.net

Looplevel representation for torch.nn.Linear(32, 32) through Torch.nn.embedding Sparse Weight will be a sparse tensor. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. What are the pros and cons of the sparse and dense versions. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. So you define your embedding as follows. Sparse gradients mode can be. Torch.nn.embedding Sparse.

From blog.csdn.net

torch.nn.Embedding()的固定化_embedding 固定初始化CSDN博客 Torch.nn.embedding Sparse Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); When should i choose to set sparse=true for an embedding layer? Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch. Torch.nn.embedding Sparse.

From zhuanlan.zhihu.com

Pytorch深入剖析 1torch.nn.Module方法及源码 知乎 Torch.nn.embedding Sparse Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); When should i choose to set sparse=true for an embedding layer? Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. See notes under torch.nn.embedding for more. Torch.nn.embedding Sparse.

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad Torch.nn.embedding Sparse When should i choose to set sparse=true for an embedding layer? Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by. Torch.nn.embedding Sparse.

From www.cnblogs.com

torch.nn.Embedding()实现文本转换词向量 luyizhou 博客园 Torch.nn.embedding Sparse We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. What are the pros and cons of the sparse and dense versions. Weight will be a sparse tensor. So you define your embedding as follows. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch. Torch.nn.embedding Sparse.

From blog.csdn.net

【Pytorch基础教程28】浅谈torch.nn.embedding_torch embeddingCSDN博客 Torch.nn.embedding Sparse What are the pros and cons of the sparse and dense versions. When should i choose to set sparse=true for an embedding layer? Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); We want it to be straightforward to construct a sparse tensor from a given dense. Torch.nn.embedding Sparse.

From blog.csdn.net

对nn.Embedding的理解以及nn.Embedding不能嵌入单个数值问题_nn.embedding(beCSDN博客 Torch.nn.embedding Sparse Weight will be a sparse tensor. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. See notes under torch.nn.embedding for more details regarding.. Torch.nn.embedding Sparse.

From blog.csdn.net

小白学Pytorch系列Torch.nn API Sparse Layers(12)_pytorch的sparse layerCSDN博客 Torch.nn.embedding Sparse Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); When should i choose to set sparse=true for an embedding layer? So you define your embedding as. Torch.nn.embedding Sparse.

From blog.csdn.net

小白学Pytorch系列Torch.nn API Sparse Layers(12)_pytorch的sparse layerCSDN博客 Torch.nn.embedding Sparse Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Learn how to speed up and reduce memory usage of deep learning recommender systems in pytorch by using sparse embedding layers. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. What are the pros and cons of the sparse and dense versions. Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates. Torch.nn.embedding Sparse.

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums Torch.nn.embedding Sparse We want it to be straightforward to construct a sparse tensor from a given dense tensor by providing conversion. When should i choose to set sparse=true for an embedding layer? Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. Class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none, max_norm=none, norm_type=2.0,. See notes under torch.nn.embedding for more details regarding. So you define your embedding as follows. Weight will be a. Torch.nn.embedding Sparse.

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial Torch.nn.embedding Sparse Sparse gradients mode can be enabled for nn.embedding, with it gradient elementwise mean & variance estimates are updated correctly (for specific optimizers); When should i choose to set sparse=true for an embedding layer? Weight will be a sparse tensor. Embedding class torch.nn.embedding(num_embeddings, embedding_dim, padding_idx=none,. We want it to be straightforward to construct a sparse tensor from a given dense tensor. Torch.nn.embedding Sparse.