PyTorch Embedding Implementation

The `nn.Embedding` module is often used to store word embeddings and retrieve them using indices. The input to the module is a list of indices. In summary, word embeddings are a representation of the *semantics* of a word, efficiently encoding semantic information.

I am currently working on the `Embedding` class in PyTorch and looked at its implementation. In this brief article I will show, through a simple example, how an embedding layer is equivalent to a linear layer (without the bias term). This example uses `nn.Embedding`, so the input to the `forward()` method is a list of word indexes (the implementation doesn't seem to use…). I've currently implemented my model to use just one embedding layer for both source and target tensors, but I'm…
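The equivalence between an embedding layer and a bias-free linear layer can be sketched as follows. This is a minimal illustration, assuming PyTorch is installed. The sizes `vocab_size` and `embed_dim` and the sample `indices` are hypothetical values chosen for the example and are not taken from the original article.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
vocab_size, embed_dim = 5, 3

# The embedding table: one row of length embed_dim per index.
embedding = nn.Embedding(vocab_size, embed_dim)

# A linear layer without bias that reuses the same parameters.
# nn.Linear stores its weight as (out_features, in_features),
# so the embedding table is copied in transposed form.
linear = nn.Linear(vocab_size, embed_dim, bias=False)
with torch.no_grad():
    linear.weight.copy_(embedding.weight.t())

# The input to the embedding module is a tensor of indices.
indices = torch.tensor([0, 2, 4])

# Path 1: direct lookup, which selects rows 0, 2 and 4 of the table.
looked_up = embedding(indices)

# Path 2: one-hot encode the indices, then apply the linear layer.
one_hot = F.one_hot(indices, num_classes=vocab_size).float()
projected = linear(one_hot)

# Both paths should give the same vectors.
same = torch.allclose(looked_up, projected)
print(looked_up.shape)
print(same)
```

The lookup avoids building the one-hot matrix and performing the matrix multiplication, which is presumably why an embedding layer is preferred in practice when the vocabulary is large.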

from blog.acolyer.org

this module is often used to store word embeddings and retrieve them using indices. in this brief article i will show how an embedding layer is equivalent to a linear layer (without the bias term) through a simple example. i am currently working on class embedding() in pytorch and i looked at its implementation. in summary, word embeddings are a representation of the *semantics* of a word, efficiently encoding semantic information. The input to the module is a list of indices, and the. this module is often used to retrieve word embeddings using indices. The input to the module is a list of indices,. i've currently implemented my model to use just one embedding layer for both source and target tensors, but i'm. this example uses nn.embedding so the inputs of the forward() method is a list of word indexes (the implementation doesn’t seem to use.

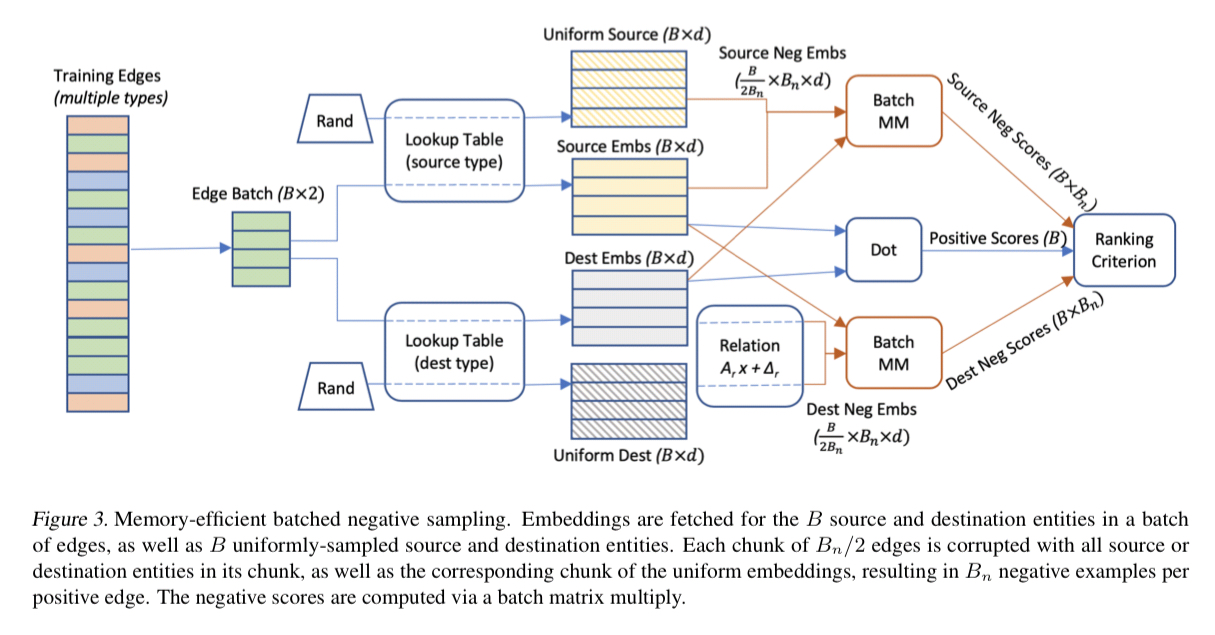

PyTorchBigGraph a largescale graph embedding system the morning paper

Pytorch Embedding Implementation i've currently implemented my model to use just one embedding layer for both source and target tensors, but i'm. this module is often used to retrieve word embeddings using indices. in summary, word embeddings are a representation of the *semantics* of a word, efficiently encoding semantic information. i've currently implemented my model to use just one embedding layer for both source and target tensors, but i'm. The input to the module is a list of indices,. this module is often used to store word embeddings and retrieve them using indices. in this brief article i will show how an embedding layer is equivalent to a linear layer (without the bias term) through a simple example. The input to the module is a list of indices, and the. i am currently working on class embedding() in pytorch and i looked at its implementation. this example uses nn.embedding so the inputs of the forward() method is a list of word indexes (the implementation doesn’t seem to use.
