Yarn Kill All Running Jobs.

Once a job is deployed and running on YARN, you can kill it if required, for example when a job has been hanging for a very long time. This article provides steps to kill Spark jobs submitted to a YARN cluster. You can kill a Spark job in several ways: through the Spark web UI, the YARN CLI, Kubernetes commands, or manually. With the YARN CLI, run the list command to show all the jobs, then use the job ID or application ID in the appropriate kill command. An application can also be killed by its name, by first looking up the application ID that matches that name. Sometimes you need to list all long-running jobs and kill the ones that exceed a threshold. Collecting every application ID from YARN and killing the applications one by one can be time-consuming, so you can use a bash for loop to automate this repetitive task.
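The list-then-kill loop described above could be sketched roughly as follows. This is a minimal sketch, not a definitive script: the function names `extract_app_ids` and `kill_running_apps` and the `YARN_BIN` variable are hypothetical, and it assumes that `yarn application -list` prints tab-separated columns with the application ID first and the application name second, which may differ between Hadoop versions.

```shell
# Sketch only: extract_app_ids, kill_running_apps and YARN_BIN are
# hypothetical names introduced for this example.

# Read `yarn application -list` output on stdin and print one application ID
# per line. Assumes tab-separated columns: ID first, application name second.
# Optional $1: keep only rows whose application name equals this string.
extract_app_ids() {
  awk -F'\t' -v want="${1:-}" '
    {
      id = $1; name = $2
      gsub(/^ +| +$/, "", id)     # trim the padding around each column
      gsub(/^ +| +$/, "", name)
      # Header and summary lines do not look like application IDs; skip them.
      if (id ~ /^application_[0-9]+_[0-9]+$/ && (want == "" || name == want)) print id
    }'
}

# Kill every RUNNING application, or only those named $1 when it is given.
# YARN_BIN (assumption) lets you point at a different yarn binary.
kill_running_apps() {
  local yarn_bin="${YARN_BIN:-yarn}"
  local app_id
  for app_id in $("$yarn_bin" application -list -appStates RUNNING | extract_app_ids "${1:-}"); do
    echo "Killing $app_id"
    "$yarn_bin" application -kill "$app_id" < /dev/null
  done
}
```

Calling `kill_running_apps` with no argument targets every RUNNING application, while `kill_running_apps nightly-etl` (a made-up job name) targets only applications with that name. To preview what would be killed, pipe `yarn application -list -appStates RUNNING` into `extract_app_ids` on its own first.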

From blog.csdn.net

Spark on YARN: the YARN queue never releases resources after an HSQL command finishes (Hive: resources never allocated after submitting a job), CSDN blog

From lightrun.com

Errors when running `yarn start`

From www.cnblogs.com

hadoop job kill and yarn application kill (when a job is stuck, a job is submitted twice, or a MapReduce task runs to

From www.dreamstime.com

Yarn Thread Running in the Machine Stock Image Image of manufacturing

From www.dreamstime.com

Yarn Thread Running in the Machine Stock Photo Image of factory

From velog.io

[Day 58] How to fix yarn run and yarn dev errors

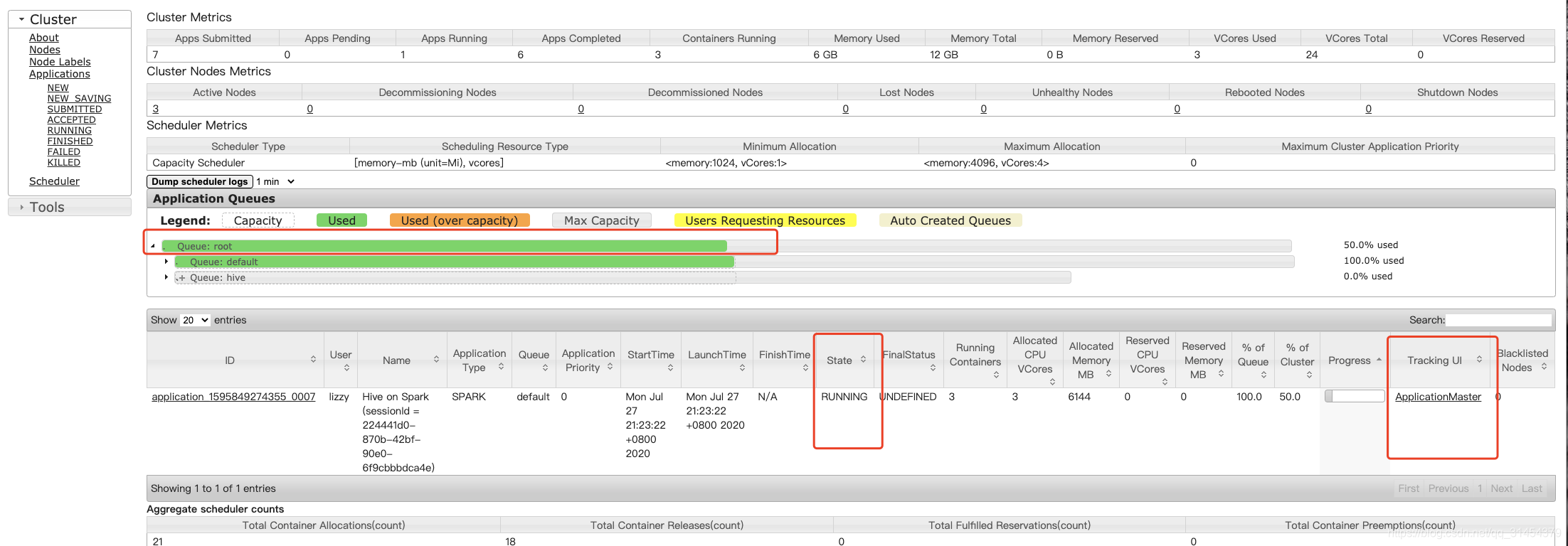

From community.cloudera.com

Solved: Once YARN queue is at capacity, running jobs stop

From blog.csdn.net

Spark on YARN: killing a running process (failing over to rm84), CSDN blog

From ahmedzbyr.gitlab.io

Long Running Jobs in YARN distcp. AHMED ZBYR

From www.youtube.com

YARN Running Map Reduce Jobs YouTube

From stackoverflow.com

hadoop yarn How to kill a running Spark application? Stack Overflow

From linuxhint.com

How to Install Yarn on Windows

From www.simplilearn.com.cach3.com

Yarn Tutorial

From github.com

`yarn run deploy` not working · Issue 7460 · facebook/createreactapp

From www.junyao.tech

Flink on yarn 俊瑶先森

From trishagurumi.com

Yarn Types and Weights A Starting Guide trishagurumi

From www.youtube.com

RUNNING PILE WARP YARNS THROUGH FRAMES AND JACQUARD en341 YouTube

From winway.github.io

YARN cluster jobs stay in the Accepted state and never reach Running, winway's blog

From www.youtube.com

Did npm just kill yarn? YouTube

From blog.csdn.net

Setting up Flink in Flink on YARN cluster mode (Flink on YARN dynamic deployment), CSDN blog

From blog.csdn.net

The two Flink on YARN run modes explained (Flink on YARN run modes), CSDN blog

From www.pinterest.com

New yarnbomb in progress. Yarn art, Installation art, Installation

From medium.com

Running Spark Jobs on YARN. When running Spark on YARN, each Spark

From github.com

When yarn build is run for the first time, the files of yarn build are

From stackoverflow.com

amazon web services Kill YARN application according to user name

From docs.cloudera.com

Viewing the YARN job history

From www.youtube.com

Yarn Run In Calculation YouTube

From www.craiyon.com

Yarn mario character on Craiyon

From www.youtube.com

030 Job Run YARN in hadoop YouTube

From www.youtube.com

Ran out of yarn! Add More Yarn to Your Knit YouTube

From www.youtube.com

What To Do if You Run Out of Yarn Mid Project How to Attach Yarn YouTube

From klaaavssy.blob.core.windows.net

Yarn Kill Accepted Application at Cythia Jackson blog

From ceqdybus.blob.core.windows.net

Yarn Command Kill Application at Yvonne Kilgore blog

From gamercrafting.com

I can kill you with my brain speckled sock yarn GamerCrafting Yarns