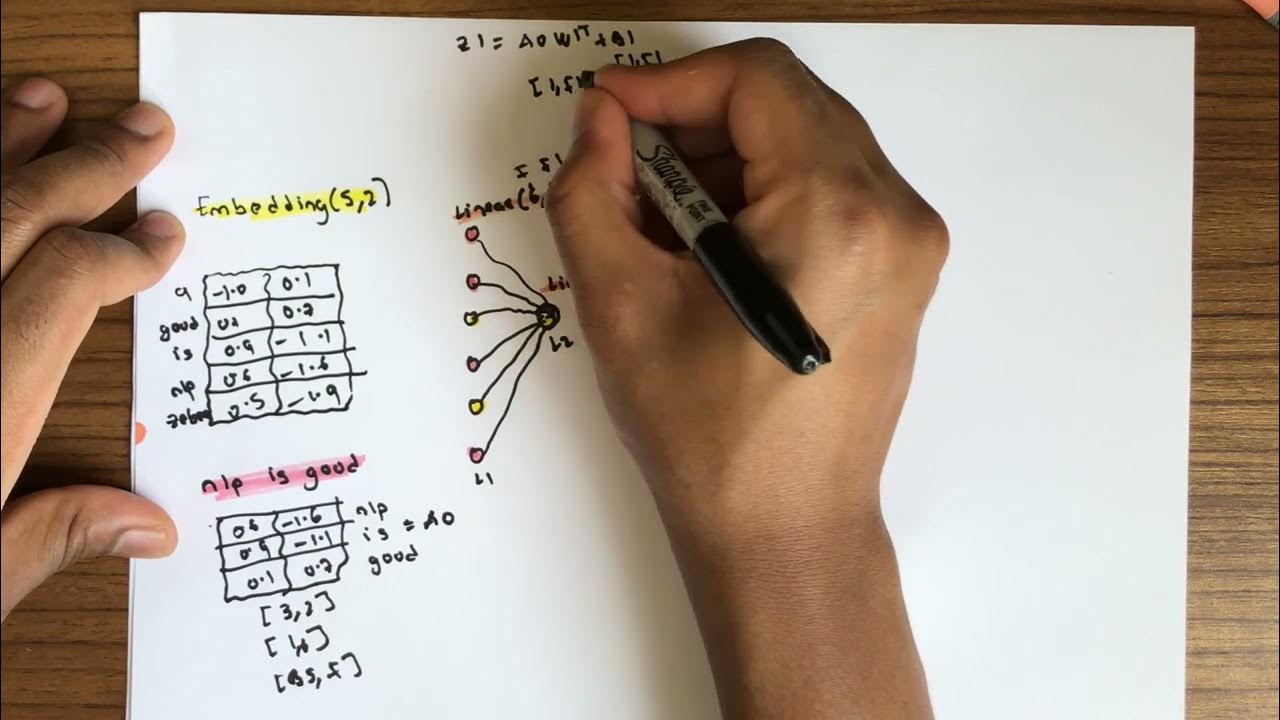

Torch Embedding. You might have seen the famous PyTorch nn.Embedding layer in many neural network architectures that involve natural language processing (NLP). It is one of the simplest and most important layers when it comes to designing advanced NLP architectures. In simple terms, nn.Embedding is a lookup table that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. This mapping is done through an embedding matrix, which has one row per vocabulary index and one column per embedding dimension. The module is often used to store word embeddings and retrieve them by index.
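The lookup-table behaviour described above can be sketched in a few lines. This is a minimal illustration, not taken from any of the linked pages; the vocabulary size, embedding dimension, and token indices are made-up values chosen only for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # only to make the random initial weights repeatable

# Hypothetical sizes: a 10-entry vocabulary mapped to 4-dimensional vectors.
vocab_size, embedding_dim = 10, 4
embedding = nn.Embedding(num_embeddings=vocab_size, embedding_dim=embedding_dim)

# A batch of 2 sequences, each holding 3 integer token indices.
token_ids = torch.tensor([[1, 2, 4], [4, 3, 9]])
vectors = embedding(token_ids)

print(embedding.weight.shape)  # torch.Size([10, 4])
print(vectors.shape)           # torch.Size([2, 3, 4])

# The forward pass is plain row indexing into the weight matrix.
same_as_indexing = torch.equal(vectors, embedding.weight[token_ids])
print(same_as_indexing)
```

Every index must lie in the range 0 to vocab_size - 1; an index outside that range raises an "index out of range" error.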

From www.youtube.com

torch.nn.Embedding How embedding weights are updated in
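On the question of how embedding weights are updated, the sketch below shows the usual behaviour with plain SGD: only the rows that were actually looked up receive a nonzero gradient, so only those rows change after an optimizer step. The sizes, indices, learning rate, and the toy sum loss are assumptions made for illustration; this is not a reconstruction of the linked video.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes: 5 rows in the table, 3 values per row.
embedding = nn.Embedding(num_embeddings=5, embedding_dim=3)
optimizer = torch.optim.SGD(embedding.parameters(), lr=0.1)

before = embedding.weight.detach().clone()

# Look up rows 0 and 2 only, then backpropagate a toy loss.
token_ids = torch.tensor([0, 2])
loss = embedding(token_ids).sum()
loss.backward()

# Gradient rows are nonzero only for the indices that were looked up.
rows_with_grad = embedding.weight.grad.abs().sum(dim=1) > 0
optimizer.step()

# After the step, only those same rows differ from their initial values.
changed_rows = (embedding.weight.detach() != before).any(dim=1)
print(rows_with_grad.tolist())  # [True, False, True, False, False]
print(changed_rows.tolist())    # [True, False, True, False, False]
```

Optimizers that use weight decay or momentum can also move rows that were not looked up in the current step, so this row-wise picture holds cleanly only for plain SGD as used here.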

Word embeddings are dense vectors of real numbers that encode lexical semantics in natural language processing, and torch.nn.Embedding is the usual way to create and retrieve them from a fixed dictionary and size. A discussion thread on the difference between the nn.Embedding and nn.Linear layers in PyTorch covers how each is used for word representation in NLP tasks.
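One way to see how nn.Embedding relates to nn.Linear is to note that an index lookup gives the same result as multiplying a one-hot vector by the same weight matrix through a bias-free linear layer. The sketch below checks this under assumed toy sizes. It illustrates the general relationship and is not a summary of the discussion thread itself.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical sizes chosen for the example.
vocab_size, embedding_dim = 6, 3
embedding = nn.Embedding(vocab_size, embedding_dim)

# nn.Linear stores its weight as (out_features, in_features),
# so the embedding matrix is copied in transposed form.
linear = nn.Linear(vocab_size, embedding_dim, bias=False)
with torch.no_grad():
    linear.weight.copy_(embedding.weight.t())

token_ids = torch.tensor([5, 0, 3])
one_hot = F.one_hot(token_ids, num_classes=vocab_size).float()

from_lookup = embedding(token_ids)  # direct row lookup by index
from_matmul = linear(one_hot)       # one-hot vectors times the same matrix

print(torch.allclose(from_lookup, from_matmul))  # True
```

The lookup avoids building the one-hot vectors and the full matrix product, which is why an embedding layer is the usual choice when the inputs are integer indices.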

From www.panthereast.com

EBK2 Stand Up Granule Embedding Torch Kit SIEVERT

From github.com

index out of range in self torch.embedding(weight, input, padding_idx

From www.educba.com

PyTorch Embedding Complete Guide on PyTorch Embedding

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers Scaler Topics

From github.com

GitHub CyberZHG/torchpositionembedding Position embedding in PyTorch

From blog.csdn.net

How torch.nn.embedding works (CSDN blog)

From yifanhu.net

Visualizing BERT Vocab/Token Embeddings

From exoxmgifz.blob.core.windows.net

Torch.embedding Source Code at David Allmon blog

From blog.51cto.com

[PyTorch Basics Tutorial 28] A brief look at torch.nn.embedding (51CTO blog, PyTorch tutorial)

From bugtoolz.com

Rotary Position Embedding (RoPE): principles explained plus a torch implementation (编程之家)

From blog.csdn.net

torch.nn.Embedding() parameters explained (CSDN blog)

From blog.csdn.net

torch.nn.Embedding parameters in detail: num_embeddings and embedding_dim (CSDN blog)

From zhuanlan.zhihu.com

How to use torch.nn.Embedding (Zhihu)

From blog.csdn.net

PyTorch notes: torch.nn.Embedding and the embedding weights (CSDN blog)

From www.researchgate.net

Implementation of Rotary Position Embedding (RoPE). Download

From blog.csdn.net

PNN (Product-based Neural Network): model study and torch reimplementation, including the PNN embedding layer (CSDN blog)

From blog.csdn.net

[Python functions] Illustrated usage of torch.nn.Embedding (CSDN blog)

From blog.csdn.net

[PyTorch Basics Tutorial 28] A brief look at torch.nn.embedding (CSDN blog)

From coderzcolumn.com

How to Use GloVe Word Embeddings With PyTorch Networks?

From blog.csdn.net

What is an embedding (encoding an object as a low-dimensional dense vector), and how nn.Embedding works and is used in PyTorch

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From theaisummer.com

Pytorch AI Summer

From www.youtube.com

torch.nn.Embedding explained (+ Characterlevel language model) YouTube

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums

From github.com

rotaryembeddingtorch/rotary_embedding_torch.py at main · lucidrains

From github.com

Is there any difference between the underlying implementations of paddle.embedding and torch.embedding? · Issue 44565

From colab.research.google.com

Google Colab

From github.com

GitHub PyTorch implementation of some
