PyTorch Module Embedding. Embedding layers are crucial for capturing semantic relationships in deep learning, especially in NLP tasks. nn.Embedding is a PyTorch layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. This mapping is done through an embedding matrix, which is a … In PyTorch, torch.nn.Embedding (part of the torch.nn module) is a building block used in neural networks, specifically … The module that allows you to use embeddings is torch.nn.Embedding, which takes two arguments: … The functional form is torch.nn.functional.embedding(input, weight, padding_idx=None, max_norm=None, norm_type=2.0, scale_grad_by_freq=False, …). In this brief article I will show how an embedding layer is equivalent to a linear layer (without the bias term) through a simple example. In the example below, we will use the same trivial vocabulary example.
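The snippets above can be made concrete with a minimal sketch. It assumes a three-word toy vocabulary and an embedding size of 4, both hypothetical and not taken from the quoted articles. It relies on the documented `torch.nn.Embedding(num_embeddings, embedding_dim)` constructor and on `torch.nn.functional.embedding(input, weight)`. The sketch checks three things: the layer returns rows of its weight matrix, the functional form gives the same result, and a bias-free `nn.Linear` applied to one-hot vectors reproduces the lookup.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical toy vocabulary, chosen only for illustration.
vocab = {"hello": 0, "world": 1, "pytorch": 2}
embedding_dim = 4  # assumed size of each dense vector

# The layer owns a weight matrix of shape (len(vocab), embedding_dim).
embedding = nn.Embedding(num_embeddings=len(vocab), embedding_dim=embedding_dim)

# Inputs are integer indices into the vocabulary, not one-hot vectors.
indices = torch.tensor([vocab["hello"], vocab["pytorch"]], dtype=torch.long)

# 1) Module call: each index selects one row of the embedding matrix.
looked_up = embedding(indices)  # shape (2, embedding_dim)

# 2) Functional form: the same lookup with the weight passed explicitly.
functional = F.embedding(indices, embedding.weight)

# 3) Bias-free linear layer applied to one-hot inputs.
#    nn.Linear stores its weight as (out_features, in_features),
#    so the embedding matrix is copied in transposed.
linear = nn.Linear(len(vocab), embedding_dim, bias=False)
with torch.no_grad():
    linear.weight.copy_(embedding.weight.T)
one_hot = F.one_hot(indices, num_classes=len(vocab)).float()
via_linear = linear(one_hot)

same_as_rows = torch.equal(looked_up, embedding.weight[indices])
same_as_functional = torch.equal(looked_up, functional)
same_as_linear = torch.allclose(looked_up, via_linear)
print(looked_up.shape, same_as_rows, same_as_functional, same_as_linear)
```

The one-hot route only serves to show the equivalence. The index lookup avoids building the one-hot matrix and the matrix multiply, which is presumably why embedding layers are used for large vocabularies in practice.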

From zhuanlan.zhihu.com

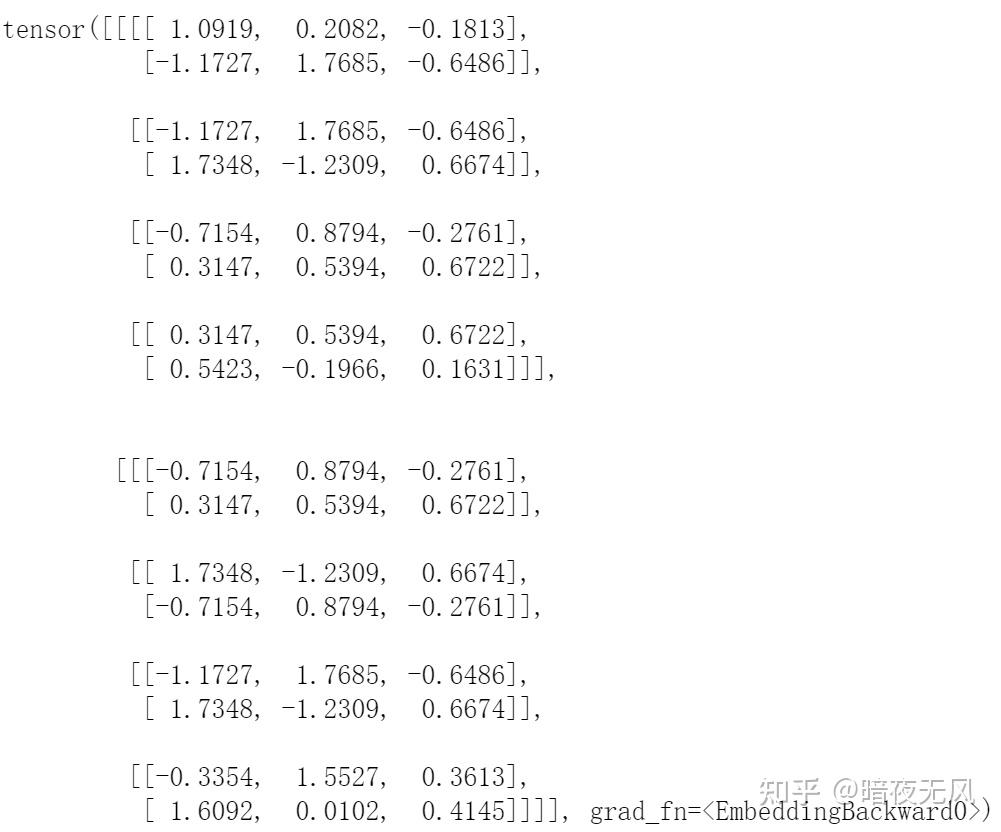

No-Brainer Introduction to PyTorch Series (1): nn.Embedding Zhihu

From zhuanlan.zhihu.com

Build an Entire Transformer Model with One Line of PyTorch Code Zhihu

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From blog.csdn.net

PyTorch notes: torch.nn.Embedding, PyTorch embedding weights CSDN Blog

From blog.eduonix.com

Learn To Build Neural Networks with PyTorch Eduonix Blog

From www.assemblyai.com

PyTorch Lightning for Dummies A Tutorial and Overview

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums

From towardsdatascience.com

PyTorch Geometric Graph Embedding by Anuradha Wickramarachchi

From www.youtube.com

Understanding Embedding Layer in PyTorch YouTube

From pytorch.org

Optimizing Production PyTorch Models’ Performance with Graph …

From barkmanoil.com

Pytorch Nn Embedding? The 18 Correct Answer

From blog.acolyer.org

PyTorch-BigGraph: a large-scale graph embedding system (the morning paper)

From github.com

Embedding layer tensor shape · Issue 99268 · pytorch/pytorch · GitHub

From blog.csdn.net

PyTorch Embedding Layer Explained (from Theory to Practice) CSDN Blog

From blog.csdn.net

"PyTorch Deep Learning Practice", Lesson 12 (Recurrent Neural Networks, RNN), with an Embedding add-on: RNN PyTorch code with embedding CSDN Blog

From www.educba.com

PyTorch Embedding Complete Guide on PyTorch Embedding

From www.youtube.com

[PyTorch] Understanding Embedding and LSTM Input/Output Tensor Shapes for Modeling YouTube

From clay-atlas.com

[PyTorch] Use "Embedding" Layer To Process Text ClayTechnology World

From aeyoo.net

Introduction to PyTorch Module TiuVe

From datapro.blog

PyTorch Installation Guide A Comprehensive Guide with Step-by-Step …

From www.youtube.com

Pytorch for Beginners 9 Extending Pytorch nn.Module properly YouTube

From zhuanlan.zhihu.com

First Experience with the PyTorch RPC Framework Zhihu

From theaisummer.com

PyTorch AI Summer

From wandb.ai

Interpret any PyTorch Model Using W&B Embedding Projector

From www.qinglite.cn

PyTorch Source Code Walkthrough: nn.Module 轻识

From kushalj001.github.io

Building Sequential Models in PyTorch Black Box ML

From opensourcebiology.eu

PyTorch Linear and PyTorch Embedding Layers Open Source Biology

From medium.com

Much Ado About PyTorch. Constructing RNN Models (LSTM, GRU… by Eniola

From www.youtube.com

What are PyTorch Embeddings Layers (6.4) YouTube

From velog.io

PyTorch Basics Summary

From sebarnold.net

nn package - PyTorch Tutorials 0.2.0_4 documentation

From www.aritrasen.com

Deep Learning with Pytorch Text Generation LSTMs 3.3