Design ETL Pipeline

Extract, transform, load (ETL) is a data pipeline used to collect data from various sources. It transforms the data according to business rules and then loads it into a destination data store. More concretely, an ETL pipeline is the set of processes used to move data from one or more sources into a database such as a data warehouse, and the transfer between source and destination passes through three stages: extract, transform, and load. Whether you're a seasoned data engineer or just stepping into the field, mastering ETL pipeline design is crucial.

Welcome to the world of ETL pipelines using Apache Airflow. In this tutorial, we will focus on pulling stock market data using the Polygon API, transforming this data, and loading it.
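The three stages can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the tutorial's code: the Polygon API call is replaced by a hypothetical `fetch_bars()` that returns in-memory sample bars, the business rule (compute the daily percentage change and drop rows with a non-positive open price) is an assumption, and an in-memory SQLite table named `daily_bars` (hypothetical) stands in for the data warehouse.

```python
import sqlite3
from typing import Iterable


def fetch_bars() -> list[dict]:
    """Extract: hypothetical stand-in for a market-data API call (sample data only)."""
    return [
        {"ticker": "AAA", "date": "2024-01-02", "open": 100.0, "close": 102.0},
        {"ticker": "AAA", "date": "2024-01-03", "open": 102.0, "close": 99.96},
        {"ticker": "BBB", "date": "2024-01-02", "open": 0.0, "close": 5.0},  # malformed row
    ]


def transform(rows: Iterable[dict]) -> list[tuple]:
    """Transform: apply an assumed business rule (daily % change, skip bad rows)."""
    out = []
    for r in rows:
        if r["open"] <= 0:
            continue  # skip rows that would divide by zero or are clearly invalid
        pct = round((r["close"] - r["open"]) / r["open"] * 100, 2)
        out.append((r["ticker"], r["date"], r["close"], pct))
    return out


def load(conn: sqlite3.Connection, records: list[tuple]) -> None:
    """Load: write transformed records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_bars ("
        "ticker TEXT, date TEXT, close REAL, pct_change REAL, "
        "PRIMARY KEY (ticker, date))"
    )
    # INSERT OR REPLACE keeps re-runs idempotent for the same (ticker, date) key
    conn.executemany("INSERT OR REPLACE INTO daily_bars VALUES (?, ?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection) -> int:
    """Run extract -> transform -> load once; return the number of records loaded."""
    records = transform(fetch_bars())
    load(conn, records)
    return len(records)


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    print(run_pipeline(db))
    print(db.execute("SELECT * FROM daily_bars ORDER BY date").fetchall())
```

Keeping each stage in its own function makes it straightforward to wrap them as separate tasks in an orchestrator such as Airflow, and the primary key plus `INSERT OR REPLACE` means a retried run does not duplicate rows.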

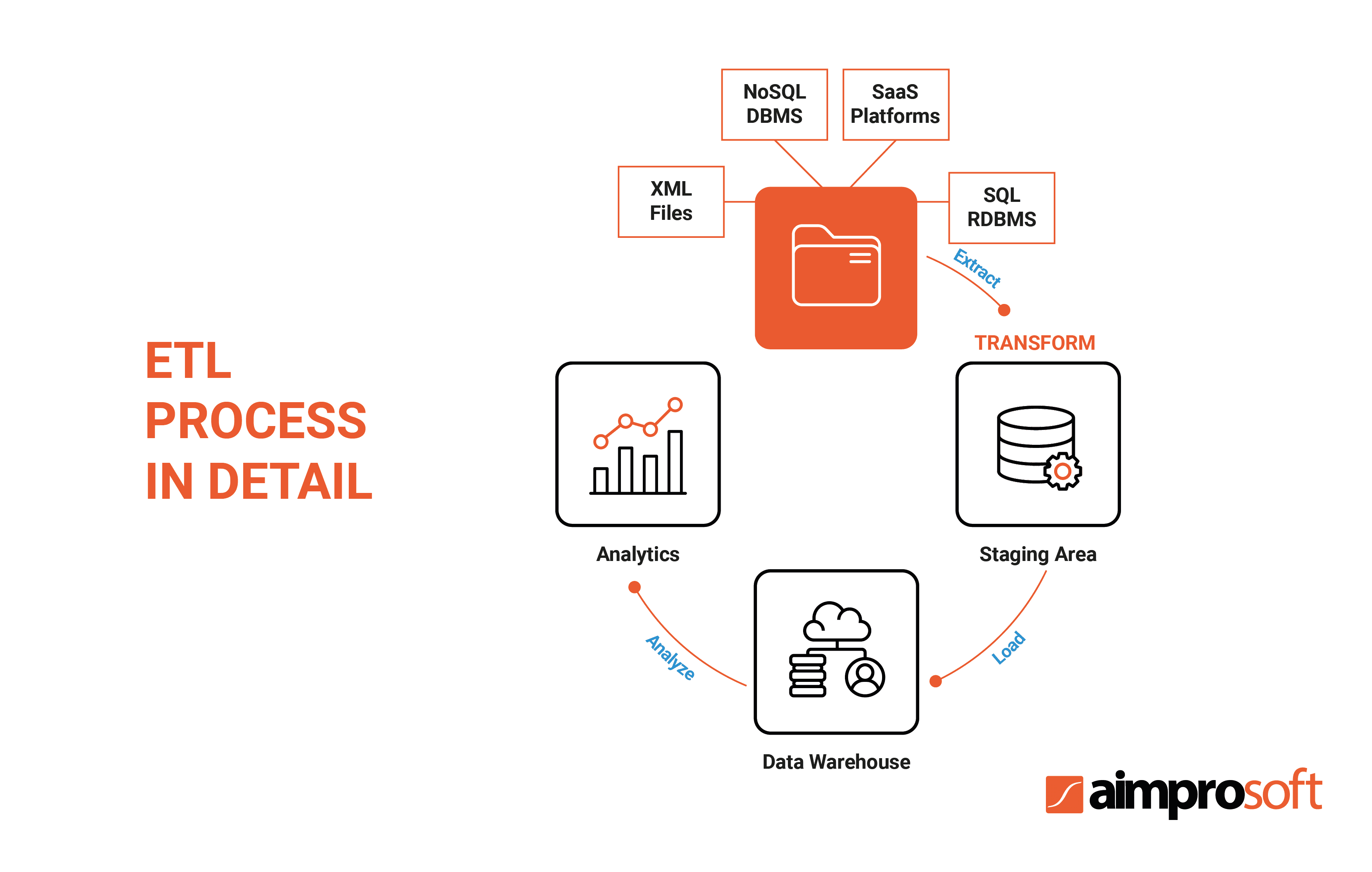

From www.aimprosoft.com

Developing an ETL Processes Best Practices Aimprosoft

From www.analyticsvidhya.com

A Complete Guide on Building an ETL Pipeline for Beginners Analytics

From www.youtube.com

What is Data Pipeline How to design Data Pipeline ? ETL vs Data

From databricks.com

Modernize your ETL pipelines to make your data more performant with

From streamsets.com

Data Pipeline Architecture A Deep Dive StreamSets

From dethwench.com

ETL Pipeline Documentation Here are my Tips and Tricks! DethWench

From skillcurb.com

ETL Pipeline Design for Beginners Architecture & Design Samples

From www.altexsoft.com

What is Data Pipeline Components, Types, and Use Cases AltexSoft

From redpanda.com

ETL pipeline Components, tips, and best practices

From www.zuar.com

What is an ETL Pipeline? Use Cases & Best Practices Zuar

From www.impetus.com

Building serverless ETL pipelines on AWS Impetus

From mozartdata.com

What are ETL & ELT Pipelines? Mozart Data
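The caption above contrasts ETL with ELT. As a rough sketch, assuming the destination can run SQL (SQLite stands in here) and using hypothetical table names `raw_bars` and `daily_bars`, ELT loads the raw rows first and only then runs the transformation inside the destination, whereas ETL transforms before loading.

```python
import sqlite3

# Hypothetical raw source rows: (ticker, date, open, close)
RAW_ROWS = [
    ("AAA", "2024-01-02", 100.0, 102.0),
    ("AAA", "2024-01-03", 102.0, 99.96),
    ("BBB", "2024-01-02", 0.0, 5.0),
]


def elt(conn: sqlite3.Connection) -> list[tuple]:
    """Load raw rows as-is, then transform them with SQL inside the destination."""
    # Load: copy raw rows into a staging table with no cleaning applied
    conn.execute("CREATE TABLE raw_bars (ticker TEXT, date TEXT, open REAL, close REAL)")
    conn.executemany("INSERT INTO raw_bars VALUES (?, ?, ?, ?)", RAW_ROWS)
    # Transform: express the (assumed) business rule as a query in the destination
    conn.execute(
        "CREATE TABLE daily_bars AS "
        "SELECT ticker, date, close, "
        "ROUND((close - open) / open * 100, 2) AS pct_change "
        "FROM raw_bars WHERE open > 0"
    )
    return conn.execute("SELECT ticker, pct_change FROM daily_bars ORDER BY date").fetchall()
```

One practical consequence of this ordering is that the untouched `raw_bars` table remains available, so the transformation can be rewritten and re-run later without extracting from the source again.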

From trackit.io

ETL pipeline from AWS DynamoDB to Aurora PostgreSQL TrackIt Cloud

From acuto.io

How to Build an ETL Pipeline 7 Step Guide w/ Batch Processing
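Batch processing, mentioned in the caption above, generally means moving data in fixed-size chunks instead of row by row or all at once. A minimal sketch, assuming an iterable source and a caller-supplied `load` callback (both hypothetical); Python 3.12 also ships `itertools.batched`, but a small helper is defined here so the example runs on older versions.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(rows: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield successive lists of at most `size` items from `rows`."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def run_batches(rows: Iterable[T], load: Callable[[list[T]], None], size: int = 1000) -> int:
    """Pass `rows` to `load` one batch at a time; return the number of batches."""
    count = 0
    for chunk in batched(rows, size):
        load(chunk)  # e.g. one executemany() plus commit per batch
        count += 1
    return count
```

For example, `run_batches(range(7), print, size=3)` hands `[0, 1, 2]`, `[3, 4, 5]`, and `[6]` to the callback. The batch size trades memory use against per-call overhead at the destination.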

From www.mongodb.com

Reducing the Need for ETL with MongoDB Charts MongoDB Blog

From brightdata.com

ETL pipelines explained What is an ETL pipeline

From www.informatica.com

What Is An ETL Pipeline? Informatica

From 3.213.246.212

Build a SQL-based ETL pipeline with Apache Spark on Amazon EKS Dustin

From aws.plainenglish.io

Let's design a serverless ETL pipeline with AWS services by Kirity Design Etl Pipeline It then transforms the data according to business rules, and it loads the data into a destination data. Welcome to the world of etl pipelines using apache airflow. An etl pipeline involves three stages during the entire data transfer process between source and destination—extract,. An etl pipeline is the set of processes used to move data from a source or. Design Etl Pipeline.

From medium.com

Understanding ETL Pipeline. An introduction to phases of ETL… by

From github.com

GitHub S19S98/ADFETLPipeline Design of an ETL pipeline in Azure

From blog.coupler.io

ETL Architecture A Fit for Your Data Pipeline? Coupler.io Blog

From thedigitalskye.com

Build ETL Data Pipelines Even When You Don’t Know How to Code The

From www.thecoraledge.com

Building Serverless ETL Pipelines with AWS Glue The Coral Edge

From morioh.com

How to Design ETL Pipeline with PySpark using Python

From www.fiverr.com

Design and build a robust etl pipeline on aws by Mehroz01 Fiverr

From www.analyticsvidhya.com

Building an ETL Data Pipeline Using Azure Data Factory Analytics Vidhya

From www.confluent.io

Build a Real-Time Streaming ETL Pipeline in 20 Minutes

From www.youtube.com

What is ETL Pipeline? ETL Pipeline Tutorial How to Build ETL

From www.clicdata.com

ETL Pipelines 101 Your Guide to Data Success ClicData

From www.youtube.com

Modern ETL Pipelines with Change Data Capture Thiago Rigo GetYourGuide
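Change data capture (CDC), named in the caption above, means detecting inserts, updates, and deletes in a source so that only those changes flow downstream instead of full reloads. The sketch below uses snapshot comparison, which is only one simple way to do this, and assumes both snapshots fit in memory as dicts keyed by primary key; log-based CDC tools instead read the database's transaction or change log and avoid full comparisons.

```python
from typing import Dict, List, Optional, Tuple, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def diff_snapshots(old: Dict[K, V], new: Dict[K, V]) -> List[Tuple[str, K, Optional[V]]]:
    """Compare two keyed snapshots and return (operation, key, row) change events."""
    changes: List[Tuple[str, K, Optional[V]]] = []
    for key, row in new.items():
        if key not in old:
            changes.append(("insert", key, row))  # key appeared since the last snapshot
        elif old[key] != row:
            changes.append(("update", key, row))  # key exists but its row changed
    for key in old:
        if key not in new:
            changes.append(("delete", key, None))  # key disappeared from the source
    return changes
```

For instance, comparing `{1: "a", 2: "b", 3: "c"}` with `{1: "a", 2: "B", 4: "d"}` yields an update for key 2, an insert for key 4, and a delete for key 3; unchanged key 1 produces no event.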
