Model And Data Parallelism

In modern machine learning, the various approaches to parallelism are used to... The answer lies in two key techniques of distributed ML computing. There are two primary types of distributed parallel training: data parallelism and model parallelism. We further divide the latter into two.

One way to continue meeting these computational demands is through model parallelism, a distributed training method in which the deep learning model is partitioned across multiple devices, within or across instances. It applies where a deep learning model that is too large to fit on a single GPU is distributed across multiple devices. By partitioning the model the...

DDP processes can be placed on the same machine or across machines, but GPU devices cannot be shared across...
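The difference between the two techniques can be sketched in a few lines of plain Python. This is a toy illustration, not a real distributed setup: the "devices" are ordinary Python values, the one-parameter model `y_hat = w * x` and the function names (`grad_mse`, `data_parallel_grad`, `model_parallel_forward`) are hypothetical, and averaging the per-shard gradients only stands in for the synchronization step that a real framework performs between replicas.

```python
# Toy illustration only: "devices" are plain Python values, not real GPUs.

def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_grad(w, xs, ys, num_workers):
    """Data parallelism: every worker holds the full model (w) and one shard of the batch."""
    shard = len(xs) // num_workers  # assumption: the batch divides evenly
    local = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    # Averaging stands in for the gradient synchronization between replicas.
    return sum(local) / num_workers


def model_parallel_forward(x, stages):
    """Model parallelism: each stage (device) holds a different part of the model."""
    activation = x
    for weight in stages:  # the activation is handed from one device to the next
        activation = weight * activation
    return activation


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

full = grad_mse(0.5, xs, ys)                               # single-device gradient
sharded = data_parallel_grad(0.5, xs, ys, num_workers=2)   # two replicas, two shards
out = model_parallel_forward(3.0, stages=[2.0, 0.5])       # two-stage partitioned model
```

With equally sized shards, the averaged per-worker gradient matches the single-device gradient, which is why data parallelism can split a batch without changing the update. In the model-parallel case no device ever holds the whole model; only the intermediate activation moves between stages.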

From docs.aws.amazon.com

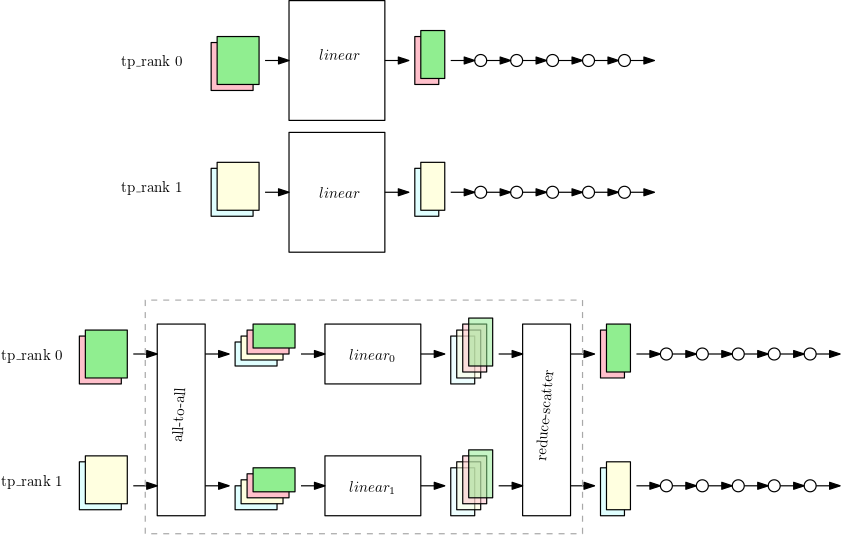

How Tensor Parallelism Works Amazon SageMaker

From www.eurekalert.org

Data Parallelism vs. Model Par [IMAGE] EurekAlert! Science News Releases

From slideplayer.com

Parallel Algorithm Models ppt download

From www.vrogue.co

Data Parallel Distributed Training Of Deep Learning M vrogue.co

From www.youtube.com

Model vs Data Parallelism in Machine Learning YouTube

From www.researchgate.net

Data vs model parallelism. Download Scientific Diagram

From medium.com

An Overview of Pipeline Parallelism and its Research Progress by Xu

From ai-academy.training

Achieving Model Parallelism in Training GPT Models AI Academy

From colossalai.org

Paradigms of Parallelism ColossalAI

From www.vrogue.co

Data Parallel Distributed Training Of Deep Learning M vrogue.co

From www.researchgate.net

Data parallelism vs. model parallelism (a) data parallelism, (b) model

From www.scaler.com

Model Parallelism Scaler Topics

From resources.experfy.com

The Future of Computation for Machine Learning and Data Science

From slideplayer.com

ICS 491 Big Data Analytics Fall 2017 Deep Learning ppt download

From www.telesens.co

Understanding Data Parallelism in Machine Learning Telesens

From docs.aws.amazon.com

Introduction to Model Parallelism Amazon SageMaker

From www.codementor.io

Machine Learning How to Build Scalable Machine Learning Models

From medium.com

Machine Learning Concept 76 Supercharging Deep Learning Exploring

From www.juyang.co

Distributed model training II Parameter Server and AllReduce Ju Yang

From www.mishalaskin.com

Training Deep Networks with Data Parallelism in Jax

From www.researchgate.net

Illustration of data parallelism with Word2Vec Download Scientific

From www.researchgate.net

Workflow model for the dynamic data parallelism pattern. Download

From thewindowsupdate.com

PipeDream A more effective way to train deep neural networks using

From docs.aws.amazon.com

How Tensor Parallelism Works Amazon SageMaker

From thesequence.substack.com

⚙️ Edge183 Data vs Model Parallelism in Distributed Training

From czxttkl.com

Data Parallelism and Model Parallelism czxttkl

From www.myxxgirl.com

Distributed Training Of Ai Models Based On Data Parallelism A Model

From www.vrogue.co

Understanding Data Parallelism In Machine Learning Te vrogue.co

From www.vrogue.co

Understanding Data Parallelism In Machine Learning Te vrogue.co

From www.researchgate.net

Illustration of data parallelism and model parallelism. Download

From siboehm.com

DataParallel Distributed Training of Deep Learning Models

From docs.chainer.org

Overview — Chainer 7.8.0 documentation

From www.slideserve.com

PPT Introduction to Parallel Computing PowerPoint Presentation, free

From www.slideserve.com

PPT Parallel and Distributed Systems in Machine Learning PowerPoint

From czxttkl.com

Data Parallelism and Model Parallelism czxttkl

From www.researchgate.net

Comparison of parallel data/model parallelism system, edge computing