Can Not Generate Buckets With Non-Number In RDD

I have a pair RDD (key, value). I would like to create a histogram of n buckets for each key. Suppose I have a DataFrame (df) (pandas) or RDD (Spark) with the following two columns: timestamp, data: 12345.0 10 12346.0 ...

A Resilient Distributed Dataset (RDD) is the basic abstraction in Spark. It represents an immutable, partitioned collection of elements that can be ... Learn how to create, transform, and operate on RDDs (Resilient Distributed Datasets) in PySpark, a core component of Spark. Learn how to use pyspark.rdd, a Python interface for Spark Resilient Distributed Datasets (RDDs). See the source code, class definitions, ... See examples of RDD creation, partitioning, shuffling, ...

In this blog, I explore three sets of APIs (RDDs, DataFrames, and Datasets) available in Apache Spark 2.2 and beyond; explain why and when you should use each set; outline their performance and optimization benefits; and enumerate scenarios when to use DataFrames and Datasets instead of RDDs.
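The per-key histogram asked about above can be sketched in plain Python. This is a minimal illustration, not Spark's implementation: the helper names `histogram` and `histogram_per_key` are hypothetical, buckets are assumed to be evenly spaced between each key's minimum and maximum, and non-numeric values are rejected up front because bucket edges cannot be computed from them. The page title appears to echo the error that PySpark's `RDD.histogram` reports in that situation.

```python
from collections import defaultdict
from numbers import Real


def histogram(values, n):
    """Count numeric values into n even-width buckets (hypothetical helper).

    Returns (edges, counts); the last bucket includes the maximum.
    """
    for v in values:
        # Reject strings, None, etc.: edges cannot be derived from them.
        if isinstance(v, bool) or not isinstance(v, Real):
            raise TypeError(f"cannot generate buckets from non-number: {v!r}")
    lo, hi = min(values), max(values)
    if lo == hi:
        # Degenerate case: every value is identical, so use one bucket.
        return [lo, hi], [len(values)]
    step = (hi - lo) / n
    edges = [lo + i * step for i in range(n)] + [hi]
    counts = [0] * n
    for v in values:
        # Clamp so that v == hi falls into the last bucket.
        idx = min(int((v - lo) / step), n - 1)
        counts[idx] += 1
    return edges, counts


def histogram_per_key(pairs, n):
    """Group (key, value) pairs by key, then bucket each key's values."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return {key: histogram(vals, n) for key, vals in grouped.items()}


# Illustrative data only; not taken from the original question.
pairs = [("a", 1), ("a", 2), ("a", 3), ("a", 4), ("b", 10), ("b", 20)]
result = histogram_per_key(pairs, 2)
print(result["a"])  # ([1.0, 2.5, 4], [2, 2])
```

On a pair RDD, the same per-key function could presumably be applied with `groupByKey().mapValues(...)`, though that mapping is an assumption and is untested here.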

From www.numerade.com

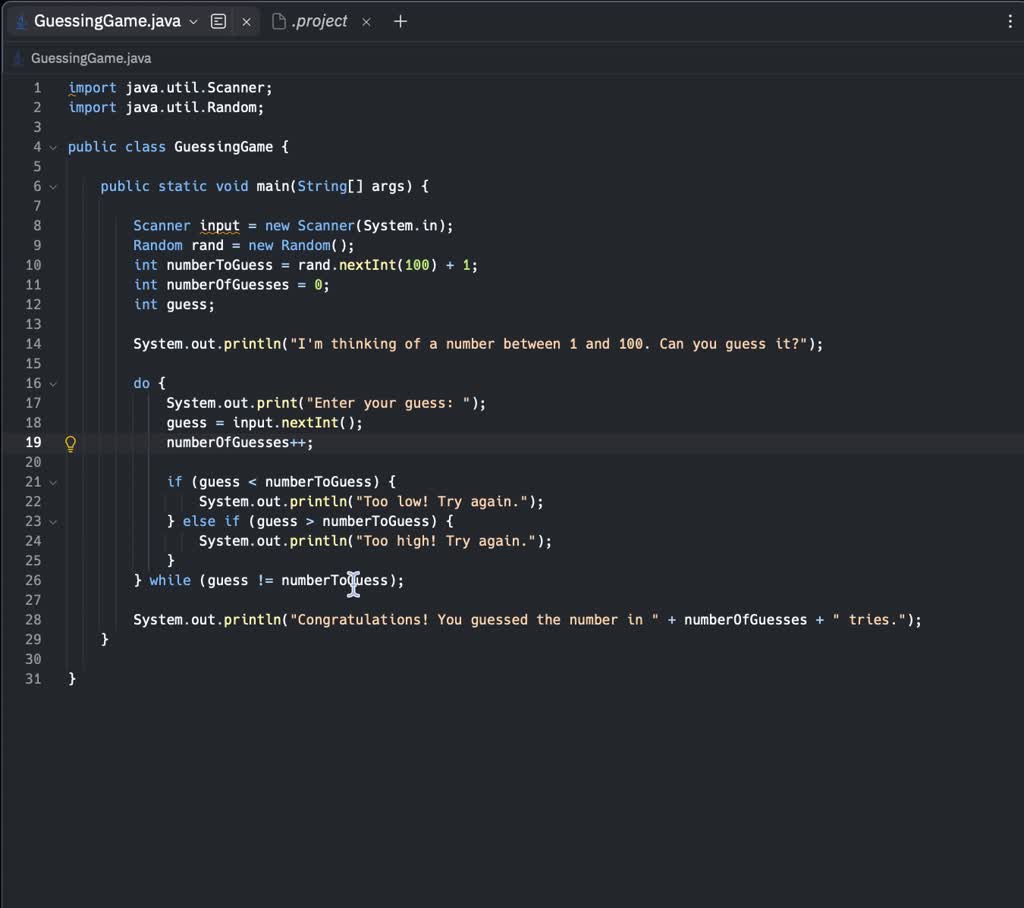

SOLVED create a pseudocode showing the logic for a program that

From www.researchgate.net

Expected number of non10anonymous buckets from Approximations A1 and

From www.clcoding.com

Day 61 Python Program to Print the Fibonacci sequence Computer

From devsday.ru

Generate a random number in Java DevsDay.ru

From circuitillico42d5.z22.web.core.windows.net

Choose A Random Number Between 1 And 3

From www.youtube.com

Java Programming Tutorial 10 Random Number Generator Number

From github.com

Questions about process debugging · Issue 44 · flyu/CUTRUNTools2.0

From btechgeeks.com

C Program to Generate Random Numbers BTech Geeks

From www.cloudduggu.com

Apache Spark RDD Introduction Tutorial CloudDuggu

From www.geeksforgeeks.org

Bucket Sort

From www.stechies.com

How to Generate a Random Whole Number using JavaScript?

From medium.com

An Introduction to Bucket Sort. This blog post is a continuation of a

From www.atatus.com

A Guide to Math.random() in Java

From unicaregp.pakasak.com

Number Guessing Game in C++ using rand() Function

From www.chegg.com

Solved A process that produces computer chips has a mean of

From maibushyx.blogspot.com

34 Javascript Generate Random Number Between 1 And 100 Javascript

From towardsdatascience.com

A Cheat Sheet on Generating Random Numbers in NumPy by Yong Cui

From www.coursehero.com

[Solved] . 1. Random numbers File random_numbers . py (Quick note

From www.youtube.com

Generate a list of random numbers without repeats in Python YouTube

From www.chegg.com

Solved Part the explanation of how chromosome

From priaxon.com

Excel Formula To Get Random Number Templates Printable Free

From www.clintools.com

Random Number Generator

From asenoveo6schematic.z14.web.core.windows.net

Pick A Random Number Between 1 And 52

From www.chegg.com

Solved You have determined that waiting times at a toll

From www.chegg.com

Solved In this assignment you are going to program a number

From www.coursehero.com

[Solved] random.randint(1,50). Write a program that generates a random

From www.chegg.com

Solved Create a program that generates random numbers, store

From www.youtube.com

5.Generating random numbers between 1 to 100 storing in an array using

From data-flair.training

PySpark RDD With Operations and Commands DataFlair

From codescracker.com

Java Program to Generate Random Numbers

From www.educba.com

Bucket Sort Algorithm Complete Guide on Bucket Sort Algorithm

From www.chegg.com

Solved Rearrange the following lines to produce a loop that

From guides.trasix.one

Catalog buckets Trasix Knowledge Base

From www.lisbonlx.com

Random Number List Generator Examples and Forms