Average Precision Score Vs Precision Score. A precision score is computed at a single decision threshold: it is the fraction of examples predicted positive that are actually positive. Average precision (AP) instead summarizes precision across all thresholds. It is calculated as the weighted mean of the precisions at each threshold, where the weight is the increase in recall from the previous threshold. In scikit-learn, average_precision_score(y_true, y_score, *, average='macro', pos_label=1, sample_weight=None) computes AP from y_true and y_score, and the documentation describes the result as the area under the precision-recall curve. A high AP indicates that the model can identify all the positive examples without accidentally marking too many negative examples as positive. mAP (mean average precision) is the mean of the AP values, typically taken over classes or queries. Precision, recall, and F1 score, each in its own green box above, are all broken down by class, and then a macro average and a weighted average are given for each.
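The difference between the two scores can be sketched in plain Python. This is a minimal illustration of the weighted-mean definition above, not scikit-learn's implementation; the helper names precision_at_threshold, average_precision, and mean_average_precision are hypothetical, the labels are assumed to be 0/1, and the toy data is made up for the example.

```python
from typing import List, Sequence, Tuple


def precision_at_threshold(y_true: Sequence[int], y_score: Sequence[float],
                           threshold: float) -> float:
    """Precision when every example with score >= threshold is predicted positive."""
    predicted = [t for t, s in zip(y_true, y_score) if s >= threshold]
    if not predicted:
        return 0.0  # assumption: report 0.0 when nothing is predicted positive
    return sum(predicted) / len(predicted)


def average_precision(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AP = sum over thresholds of (recall_n - recall_{n-1}) * precision_n."""
    total_pos = sum(y_true)
    if total_pos == 0:
        raise ValueError("average precision needs at least one positive example")
    # Walk the examples from highest to lowest score; each distinct score
    # acts as one decision threshold.
    ranked = sorted(zip(y_score, y_true), key=lambda pair: pair[0], reverse=True)
    ap, true_pos, seen, prev_recall = 0.0, 0, 0, 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every example tied at this score before measuring.
        while i < len(ranked) and ranked[i][0] == threshold:
            true_pos += ranked[i][1]
            seen += 1
            i += 1
        recall = true_pos / total_pos
        precision = true_pos / seen
        ap += (recall - prev_recall) * precision  # weight = increase in recall
        prev_recall = recall
    return ap


def mean_average_precision(
        per_class: List[Tuple[Sequence[int], Sequence[float]]]) -> float:
    """mAP: the unweighted mean of the AP computed separately for each class."""
    return sum(average_precision(t, s) for t, s in per_class) / len(per_class)


# Toy data (made up for illustration).
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

print(precision_at_threshold(y_true, y_score, 0.5))  # one threshold only
print(average_precision(y_true, y_score))            # all thresholds
print(mean_average_precision([(y_true, y_score),
                              ([1, 0, 1, 0], [0.9, 0.2, 0.7, 0.1])]))
```

On this toy data the precision at threshold 0.5 is 1.0, while the average precision works out to 5/6 (about 0.83), because lowering the threshold far enough to recover the second positive also admits one negative.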

From analystprep.com

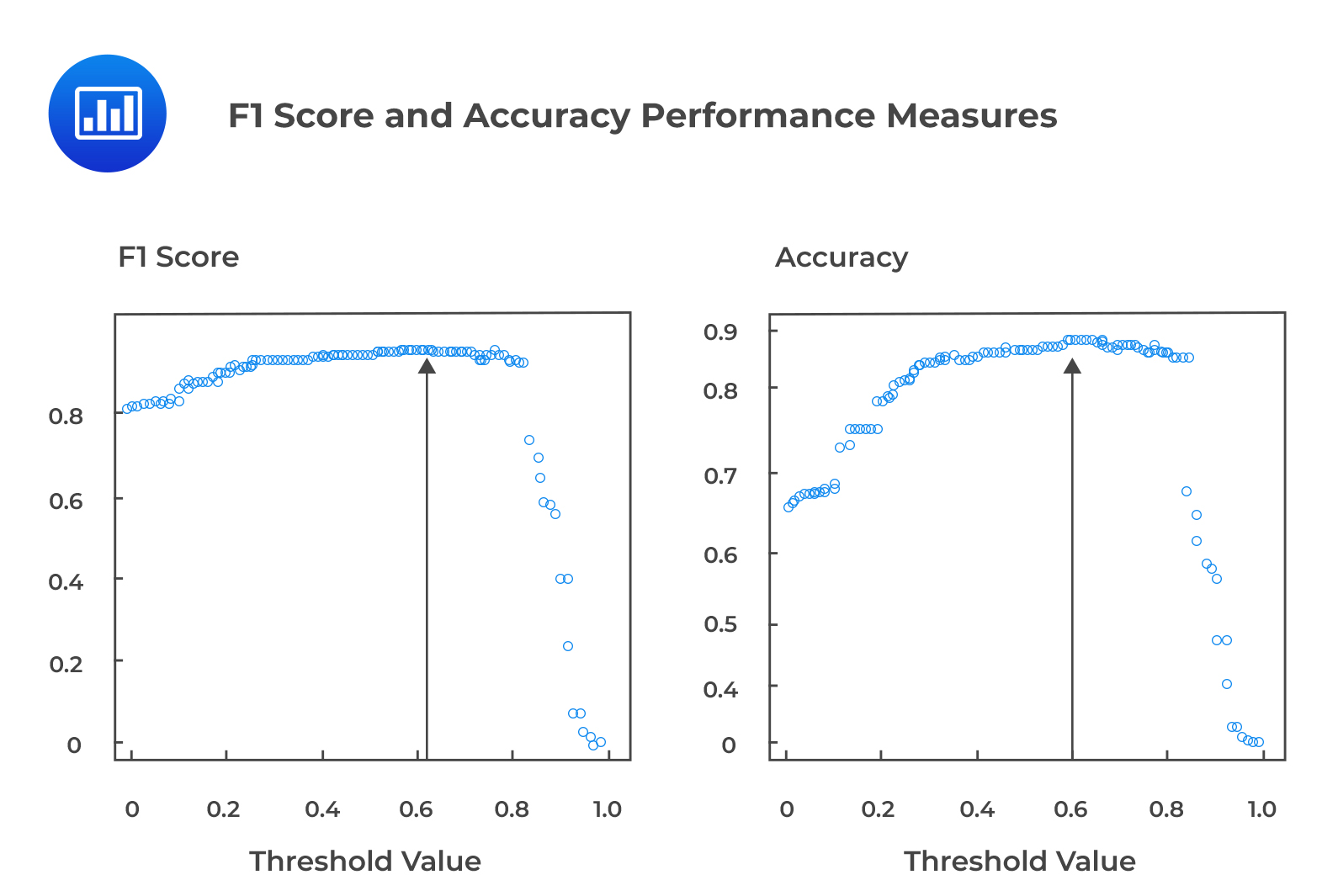

F1 Score and Accuracy Performance Measures CFA, FRM, and Actuarial

From www.baeldung.com

Precision vs. Average Precision Baeldung on Computer Science

From www.evidentlyai.com

Accuracy, precision, and recall in multiclass classification

From www.researchgate.net

Average Precision score for the training and testing data at various

From www.youtube.com

Precision, Recall, and F1 Score Explained for Binary Classification

From www.researchgate.net

Mean ranks of average precision scores obtained using Friedman test

From www.researchgate.net

Comparison of the average precision score (β), recall score (γ), and

From www.researchgate.net

Detailed 5-Fold average precision score for Statlog heart disease

From www.researchgate.net

Detailed 5-Fold average precision score for Statlog heart disease

From www.kdnuggets.com

Evaluating Object Detection Models Using Mean Average Precision KDnuggets

From www.researchgate.net

Contribution of Correct Answers to Average Precision Score

From medium.com

Explaining Accuracy, Precision, Recall, and F1 Score by Vikas Singh

From www.researchgate.net

Detailed 5-Fold average precision score for Abalone dataset with

From stackoverflow.com

average precision sk.learn average_precision_score Stack Overflow

From labelyourdata.com

Mean Average Precision (mAP) An Essential Accuracy Metric Label Your

From kili-technology.com

Mean Average Precision (mAP) A Complete Guide

From www.researchgate.net

Accuracy, precision, recall, F1-Score values for the classification

From www.researchgate.net

Precision-Recall curve with the average precision score, micro-averaged

From www.v7labs.com

F1 Score in Machine Learning Intro & Calculation

From towardsdatascience.com

Micro, Macro & Weighted Averages of F1 Score, Clearly Explained by

From vitalflux.com

Mean Average Precision (MAP) for Information Retrieval Systems

From verloop.io

F1 Score, Explained Sharp Sight verloop.io

From www.youtube.com

Evaluation 12 mean average precision YouTube

From www.researchgate.net

Average Precision Score (APS) over iterations for four different

From www.researchgate.net

Gaze concentration precision score versus accuracy score.

From www.v7labs.com

Mean Average Precision (mAP) Explained Everything You Need to Know

From www.cnblogs.com

Mean Average Precision (mAP), Precision, Recall, Accuracy, F1_score, PR curve, ROC

From huggingface.co

yonting/average_precision_score at main

From seunghan96.github.io

(CV summary) 13. mAP (Mean Average Precision) AAA (All About AI)

From juejin.cn

sklearn.metrics.average_precision_score: computing average precision (Average Precision) and precision-recall

From www.researchgate.net

Average precision scores for each classifier and segmentation approach