Torch Sum Exp

`torch.sum(input, dim, keepdim=False, *, dtype=None) → Tensor` returns the sum of each row of the input tensor in the given dimension `dim`. To sum all elements of a tensor, the simplest and best solution is to call `torch.sum()` without a `dim` argument. For example, given the tensor (or NumPy array converted to a tensor) `aaa = torch.tensor([123, 456, 789]).float()`, the PyTorch call is `torch.sum(aaa)`.

`torch.logsumexp(input, dim, keepdim=False, *, out=None)` returns the log of summed exponentials of each row of the input tensor in the given dimension `dim`.

In the older Lua Torch (Torch7) API, `torch.addmv(r, x, y, z)` puts the result in `r`. The behavior differs when it is called as a method: `x:addmv(y, z)` computes `x = x + y * z`.

A separate forum question concerns the implementation of a variational autoencoder (VAE) loss.
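
Below is a minimal sketch of these calls, assuming a recent PyTorch release. The `addmv` part assumes that the Lua method maps onto PyTorch's in-place `Tensor.addmv_`, which adds a matrix-vector product into a tensor. The tensors `x`, `y` and `z` and their values are illustrative only.

```python
import torch

# The tensor from the question above.
aaa = torch.tensor([123, 456, 789]).float()

# With no dim argument, torch.sum reduces over every element.
total = torch.sum(aaa)        # tensor(1368.)
same_total = aaa.sum()        # method form, same result

# Assumed PyTorch analogue of Lua Torch's x:addmv(y, z), i.e. x = x + y * z,
# where y is a matrix and z a vector (matrix-vector product).
x = torch.zeros(2)
y = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
z = torch.tensor([1.0, 1.0])
x.addmv_(y, z)                # in place: x now holds [3., 7.]

# Out-of-place form: returns a new tensor (x + y @ z) and leaves x unchanged.
r = torch.addmv(x, y, z)      # [6., 14.]
```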

From aijishu.com

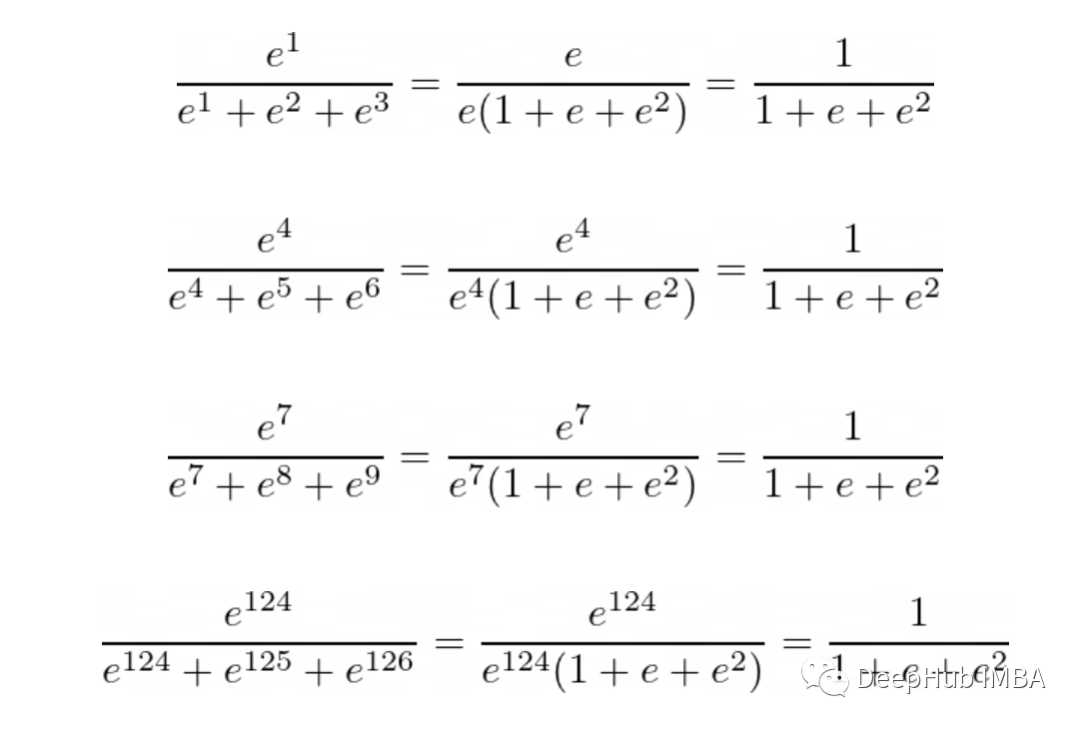

Introduction to Softmax, 极术社区 (Aijishu community): connecting developers with the intelligent computing ecosystem
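
Softmax can be written in terms of log-sum-exp, which is a common way to keep it numerically stable. The following minimal sketch compares that form with `torch.softmax`. The logits are illustrative.

```python
import torch

logits = torch.tensor([2.0, 1.0, 0.1])

# softmax(x)_i = exp(x_i) / sum_j exp(x_j) = exp(x_i - logsumexp(x)).
# Subtracting logsumexp first keeps the exponentials from overflowing.
probs = torch.exp(logits - torch.logsumexp(logits, dim=0))

# PyTorch's built-in softmax for comparison.
builtin = torch.softmax(logits, dim=0)
```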

From blog.csdn.net

torch.sum(): dim=0 and dim=1 explained (CSDN blog)
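
The `dim` argument of `torch.sum` names the dimension that gets summed away. Here is a small sketch with an illustrative 2x3 tensor:

```python
import torch

t = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])    # shape (2, 3)

# dim=0 collapses the rows: one sum per column, shape (3,).
col_sums = torch.sum(t, dim=0)         # [5., 7., 9.]

# dim=1 collapses the columns: one sum per row, shape (2,).
row_sums = torch.sum(t, dim=1)         # [6., 15.]

# A tuple of dims reduces over all of them.
all_sums = torch.sum(t, dim=(0, 1))    # 21.
```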

From blog.csdn.net

[PyTorch] Understanding torch.sum(): what does out = torch.sum(out, 1) mean? (CSDN blog)

From github.com

torch.sum() for sparse tensor · Issue 12241 · pytorch/pytorch · GitHub
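
The following sketch sums a sparse COO tensor and assumes a recent PyTorch release. It uses `torch.sparse.sum`, the reduction documented for sparse tensors. This avoids depending on how plain `torch.sum` treats sparse input in a particular version. The indices and values are illustrative.

```python
import torch

# A 2x3 sparse COO tensor with three stored values:
# (0, 2) -> 3.0, (1, 0) -> 4.0, (1, 2) -> 5.0
indices = torch.tensor([[0, 1, 1],
                        [2, 0, 2]])
values = torch.tensor([3.0, 4.0, 5.0])
s = torch.sparse_coo_tensor(indices, values, (2, 3))

# Reducing over every dimension returns a dense 0-dim tensor.
total = torch.sparse.sum(s)                          # 12.

# Reducing over one sparse dimension returns a sparse tensor,
# so convert it to dense to inspect the row sums.
row_sums = torch.sparse.sum(s, dim=1).to_dense()     # [3., 9.]
```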

From blog.csdn.net

Basic PyTorch syntax: torch.sum(dim=int) and, following from it, a detailed look at tensor dimensions with torch.size (CSDN blog)

From blog.csdn.net

Torch learning recommendation: 刘二大人 explains how to use Torch with MNIST (CSDN blog)

From www.youtube.com

Avoid Numerical EXPLOSIONS with the Log Sum Exp Trick (YouTube)
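
Here is the log-sum-exp trick in a few lines, assuming PyTorch with float32 tensors. The input values are chosen so that the naive formula overflows.

```python
import torch

x = torch.tensor([1000.0, 1000.0])

# Naive formula: exp(1000) overflows float32 to inf, so the result is inf.
naive = torch.log(torch.sum(torch.exp(x)))

# Log-sum-exp trick: subtract the maximum before exponentiating.
# log(sum(exp(x))) = m + log(sum(exp(x - m))), with m = max(x).
m = torch.max(x)
stable = m + torch.log(torch.sum(torch.exp(x - m)))   # 1000 + log(2)

# torch.logsumexp performs a numerically stabilized computation itself.
builtin = torch.logsumexp(x, dim=0)
```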

From github.com

torch.sum closes pipe · Issue 440 · pytorch/examples · GitHub

From blog.csdn.net

Study notes on 《PyTorch模型训练实用教程》 (A Practical Tutorial on PyTorch Model Training) (CSDN blog)

From blog.csdn.net

A summary of classification losses in PyTorch (CSDN blog)

From velog.io

Notes on torch.sum()

From github.com

torch.sum() works on sparse tensors · Issue 86232 · pytorch/pytorch

From blog.csdn.net

How to use 21 common PyTorch activation functions (CSDN blog)

From studyschoolbartender.z21.web.core.windows.net

Solving Exponential And Log Equations

From blog.csdn.net

torch.sum, prod, max, min, argmax, argmin, std, var, median, mode, histc, bincount

From zhuanlan.zhihu.com

torch functions (Zhihu)

From blog.csdn.net

An explanation of torch.numel(), torch.shape, torch.size() and torch.reshape() in PyTorch
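
The following quick sketch shows how `numel`, `shape`, `size()` and `reshape` relate on one small tensor. The values are illustrative.

```python
import torch

x = torch.arange(6)          # [0, 1, 2, 3, 4, 5], shape (6,)
m = x.reshape(2, 3)          # the same 6 elements as 2 rows x 3 columns

n = torch.numel(m)           # 6: total number of elements
shape = m.shape              # torch.Size([2, 3]) (attribute)
size = m.size()              # torch.Size([2, 3]) (method, same value)
cols = m.size(1)             # 3: size of a single dimension

# -1 lets reshape infer that dimension from the element count.
flat = m.reshape(-1)         # back to shape (6,)
```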

From t.zoukankan.com

Summing PyTorch tensors (tensor.sum()), 走看看

From zhuanlan.zhihu.com

Explaining the torch.gather function in PyTorch (Zhihu)
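
`torch.gather` selects values along one dimension using an index tensor of the same rank. Here is a small sketch with illustrative values:

```python
import torch

t = torch.tensor([[1, 2],
                  [3, 4]])
index = torch.tensor([[0, 0],
                      [1, 0]])

# dim=1: out[i][j] = t[i][index[i][j]]
out_dim1 = torch.gather(t, 1, index)   # [[1, 1], [4, 3]]

# dim=0: out[i][j] = t[index[i][j]][j]
out_dim0 = torch.gather(t, 0, index)   # [[1, 2], [3, 2]]
```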

From blog.csdn.net

[PyTorch function notes] torch.sum(), torch.unsqueeze() (CSDN blog)
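
Reducing with `sum` removes the reduced dimension unless `keepdim=True` is passed, and `unsqueeze` puts a size-1 dimension back. The sketch below shows that both routes agree. The row normalization is an illustrative use.

```python
import torch

t = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])        # shape (2, 3)

# Summing over dim=1 drops that dimension: shape (2,).
row_sums = t.sum(dim=1)

# unsqueeze(1) reinserts a size-1 dimension: shape (2, 1).
restored = row_sums.unsqueeze(1)

# keepdim=True gives the same (2, 1) result in one step, and it
# broadcasts against t, e.g. to normalize each row to sum to 1.
kept = t.sum(dim=1, keepdim=True)
normalized = t / kept
```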

From blog.csdn.net

torch.sum(): dim=0, dim=1, dim=1 explained (CSDN blog)

From stackoverflow.com

visual studio code How can vscode view detailed usage information for

From blog.csdn.net

backward in PyTorch: y.backward() (CSDN blog)
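
Below is a minimal autograd sketch. `backward()` called without arguments requires a scalar output, which is one reason outputs are commonly reduced with `sum()` before it. The input values are illustrative.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Reduce to a scalar so backward() can be called without a gradient argument.
y = (x ** 2).sum()
y.backward()

# d/dx_i of sum(x_i^2) is 2 * x_i.
grad = x.grad                 # [2., 4., 6.]
```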

From math.stackexchange.com

logarithms Prove that logSumExp is the smooth maximum regularized by
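
The following short derivation shows why log-sum-exp behaves like a smooth maximum. It states the standard bound for a vector $x$ with $n$ entries. Every term satisfies $e^{x_i} \le e^{m}$, and at least one term equals $e^{m}$.

```latex
\[
\operatorname{LSE}(x) = \log \sum_{i=1}^{n} e^{x_i}, \qquad m = \max_i x_i
\]
\[
e^{m} \le \sum_{i=1}^{n} e^{x_i} \le n\, e^{m}
\quad\Longrightarrow\quad
m \le \operatorname{LSE}(x) \le m + \log n
\]
```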

From zhuanlan.zhihu.com

Mastering torch.nn.CrossEntropyLoss() (Zhihu)
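
Cross-entropy on raw logits reduces to a log-sum-exp minus the target logit. This sketch checks that identity against `torch.nn.functional.cross_entropy` and `torch.nn.CrossEntropyLoss`, both with their default `reduction='mean'`. The logits and targets are made up.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])    # (batch=2, classes=3)
target = torch.tensor([0, 2])               # class index per sample

# Per-sample loss: -log softmax(logits)[target]
#                = logsumexp(logits) - logits[target]
picked = logits[torch.arange(logits.size(0)), target]
per_sample = torch.logsumexp(logits, dim=1) - picked
manual = per_sample.mean()                  # matches reduction='mean'

builtin = F.cross_entropy(logits, target)
module = torch.nn.CrossEntropyLoss()(logits, target)
```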

From blog.csdn.net

Machine learning: computing LogSumExp, and how torch.logsumexp computes it (CSDN blog)

From blog.csdn.net

《PyTorch 深度学习实践》 (PyTorch Deep Learning Practice), Lecture 9: multi-class classification (Kaggle assignment: Otto classification) (CSDN blog)

From github.com

Mine has 5 points, so does this line of code need to change when computing the loss? lkpt += kpt_loss_factor * ((1 torch.exp

From www.researchgate.net

Numerical simulation of multiplication based on logsumexp

From blog.csdn.net

Deep Learning 09: multi-class classification (hands-on handwritten digit recognition, torch version) (CSDN blog)

From blog.csdn.net

torch.einsum(): kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)
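
The einsum string `lhm,lhd->hmd` sums over the shared `l` axis. The sketch below checks it against a batched matrix product. The axis sizes and random inputs are hypothetical.

```python
import torch

torch.manual_seed(0)
n_l, n_h, n_m, n_d = 4, 2, 3, 5      # hypothetical axis sizes
ks = torch.randn(n_l, n_h, n_m)
vs = torch.randn(n_l, n_h, n_d)

# "l" appears in both inputs but not in the output, so it is summed over:
# kvs[h, m, d] = sum_l ks[l, h, m] * vs[l, h, d]
kvs = torch.einsum("lhm,lhd->hmd", ks, vs)

# Same result as a batched matrix product over h:
# (H, M, L) @ (H, L, D) -> (H, M, D)
kvs_bmm = ks.permute(1, 2, 0) @ vs.permute(1, 0, 2)
```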

From zhuanlan.zhihu.com

A PyTorch simulation of matrix multiplication with asymmetric and symmetric quantization (Zhihu)