How To Check Number Of Partitions In Spark UI

Methods to get the current number of partitions of a DataFrame: in summary, you can easily find the number of partitions of a DataFrame in Spark by accessing the underlying RDD and calling df.rdd.getNumPartitions(). In Scala, df.rdd.partitions.size and df.rdd.partitions.length are alternatives.

In the Spark UI, the Storage tab provides basic information such as storage level, number of partitions and memory overhead. After running the example, two RDDs are listed in the Storage tab.

How does one calculate the 'optimal' number of partitions based on the size of the DataFrame? Read the input data with a number of partitions that matches your core count. To utilize the available cores properly, especially in the last iteration, the number of shuffle partitions ... A size-based estimate, taking 100 as the data size in GB and 128 as the target partition size in MB: ideal number of partitions = (100 * 1024) / 128 = 800.
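The size-based estimate can be sketched as a small helper. This is only an illustration of the arithmetic: the function name estimate_partitions and the 128 MB default are assumptions made for this example, not part of any Spark API.

```python
import math


def estimate_partitions(data_size_gb: float, target_partition_mb: int = 128) -> int:
    """Rule-of-thumb partition count (hypothetical helper, not a Spark API).

    Divides the total data size in MB by the target partition size in MB
    and rounds up, so that no partition exceeds the target size.
    """
    total_mb = data_size_gb * 1024  # 1 GB = 1024 MB
    # Always return at least one partition, even for tiny inputs.
    return max(1, math.ceil(total_mb / target_partition_mb))


if __name__ == "__main__":
    print(estimate_partitions(100))       # 100 GB at 128 MB per partition
    print(estimate_partitions(100, 256))  # same data, larger target partitions
```

The result is a starting point only; the right target partition size depends on the workload and the cluster.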
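A minimal PySpark sketch of reading the current partition count through the underlying RDD. The helper count_partitions and the demo function are hypothetical names for this example; the demo assumes PySpark is installed and uses a local master with four cores purely for illustration. In PySpark, getNumPartitions() is the call to use, since the partitions.size form belongs to the Scala API.

```python
def count_partitions(df) -> int:
    """Return the number of partitions of a DataFrame via its underlying RDD."""
    return df.rdd.getNumPartitions()


def demo() -> None:
    """Illustrative run; requires PySpark, so the import is kept local."""
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[4]")  # assumption: local mode with 4 cores
        .appName("partition-count-demo")
        .getOrCreate()
    )
    try:
        df = spark.range(0, 1000)
        print("initial partitions:", count_partitions(df))

        # Explicitly repartition, then check the count again.
        repartitioned = df.repartition(8)
        print("after repartition:", count_partitions(repartitioned))
    finally:
        spark.stop()
```

Call demo() on a machine with PySpark available. While the application is running, the same partition counts appear as task counts per stage in the Spark UI, and persisted data shows its partition count in the Storage tab.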


From codingsight.com

Database Table Partitioning & Partitions in MS SQL Server How To Check Number Of Partitions In Spark Ui Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? I've heard from other engineers. Read the input data with the number of partitions, that matches your core count; To utiltize the cores available properly especially in the last iteration, the number of shuffle. How To Check Number Of Partitions In Spark Ui.

From classroomsecrets.co.uk

Partition Numbers to 100 Classroom Secrets Classroom Secrets How To Check Number Of Partitions In Spark Ui I've heard from other engineers. Methods to get the current number of partitions of a dataframe. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Read the input data with the number of partitions, that matches your core count; After running the above. How To Check Number Of Partitions In Spark Ui.

From www.vrogue.co

Partitioning 2 Digit Numbers Worksheets By Classroom vrogue.co How To Check Number Of Partitions In Spark Ui Basic information like storage level, number of partitions and memory overhead are provided. Methods to get the current number of partitions of a dataframe. I've heard from other engineers. Read the input data with the number of partitions, that matches your core count; After running the above example, we can find two rdds listed in the storage tab. In summary,. How To Check Number Of Partitions In Spark Ui.

From sparkbyexamples.com

Spark Query Table using JDBC Spark By {Examples} How To Check Number Of Partitions In Spark Ui Methods to get the current number of partitions of a dataframe. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Basic information like storage level, number of partitions and memory overhead are provided. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. In summary, you can easily find the number of partitions. How To Check Number Of Partitions In Spark Ui.

From medium.com

Spark Partitioning Partition Understanding Medium How To Check Number Of Partitions In Spark Ui Dataframe.rdd.partitions.size is another alternative apart from df.rdd.getnumpartitions() or df.rdd.length. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? After running the above example, we can find two rdds listed in the storage tab. Methods to get the current number of partitions of a dataframe. In summary, you can easily find the number of. How To Check Number Of Partitions In Spark Ui.

From www.projectpro.io

How Data Partitioning in Spark helps achieve more parallelism? How To Check Number Of Partitions In Spark Ui How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. After running the above example, we can find two rdds listed in the storage tab. I've heard from. How To Check Number Of Partitions In Spark Ui.

From pedropark99.github.io

Introduction to pyspark 3 Introducing Spark DataFrames How To Check Number Of Partitions In Spark Ui Dataframe.rdd.partitions.size is another alternative apart from df.rdd.getnumpartitions() or df.rdd.length. Basic information like storage level, number of partitions and memory overhead are provided. I've heard from other engineers. Methods to get the current number of partitions of a dataframe. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128. How To Check Number Of Partitions In Spark Ui.

From exoocknxi.blob.core.windows.net

Set Partitions In Spark at Erica Colby blog How To Check Number Of Partitions In Spark Ui After running the above example, we can find two rdds listed in the storage tab. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. I've heard from. How To Check Number Of Partitions In Spark Ui.

From shinburg.ru

Gparted разметка диска под ubuntu How To Check Number Of Partitions In Spark Ui In summary, you can easily find the number of partitions of a dataframe in spark by accessing the underlying rdd and calling the. Methods to get the current number of partitions of a dataframe. I've heard from other engineers. Basic information like storage level, number of partitions and memory overhead are provided. Read the input data with the number of. How To Check Number Of Partitions In Spark Ui.

From www.sqlshack.com

Table Partitioning in Azure SQL Database How To Check Number Of Partitions In Spark Ui Dataframe.rdd.partitions.size is another alternative apart from df.rdd.getnumpartitions() or df.rdd.length. In summary, you can easily find the number of partitions of a dataframe in spark by accessing the underlying rdd and calling the. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Methods to get the current number of partitions of a dataframe. I've. How To Check Number Of Partitions In Spark Ui.

From hxetrqgfl.blob.core.windows.net

How To Partition Hard Drive For Windows 10 Install at Heather Green blog How To Check Number Of Partitions In Spark Ui After running the above example, we can find two rdds listed in the storage tab. Basic information like storage level, number of partitions and memory overhead are provided. In summary, you can easily find the number of partitions of a dataframe in spark by accessing the underlying rdd and calling the. To utiltize the cores available properly especially in the. How To Check Number Of Partitions In Spark Ui.

From exorrwycn.blob.core.windows.net

Partitions Number Theory at Lilian Lockman blog How To Check Number Of Partitions In Spark Ui Basic information like storage level, number of partitions and memory overhead are provided. Dataframe.rdd.partitions.size is another alternative apart from df.rdd.getnumpartitions() or df.rdd.length. After running the above example, we can find two rdds listed in the storage tab. I've heard from other engineers. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. In summary,. How To Check Number Of Partitions In Spark Ui.

From www.quora.com

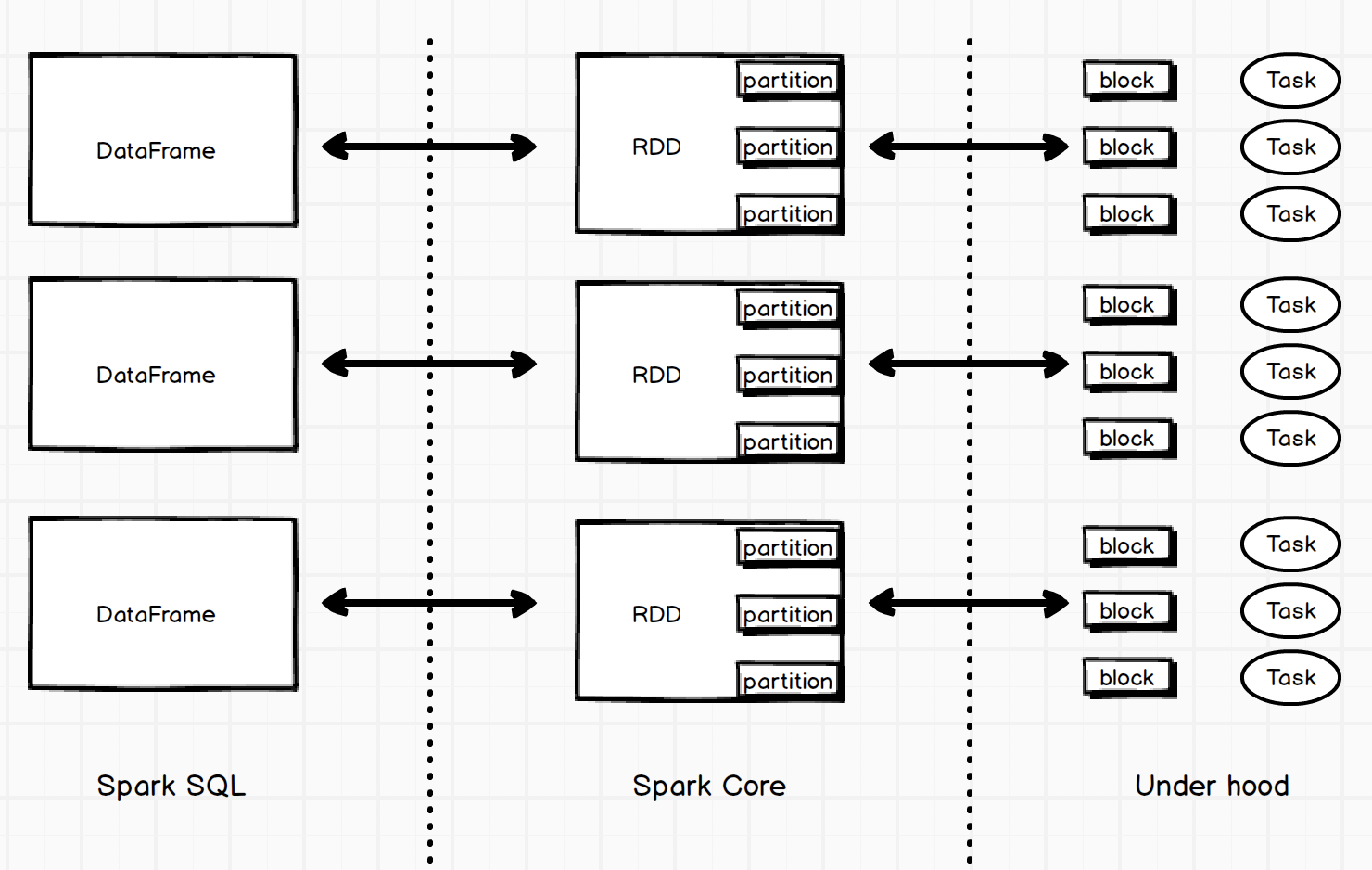

What is a DataFrame in Spark SQL? Quora How To Check Number Of Partitions In Spark Ui Read the input data with the number of partitions, that matches your core count; I've heard from other engineers. Dataframe.rdd.partitions.size is another alternative apart from df.rdd.getnumpartitions() or df.rdd.length. After running the above example, we can find two rdds listed in the storage tab. Basic information like storage level, number of partitions and memory overhead are provided. In summary, you can. How To Check Number Of Partitions In Spark Ui.

