What Is Encoder Query

Encoder-type models encode input sequences of text into a numerical representation. In a sequence-to-sequence model, the encoder's job is to take in an input sequence and output a context vector (also called a thought vector): for example, the encoder RNN's final hidden state or, if the encoder is a bidirectional RNN, a combination of its final forward and backward states.

The attention layer takes its input in the form of three parameters, known as the query, key, and value. The first step is to obtain the query (Q), keys (K), and values (V). This is done by passing the same copy of the positional embeddings through three different linear layers. This is essentially the approach proposed by Vaswani et al. (2017), where the two projection vectors are called query (for the decoder) and key (for the encoder).

Some systems apply the same idea to a prompt: the prompt is first passed through an encoder to generate a query vector encoding its semantic meaning, and this query vector is then used to calculate attention over the internal keys.

The word "query" also appears in the search sense: not all queries are easy to write, especially if you need to work with date fields or OR operators.
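As a rough illustration of the query, key, and value step, the sketch below projects the same input embeddings through three separate weight matrices and then applies scaled dot-product attention. It is a minimal sketch under stated assumptions, not any particular library's implementation: the helper names (`matmul`, `softmax`, `self_attention`, `random_matrix`), the sizes, and the random weights are all hypothetical, and the linear layers are simplified to plain matrix multiplications without bias terms.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over a single list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Project the same embeddings x into Q, K, V, then attend."""
    q = matmul(x, w_q)  # queries
    k = matmul(x, w_k)  # keys
    v = matmul(x, w_v)  # values
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)  # one row of scores per query position
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights or embeddings."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, d_k = 4, 8, 8  # illustrative sizes only
x = random_matrix(seq_len, d_model, rng)  # embeddings with positional information
w_q = random_matrix(d_model, d_k, rng)
w_k = random_matrix(d_model, d_k, rng)
w_v = random_matrix(d_model, d_k, rng)

output, weights = self_attention(x, w_q, w_k, w_v)
print(len(output), len(output[0]))  # 4 8
```

Each row of `weights` sums to 1, so every output position is a weighted average of the value vectors. In encoder-decoder (cross) attention, the queries would instead be projected from the decoder states while the keys and values come from the encoder output.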

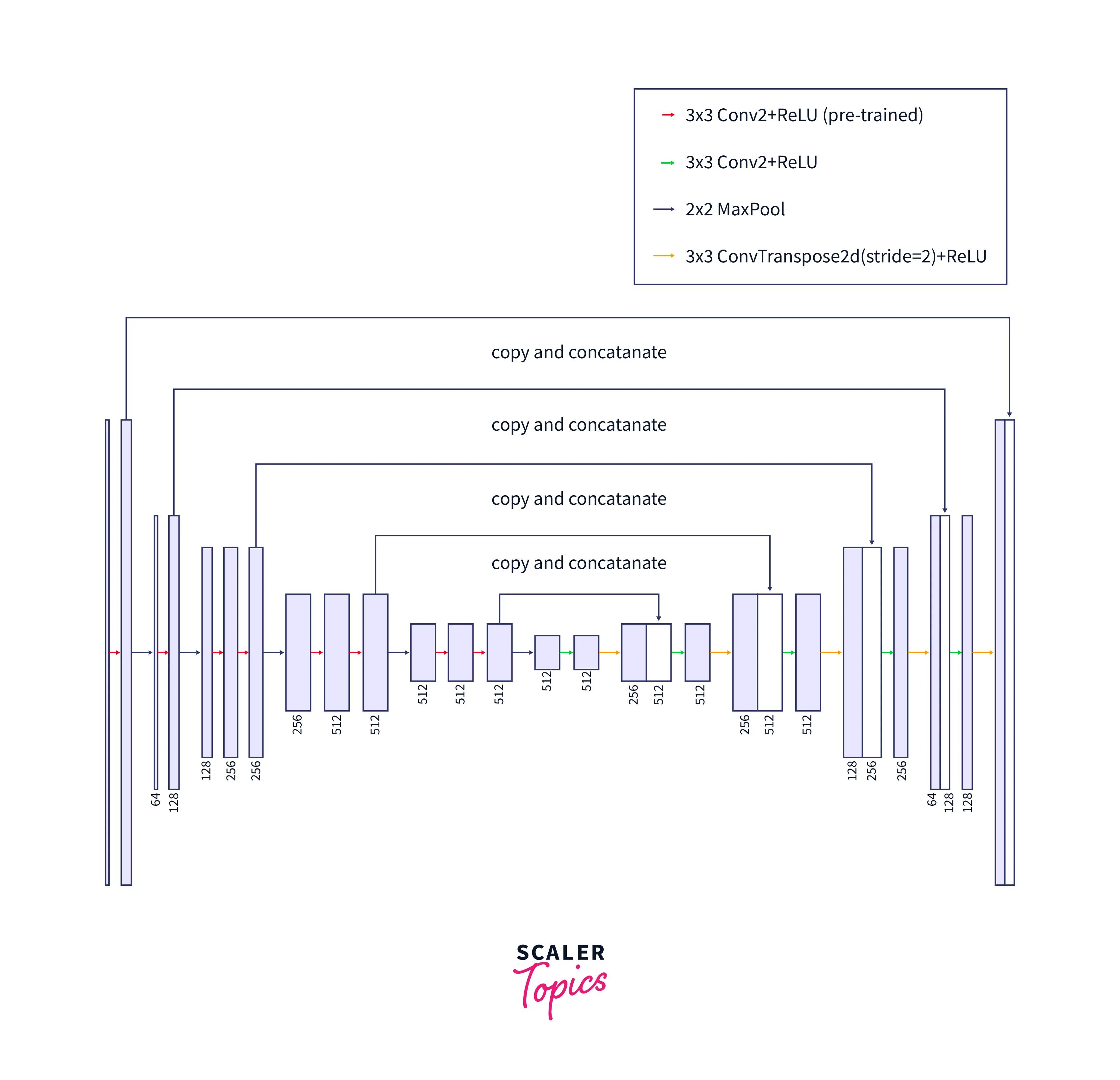

From www.scaler.com

What is Encoder in Transformers Scaler Topics

From www.researchgate.net

KGenhanced encoderdecoder query rewriting model Download Scientific Diagram

From www.youtube.com

What is Encoder? YouTube

From www.researchgate.net

The structure of source code encoder and query encoder. Download Scientific Diagram

From machinelearningmastery.com

Implementing the Transformer Encoder from Scratch in TensorFlow and Keras

From www.researchgate.net

(PDF) Study of EncoderDecoder Architectures for CodeMix Search Query Translation

From www.researchgate.net

Pretraining of the query encoder. Download Scientific Diagram

From joigpgovq.blob.core.windows.net

What Is Encoder And Decoder In Computer Architecture at Christy Mather blog

From qdrant.tech

Question Answering as a Service with Cohere and Qdrant Qdrant

From ceaphkfx.blob.core.windows.net

What Is An Video Encoder at Margaret Shoop blog

From www.scaler.com

What is Encoder in Transformers Scaler Topics

From jealouscomputers.com

What Is an Encoder and How Does It Work? (100 Working)

From www.scaler.com

What is Encoder in Transformers Scaler Topics

From huggingface.co

facebook/sparpaqbm25lexmodelqueryencoder at main

From www.scaler.com

What is Encoder in Transformers Scaler Topics

From www.encoder.com

Encoder The Ultimate Guide What is an Encoder, Uses & More EPC

From www.electricaltechnology.org

What is the Differences Between Encoder and Decoder?

From www.researchgate.net

The architecture of TGTR. (A) Topic Recognizer; (B) Query Encoder; (C)... Download Scientific

From www.youtube.com

Introduction to Encoders and Decoders YouTube

From www.geeksforgeeks.org

Self attention in NLP

From theinstrumentguru.com

Encoder THE INSTRUMENT GURU

From www.nesabamedia.com

Apa itu Encoder? Mengenal Pengertian Encoder

From huggingface.co

ncbi/MedCPTQueryEncoder · Hugging Face

From magazine.sebastianraschka.com

Understanding Encoder And Decoder LLMs

From www.maestro.io

What is an Encoder? The Ultimate Guide to Video Encoders for Streaming

From github.com

GitHub AncaMt/CHATBOTencoderdecoder The objective of this project is to create a deep

From magazine.sebastianraschka.com

Understanding Encoder And Decoder LLMs

From www.geeksforgeeks.org

Digital logic Encoder

From stats.stackexchange.com

neural networks What exactly are keys, queries, and values in attention mechanisms? Cross

From exopwpicq.blob.core.windows.net

What Is The Encoder In Communication at Darlene Wray blog

From www.scaler.com

Encoder in Digital Electronics Scaler Topics

From www.circuitdiagram.co

4 To 2 Encoder Truth Table And Circuit Diagram

From lena-voita.github.io

Seq2seq and Attention

From circuitdblola.z19.web.core.windows.net

What Is A Priority Encoder

From theinstrumentguru.com

Encoder and Decoder THE INSTRUMENT GURU

From electronicshacks.com

What Is a Priority Encoder? ElectronicsHacks