Torch JIT Save C++

PyTorch JIT is an optimizing JIT compiler for PyTorch. Portability allows models to be deployed in multithreaded inference servers, on mobile devices, and in cars, which is difficult with Python. To achieve this, PyTorch models need to be decoupled from any specific runtime.

To convert a PyTorch model to TorchScript via tracing, you must pass an instance of your model along with an example input to the torch.jit.trace function. Saving the result stores an offline version of the module for use in a separate process: the saved module serializes all of the methods, submodules, parameters, and attributes of the module.

There is no way to take a model in C++ that inherits from torch::nn::Module and trace it to create a torch::jit::Module. But you can load a torch::jit::Module and then …

A related task is saving a tensor in C++ and loading it in Python. In C++, call torch::save() to save one tensor. torch.jit.save(), on the other hand, serializes ScriptModules to a format that can be loaded in Python or C++. This is useful when saving and …
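The trace-then-save workflow described above can be sketched in Python as follows. This is a minimal illustration, not a definitive recipe: `TinyNet`, its layer sizes, and `example_input` are hypothetical names invented for the example, and the module is written to an in-memory buffer rather than a file so the sketch leaves nothing on disk.

```python
import io

import torch


class TinyNet(torch.nn.Module):
    """Hypothetical toy model, used only to have something to trace."""

    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x):
        return torch.relu(self.linear(x))


model = TinyNet().eval()
example_input = torch.rand(1, 4)  # assumed input shape for this toy model

# Tracing runs the model once on the example input and records the operations.
traced = torch.jit.trace(model, example_input)

# torch.jit.save accepts a file name or a file-like object.
buffer = io.BytesIO()
torch.jit.save(traced, buffer)

# Reload the serialized module, as a separate process would.
buffer.seek(0)
reloaded = torch.jit.load(buffer)

# The reloaded module should reproduce the original model's output.
same_output = torch.allclose(model(example_input), reloaded(example_input))
```

Passing a file name such as "model.pt" to torch.jit.save instead of the buffer produces a file that a C++ program can open with torch::jit::load.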

From dxouvjcwk.blob.core.windows.net

Torch Jit Dict at Susan Fairchild blog

From blog.csdn.net

Why torch::jit::load(model_path) fails (CSDN blog)

From caffe2.ai

Caffe2 C++ API: torch::jit::script::TreeView Struct Reference

From discuss.pytorch.org

Unable to save the pytorch model using torch.jit.scipt (jit, PyTorch)

From blog.csdn.net

Problems with torch.jit.trace in YOLOv8 (CSDN blog)

From github.com

torch::jit::script::Module not threadsafe · Issue 15210 · pytorch

From github.com

pytorch/torch/csrc/jit/api/module_save.cpp at main · pytorch/pytorch

From github.com

torch.jit.save() produces complex c++ error when path does not exist

From juejin.cn

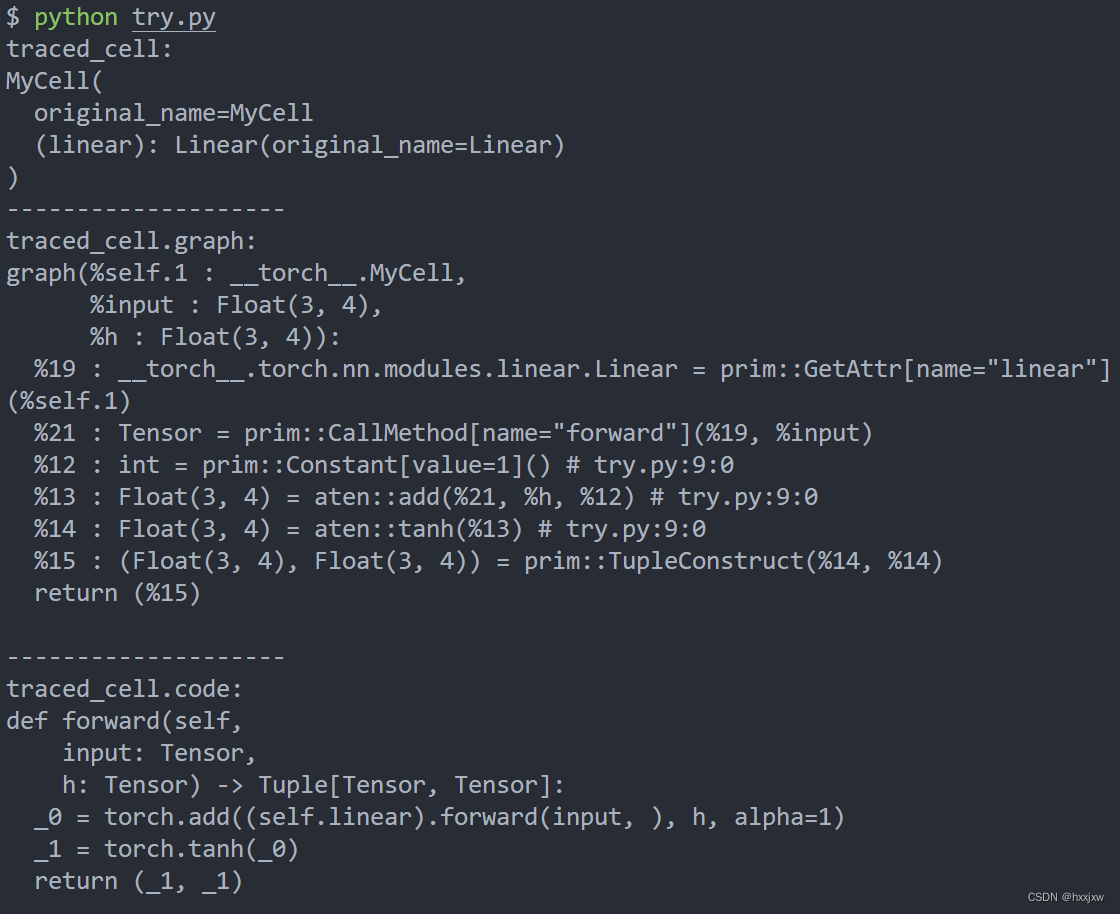

TorchScript series explained (2): how the Torch JIT tracer is implemented (Juejin)

From blog.csdn.net

The difference between torch.jit.trace and torch.jit.script (CSDN blog)

From github.com

Using torchvision roi_align in libtorch c++ jit modules · Issue 3294

From github.com

torch.jit.save() generates different contents in a file among different

From github.com

Performance issue with torch.jit.trace(), slow prediction in C++ (CPU

From github.com

torch.jit.load support specifying a target device. · Issue 775

From github.com

Issues while saving a TorchScript using torch.jit.save

From huanghongxing-jim.github.io

PyTorch C/C++ model deployment (Huang Hongxing)

From github.com

torch.jit.save gives error RuntimeError Could not export Python

From blog.csdn.net

Implementing image style transfer in C++ (CSDN blog)

From loexiizxq.blob.core.windows.net

Torch.jit.trace Input Name at Robert Francis blog

From klajnsgdr.blob.core.windows.net

Torch.jit.trace Dynamic Shape at Josephine Warren blog

From github.com

C++ FrontEnd model.forward() crash when loading model with torchjit

From loehilura.blob.core.windows.net

Torch Jit Trace And Save at Jason Sterling blog

From github.com

[BUG] torch jit save / AssertionError Expected Module but got · Issue

From github.com

Cannot load a saved torch.jit.trace using C++'s torch::jit::load

From github.com

using torchjittrace to run your model on c++ · Issue 70 · vchoutas

From github.com

[jit] the torch script and c++ api using · Issue 15523 · pytorch

From github.com

Microsoft C++ exception torch::jit::ErrorReport at memory location

From github.com

Models saved in C++ LibTorch with torch::save, cannot be loaded in

From blog.csdn.net

[Official docs walkthrough] Using torch.jit.script, with example code from the official documentation (CSDN blog)

From github.com

MaskRCNN model loaded fail with torch::jit::load(model_path) (C++ API

From cenvcxsf.blob.core.windows.net

Torch Jit Quantization at Juana Alvarez blog