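torch.autograd.set_detect_anomaly(True)

torch.autograd lets you compute gradients of arbitrary scalar-valued functions with minimal changes to existing code. Calling torch.autograd.set_detect_anomaly(True) turns on its anomaly detection mode, which is a debugging aid. While the mode is active, an error raised in the backward pass is reported together with the traceback of the forward operation that created the failing node, and a backward function that returns NaN values raises a RuntimeError. Recurring forum questions ask why a RuntimeError is raised while anomaly detection is enabled. A GitHub issue also reports that calling torch.autograd.grad() while torch.autograd.set_detect_anomaly(True) is active causes a memory leak; according to that report, its reproduction snippet (not included here) leaks about 2 MiB.

A related tip: when training on a GPU, it is better to set pin_memory=True on the DataLoader. This instructs the DataLoader to use pinned (page-locked) host memory and enables faster, asynchronous memory copies from the host to the GPU.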
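A minimal sketch of the DataLoader tip, assuming a toy in-memory TensorDataset (the shapes, batch size, and variable names are illustrative only). pin_memory is requested only when CUDA is available, and non_blocking=True on Tensor.to lets the host-to-device copy run asynchronously when the source batch is pinned.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset: 64 samples with 8 features each and a binary label (illustrative only).
dataset = TensorDataset(torch.randn(64, 8), torch.randint(0, 2, (64,)))

use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")

# Pinned (page-locked) host memory only pays off when batches are copied to a GPU.
loader = DataLoader(dataset, batch_size=16, shuffle=True, pin_memory=use_cuda)

n_batches = 0
for features, labels in loader:
    # non_blocking=True allows an asynchronous copy when the source tensor is pinned.
    features = features.to(device, non_blocking=True)
    labels = labels.to(device, non_blocking=True)
    n_batches += 1

print(n_batches)  # 4
```

On a CPU-only machine the loop still runs; pinning is simply skipped.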

From discuss.pytorch.org


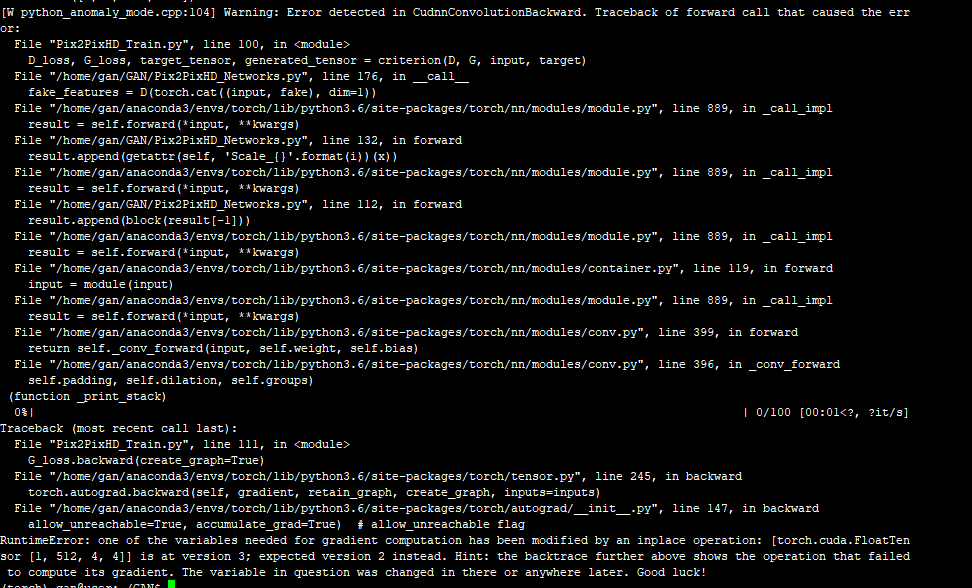

RuntimeError: one of the variables needed for gradient computation has ...
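The in-place error named in this title can be reproduced in a few lines. In this minimal sketch (the tensors and the choice of sigmoid are illustrative), sigmoid saves its output for the backward pass, so modifying that output in place invalidates it. torch.autograd.set_detect_anomaly(True) is used here as a context manager; it has to be active during the forward pass too, because that is when it records where each autograd node was created.

```python
import torch

def inplace_bug():
    a = torch.randn(3, requires_grad=True)
    # Anomaly mode must cover the forward pass so it can record the creating op.
    with torch.autograd.set_detect_anomaly(True):
        b = a.sigmoid()  # SigmoidBackward0 saves its output b
        b.mul_(2)        # in-place edit bumps b's version counter
        try:
            b.sum().backward()
        except RuntimeError as err:
            return "modified by an inplace operation" in str(err)
    return False

def inplace_fixed():
    a = torch.randn(3, requires_grad=True)
    b = a.sigmoid()
    c = b * 2  # out-of-place: the saved output b is left untouched
    c.sum().backward()
    s = a.detach().sigmoid()
    # d/da [2 * sigmoid(a)] = 2 * s * (1 - s)
    return torch.allclose(a.grad, 2 * s * (1 - s))

print(inplace_bug(), inplace_fixed())  # True True
```

Replacing the in-place call with its out-of-place form (or cloning before modifying) is the usual fix.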


From github.com

raises DataDependentOutputException with torch...

From github.com

torch.autograd.set_detect_anomaly(True) does not exist in C++? · Issue

From www.reddit.com

RuntimeError: one of the variables needed for gradient computation has ...

From exozxhilw.blob.core.windows.net

Torch Set_Detect_Anomaly at Neil Campbell blog

From github.com

autograd.grad with set_detect_anomaly(True) will cause memory leak

From blog.csdn.net

Usage examples of class torch.autograd.set_grad_enabled(mode: bool)
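A short sketch of torch.autograd.set_grad_enabled, which, like set_detect_anomaly, works both as a context manager and as a plain function call. The forward helper and its is_train flag are illustrative stand-ins for a training/evaluation switch, not part of PyTorch.

```python
import torch

x = torch.ones(2, requires_grad=True)

def forward(is_train):
    # Graph recording follows the flag: on for training, off for evaluation.
    with torch.autograd.set_grad_enabled(is_train):
        return x * 2

y_eval = forward(False)
y_train = forward(True)
print(y_eval.requires_grad, y_train.requires_grad)  # False True

# Function form: changes the global mode until it is set again.
torch.autograd.set_grad_enabled(False)
print((x * 2).requires_grad)  # False
torch.autograd.set_grad_enabled(True)
```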

From www.pythonfixing.com

[FIXED] torch.optim.LBFGS() does not change parameters - PythonFixing

From discuss.pytorch.org

Inplace operation error - autograd - PyTorch Forums

From blog.csdn.net

The use of and differences between loss.backward() and torch.autograd.grad in PyTorch (easy to understand) - CSDN Blog
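The practical difference named in this title can be shown on a toy tensor (w is illustrative): torch.autograd.grad returns the gradients and leaves .grad alone, while backward() accumulates into .grad.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

# torch.autograd.grad returns d(sum(w^2))/dw = 2w and leaves w.grad untouched.
(g,) = torch.autograd.grad((w ** 2).sum(), w)
print(g, w.grad)  # tensor([2., 4.]) None

# backward() accumulates into w.grad; calling it twice adds the gradients.
(w ** 2).sum().backward()
(w ** 2).sum().backward()
print(w.grad)  # tensor([4., 8.])
```

This accumulation is why training loops call optimizer.zero_grad() (or reset .grad) between steps.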

From www.youtube.com

allow_unused=True in torch.autograd in PyTorch - YouTube
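A minimal sketch of what allow_unused=True changes in torch.autograd.grad, using two illustrative scalars where z never takes part in the computation. retain_graph=True on the first call keeps the graph alive so it can be differentiated again.

```python
import torch

x = torch.tensor(1.0, requires_grad=True)
z = torch.tensor(2.0, requires_grad=True)  # never used in the graph below
y = x * 3

# Without allow_unused=True this call raises a RuntimeError because z is unused.
try:
    torch.autograd.grad(y, (x, z), retain_graph=True)
    strict_failed = False
except RuntimeError:
    strict_failed = True

# With allow_unused=True the unused input simply gets None as its gradient.
gx, gz = torch.autograd.grad(y, (x, z), allow_unused=True)
print(strict_failed, gx, gz)  # True tensor(3.) None
```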

From blog.csdn.net

PyTorch raises the following error: RuntimeError: one of the variables needed for gradient ...

From github.com

RuntimeError: one of the variables needed for gradient computation has ...

From github.com

Improve torch.autograd.set_detect_anomaly documentation · Issue #26408

From github.com

Debugging with enable anomaly detection torch.autograd.set_detect...
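Anomaly detection slows the backward pass down and, per the reports listed on this page, has been linked to memory growth with torch.autograd.grad(), so it is usually limited to the code being debugged. A minimal sketch of the two documented forms: set_detect_anomaly called as a function flips the global flag, while the torch.autograd.detect_anomaly() context manager enables the mode only inside its block and restores the previous state on exit.

```python
import torch

# Function form: switches anomaly mode on globally until it is switched off.
torch.autograd.set_detect_anomaly(True)
enabled_globally = torch.is_anomaly_enabled()
torch.autograd.set_detect_anomaly(False)

# Context-manager form: anomaly mode is active only inside the block,
# which confines its overhead to the step being debugged.
with torch.autograd.detect_anomaly():
    inside = torch.is_anomaly_enabled()
outside = torch.is_anomaly_enabled()

print(enabled_globally, inside, outside)  # True True False
```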

From blog.csdn.net

Usage notes for torch.Tensor.requires_grad_(requires_grad=True) - CSDN Blog
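A minimal sketch of Tensor.requires_grad_() on an illustrative tensor t: it sets the flag in place (the argument defaults to True), after which autograd tracks operations on the tensor and backward() fills in t.grad.

```python
import torch

t = torch.zeros(3)
print(t.requires_grad)  # False

# requires_grad_() flips the flag in place; the default argument is True.
t.requires_grad_()
print(t.requires_grad)  # True

# t is now a leaf that autograd tracks, so backward() populates t.grad.
(t * 2).sum().backward()
print(t.grad)  # tensor([2., 2., 2.])
```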

From github.com

RuntimeError: Function 'MmBackward0' returned nan values in its 1th ...
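Errors of the form "Function '...Backward0' returned nan values" come from anomaly mode's NaN check on each backward function. The sketch below triggers that check with sqrt at zero instead of the matrix multiply from the linked report (an illustrative substitute, not the original reproduction): multiplying by 0 sends a zero gradient into sqrt's backward, whose derivative 1 / (2 * sqrt(x)) is infinite at 0, so the result is NaN. Without anomaly mode, x.grad would silently become NaN instead of raising.

```python
import torch

def find_nan_source():
    x = torch.zeros(1, requires_grad=True)
    with torch.autograd.detect_anomaly():
        # Zero incoming gradient times an infinite slope at sqrt(0) gives NaN
        # inside sqrt's backward, which anomaly mode turns into a RuntimeError.
        y = (torch.sqrt(x) * 0).sum()
        try:
            y.backward()
        except RuntimeError as err:
            return str(err)
    return None

msg = find_nan_source()
print(msg)
```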

From blog.csdn.net

Usage of torch.autograd.set_detect_anomaly in mmdetection

From discuss.pytorch.org

CustomLoss Function Outputting Inf as a loss in one iteration and NaNs ...

From github.com

Very slow runtime caused by `torch.autograd.set_detect_anomaly(True)`

From zhuanlan.zhihu.com

Question about the retain_graph parameter in torch.autograd.grad() - Zhihu
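A minimal sketch of retain_graph in torch.autograd.grad, on an illustrative scalar x. exp saves its output for the backward pass; a call with retain_graph=True keeps that saved tensor, while a call without it frees the graph, so a further call fails.

```python
import torch

x = torch.tensor(2.0, requires_grad=True)
y = x.exp()  # exp saves its output for the backward pass

# retain_graph=True keeps the saved tensors so the graph can be reused.
(g1,) = torch.autograd.grad(y, x, retain_graph=True)
(g2,) = torch.autograd.grad(y, x)  # default retain_graph=False frees the graph

# A third call fails because the saved tensors were released by the second one.
try:
    torch.autograd.grad(y, x)
    third_failed = False
except RuntimeError:
    third_failed = True

print(g1.item(), g2.item(), third_failed)
```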

From github.com

AttributeError: 'Parameter' object has no attribute 'fisher' · Issue #8

From github.com

using deepspeed initialize huggingface GPT2LMHeadModel, it occured ...

From docs.acceldata.io

Anomaly Detection Settings - Torch

From khalitt.github.io

Handling NaN in PyTorch
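One common NaN-handling pattern is to check the loss with torch.isfinite before calling backward and skip the update when it is NaN or Inf. In the sketch below, safe_step is a hypothetical helper (not a PyTorch API), and the linear model and random inputs are illustrative; skipping a step hides the symptom, so anomaly detection is still the tool for finding where the NaN originates.

```python
import torch

def safe_step(model, optimizer, loss):
    """Hypothetical helper: skip the update when the loss is NaN or Inf."""
    optimizer.zero_grad()
    if not torch.isfinite(loss):
        return False
    loss.backward()
    optimizer.step()
    return True

model = torch.nn.Linear(2, 1)
opt = torch.optim.SGD(model.parameters(), lr=0.1)
x = torch.randn(4, 2)

ok = safe_step(model, opt, model(x).pow(2).mean())           # finite loss: update applied
bad = safe_step(model, opt, model(x).sum() * float("nan"))   # NaN loss: update skipped
print(ok, bad)  # True False
```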

From discuss.pytorch.org

[Solved][Pytorch1.5] RuntimeError: one of the variables needed for ...