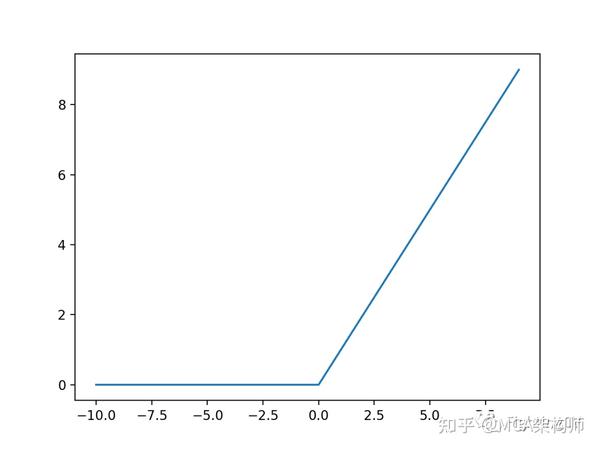

Rectified Linear Function. An activation function, in the context of neural networks, is a mathematical function applied to the output of a neuron. The rectified linear unit (ReLU) is one of the most popular activation functions used in neural networks, especially in deep learning. It is defined as f(x) = max(0, x): linear (the identity) for positive inputs and zero for negative inputs. Each piece is linear, but the kink at x = 0 is the source of the function's nonlinearity, and that nonlinearity is what lets a deep learning model represent more than a single linear map. ReLU has transformed neural network design largely through its simplicity and computational efficiency: evaluating it takes a single comparison, and its gradient is exactly 1 for every positive input. Because that gradient does not shrink the way the derivative of a saturating function such as the sigmoid does, ReLU helps mitigate the vanishing-gradient problem in deep networks. It is not a complete cure, since a unit whose input is negative passes back zero gradient.
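The definition and the two claims above (the kink creates nonlinearity; the gradient does not vanish for positive inputs) can be checked with a small plain-Python sketch. The helper names `relu`, `relu_grad`, `sigmoid_grad`, `abs_via_relu`, and `chained_grad` are hypothetical, chosen for illustration rather than taken from any library. ReLU has no true derivative at exactly x = 0; the sketch assumes the common convention of using 0 there. The depth comparison is a toy that ignores weights and assumes every layer sees the same input.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: identity for positive x, zero otherwise."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU. At x == 0 there is a kink and no true derivative;
    this sketch assumes the common convention of returning 0 there."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    """Logistic sigmoid, used here only for comparison."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid; its maximum value is 0.25, at x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def abs_via_relu(x: float) -> float:
    """|x| = relu(x) + relu(-x): two linear pieces joined at the kink give a
    function that no single linear map can represent."""
    return relu(x) + relu(-x)


def chained_grad(grad_fn, x: float, depth: int) -> float:
    """Toy backpropagation: multiply the local gradient of `depth` stacked
    activations (hypothetical simplification: no weights, same input x)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(x)
    return g


if __name__ == "__main__":
    print([relu(v) for v in (-2.0, 0.0, 3.5)])     # [0.0, 0.0, 3.5]
    print([abs_via_relu(v) for v in (-2.0, 3.0)])  # [2.0, 3.0]
    print(chained_grad(relu_grad, 1.0, 20))        # 1.0
    print(chained_grad(sigmoid_grad, 0.0, 20))     # 0.25 ** 20, about 9.1e-13
```

Even in the sigmoid's best case (x = 0), twenty stacked layers shrink the gradient by a factor of about 10^12, while the ReLU product stays at 1 for positive inputs; a negative input would instead zero the ReLU product entirely.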

From zhuanlan.zhihu.com

So ReLU Is This Useful! An In-Depth Look at the ReLU Activation Function (Zhihu)

From sciences24.com

ReLU Activation Function in Arabic

From www.youtube.com

Leaky ReLU Activation Function: Leaky Rectified Linear Unit Function

From www.researchgate.net

(PDF) Analysis of function of rectified linear unit used in deep learning

From deepai.org

TaLU: A Hybrid Activation Function Combining Tanh and Rectified Linear …

From lme.tf.fau.de

Lecture Notes in Deep Learning: Activations, Convolutions, and Pooling

From awjunaid.com

How does the Rectified Linear Unit (ReLU) activation function work?

From www.youtube.com

Rectified Linear Unit (ReLU) Activation Functions (YouTube)

From www.researchgate.net

Rectified linear unit illustration

From machinelearningmastery.com

A Gentle Introduction to the Rectified Linear Unit (ReLU)

From www.oreilly.com

Rectified Linear Unit: Neural Networks with R [Book]

From www.youtube.com

Tutorial 10: Activation Functions Rectified Linear Unit (ReLU) and Leaky …

From www.researchgate.net

Rectified Linear Unit (ReLU) [72]

From www.researchgate.net

ReLU activation function. ReLU, rectified linear unit

From mlnotebook.github.io

A Simple Neural Network: Transfer Functions · Machine Learning Notebook

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function

From www.vrogue.co

Implement Rectified Linear Activation Function (ReLU) … (vrogue.co)

From www.youtube.com

Rectified Linear Unit (ReLU) Activation Function (YouTube)

From www.researchgate.net

Activation function (ReLU). ReLU: Rectified Linear Activation

From www.analyticsvidhya.com

Activation Functions for Neural Networks and their Implementation in Python

From www.researchgate.net

2 Rectified Linear Unit function

From www.semanticscholar.org

Figure 5 from Analysis of function of rectified linear unit used in …

From zhuanlan.zhihu.com

So ReLU Is This Useful! An In-Depth Look at the ReLU Activation Function (Zhihu)

From www.vrogue.co

Rectified Linear Unit (ReLU) Activation Functions (vrogue.co)

From www.aiplusinfo.com

Rectified Linear Unit (ReLU): Introduction and Uses in Machine Learning

From www.researchgate.net

Rectified linear unit (ReLU) activation function

From www.researchgate.net

Functions including exponential linear unit (ELU), parametric rectified …

From www.researchgate.net

Plot of the sigmoid function, hyperbolic tangent, rectified linear unit

From www.researchgate.net

Rectified linear unit as activation function

From www.researchgate.net

The Rectified Linear Unit (ReLU) activation function

From pub.aimind.so

Rectified Linear Unit (ReLU) Activation Function, by Cognitive Creator

From srdas.github.io

Deep Learning

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function [16]

From www.slideserve.com

PPT: Perceptron PowerPoint Presentation

From www.gabormelli.com

S-shaped Rectified Linear Activation Function (GM-RKB)

From www.mplsvpn.info

Rectified Linear Unit Activation Function In Deep Learning (MPLSVPN)
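Several of the figures listed above refer to ReLU variants (Leaky ReLU, the exponential linear unit, and the parametric rectified linear unit). A minimal plain-Python sketch of their standard definitions follows. The function names are hypothetical, and the defaults `alpha = 0.01` for Leaky ReLU and `alpha = 1.0` for ELU are commonly used values assumed here, not taken from the sources above. In PReLU the negative-side slope is learned during training; this sketch simply passes it in.

```python
import math


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: a small slope alpha for negative inputs instead of zero,
    so negative inputs still receive a small gradient (alpha is an assumed default)."""
    return x if x > 0 else alpha * x


def prelu(x: float, alpha: float) -> float:
    """Parametric ReLU: same form as Leaky ReLU, but alpha would be learned
    during training; here it is just an argument."""
    return x if x > 0 else alpha * x


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: identity for positive x, and the smooth curve
    alpha * (exp(x) - 1) for x <= 0, which approaches -alpha as x -> -inf."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


if __name__ == "__main__":
    print(leaky_relu(-2.0))   # -0.02
    print(prelu(-2.0, 0.25))  # -0.5
    print(elu(0.0))           # 0.0
```

All three keep ReLU's identity behavior for positive inputs and differ only in how they treat negative inputs, which is where plain ReLU passes back zero gradient.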