Houlsby Adapter

Adapters allow one to train a model to solve new tasks while adjusting only a few parameters per task. This technique yields compact models that add only a small number of trainable parameters for each task. The parameters of the original pre-trained network stay fixed and are shared across all tasks. To demonstrate the effectiveness of adapters, we transfer the recently proposed BERT Transformer model to 26 diverse text classification tasks. On the GLUE benchmark (Wang et al., 2018), adapter tuning comes within 0.4% of the performance of full fine-tuning while adding only 3.6% additional parameters per task. We show that adapters achieve parameter-efficient transfer for text tasks.
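
The core building block can be sketched as a bottleneck module. It works in four steps: project the d-dimensional hidden state down to a small size m, apply a nonlinearity, project back up to d, and add the input back through a skip connection. The code below is a plain-Python sketch under stated assumptions, not the authors' implementation. The class name `BottleneckAdapter`, the GELU nonlinearity, and the zero-initialized up-projection are all illustrative choices. A zero up-projection is one simple way to make the module start as a near-identity map.

```python
import math
import random


def gelu(x: float) -> float:
    # tanh approximation of GELU (assumed nonlinearity for this sketch)
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class BottleneckAdapter:
    """Hypothetical bottleneck adapter: d -> m -> d with a skip connection."""

    def __init__(self, d: int, m: int, init_std: float = 1e-2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.d, self.m = d, m
        # Down-projection W_down (d x m): small random values.
        self.w_down = [[rng.gauss(0.0, init_std) for _ in range(m)] for _ in range(d)]
        self.b_down = [0.0] * m
        # Up-projection W_up (m x d): zeros, so the module starts as the identity map.
        self.w_up = [[0.0] * d for _ in range(m)]
        self.b_up = [0.0] * d

    def num_params(self) -> int:
        # W_down (d*m) + b_down (m) + W_up (m*d) + b_up (d)
        return 2 * self.d * self.m + self.d + self.m

    def __call__(self, h: list[float]) -> list[float]:
        # Bottleneck activation z = gelu(h @ W_down + b_down), length m.
        z = [
            gelu(sum(h[i] * self.w_down[i][j] for i in range(self.d)) + self.b_down[j])
            for j in range(self.m)
        ]
        # Output = h + z @ W_up + b_up, length d (skip connection around the bottleneck).
        return [
            h[k] + sum(z[j] * self.w_up[j][k] for j in range(self.m)) + self.b_up[k]
            for k in range(self.d)
        ]


adapter = BottleneckAdapter(d=8, m=2)
h = [0.1 * i for i in range(8)]
print(adapter(h) == h)       # True: zero up-projection => identity at initialization
print(adapter.num_params())  # 42 = 2*8*2 + 8 + 2
```

Because the up-projection starts at zero, the adapter initially passes its input through unchanged. Inserting it therefore does not perturb the frozen pre-trained network before training begins. For a new task, only these 2md + d + m values per adapter would be updated.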

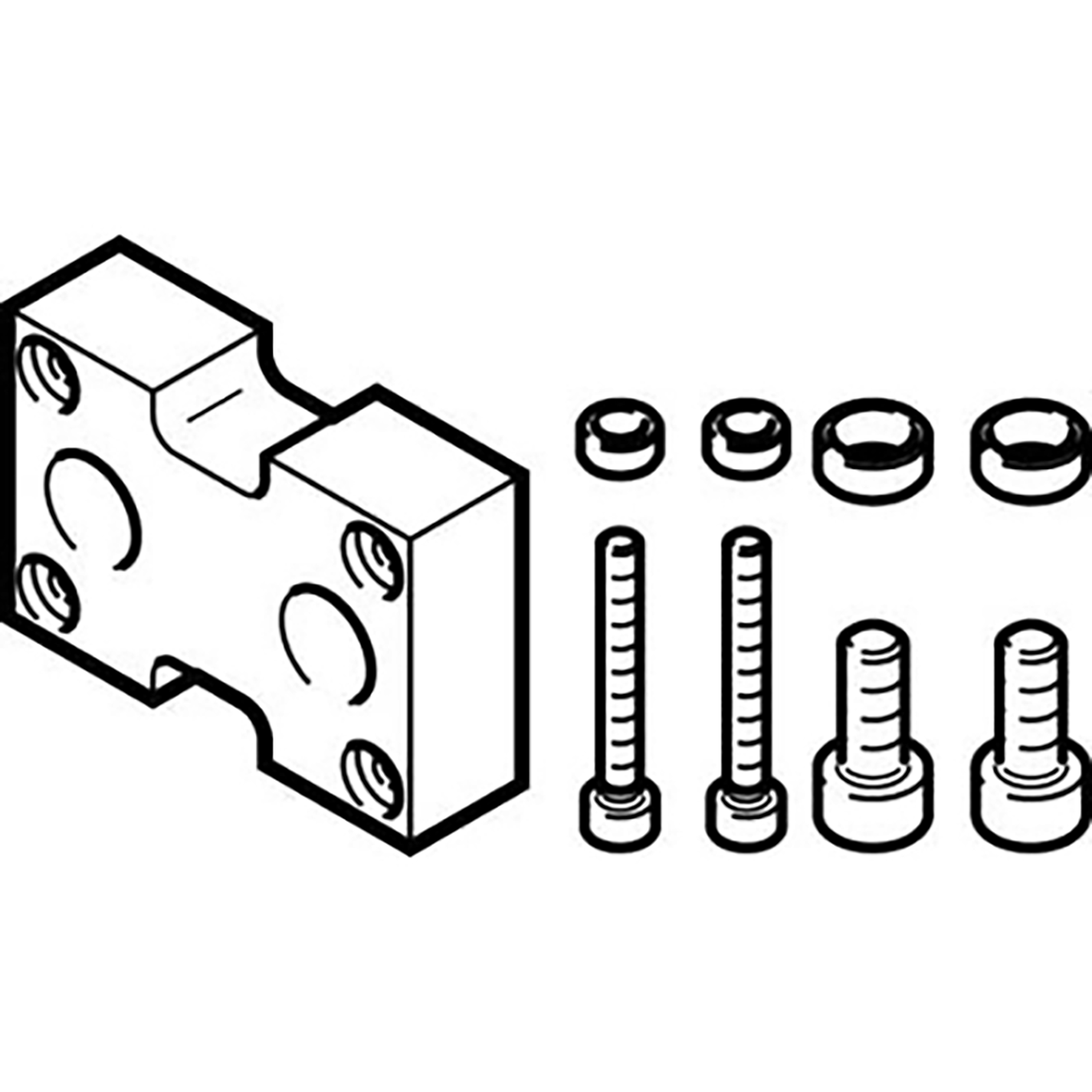

from shop.shepherd-hydraulics.com

This technique yields compact models that. On the glue benchmark (wang et al., 2018), adapter tuning. Adapters allow one to train a model to solve new tasks, but adjust only a few parameters per task. To demonstrate adapter's effectiveness, we transfer the recently proposed bert transformer model to 26 diverse text. To demonstrate adapter’s effectiveness, we transfer the recently proposed bert transformer model to $26$ diverse text. We show that adapters achieve parameter efficient transfer for text tasks. To demonstrate adapter's effectiveness, we transfer the recently proposed bert transformer model to 26 diverse text.

Adapter Kit Shepherd Hydraulics

Houlsby Adapter Adapters allow one to train a model to solve new tasks, but adjust only a few parameters per task. To demonstrate adapter's effectiveness, we transfer the recently proposed bert transformer model to 26 diverse text. On the glue benchmark (wang et al., 2018), adapter tuning. Adapters allow one to train a model to solve new tasks, but adjust only a few parameters per task. To demonstrate adapter’s effectiveness, we transfer the recently proposed bert transformer model to $26$ diverse text. This technique yields compact models that. To demonstrate adapter's effectiveness, we transfer the recently proposed bert transformer model to 26 diverse text. We show that adapters achieve parameter efficient transfer for text tasks.

From shop.shepherd-hydraulics.com

Adapter Kit Shepherd Hydraulics Houlsby Adapter We show that adapters achieve parameter efficient transfer for text tasks. To demonstrate adapter’s effectiveness, we transfer the recently proposed bert transformer model to $26$ diverse text. To demonstrate adapter's effectiveness, we transfer the recently proposed bert transformer model to 26 diverse text. This technique yields compact models that. Adapters allow one to train a model to solve new tasks,. Houlsby Adapter.

From blog.zhuwenq.cc

K-Adapter: Infusing Knowledge into Pre-Training Models with Adapters

From ljvmiranda921.github.io

Study notes on parameter-efficient techniques

From zhuanlan.zhihu.com

[NLP Study] An Introduction to Adapters (Zhihu)

From ar5iv.labs.arxiv.org

[2305.15036] Exploring Adapter-based Transfer Learning for

From huggingface.co

shekmanchoy/news_Houlsby_adapter at main

From lightning.ai

Understanding Parameter-Efficient Finetuning of Large Language Models

From www.researchgate.net

A: Transformer layer without adapters; B: Transformer layer with a Houlsby adapter
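
The two-panel comparison, a Transformer layer without adapters versus one with a Houlsby adapter, comes down to where the modules sit. In Houlsby et al. (2019), each layer receives two adapters. One is applied to the output of the multi-head attention sub-layer, after it has been projected back to the hidden size. The other is applied to the output of the feed-forward sub-layer. In both cases the adapter output is added to the skip connection and then passed to layer normalization. The sketch below mirrors that placement in a post-LN layer. The elementwise `toy_attention` and `toy_feed_forward` functions are hypothetical stand-ins for the frozen sub-layers. The layer norm omits its learned gain and bias for brevity; in the paper, those layer-norm parameters are trained per task alongside the adapters.

```python
from typing import Callable, List, Optional

Vector = List[float]
SubLayer = Callable[[Vector], Vector]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    # Normalization without learned gain/bias, to keep the sketch short.
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / (var + eps) ** 0.5 for v in x]


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def transformer_layer(
    h: Vector,
    attention: SubLayer,
    feed_forward: SubLayer,
    adapter_attn: Optional[SubLayer] = None,
    adapter_ffn: Optional[SubLayer] = None,
) -> Vector:
    """Post-LN layer: without adapters it is layer A, with both adapters it is layer B."""

    def identity(x: Vector) -> Vector:
        return x

    adapter_attn = adapter_attn or identity
    adapter_ffn = adapter_ffn or identity
    # Adapter 1: on the attention output (already projected back to size d),
    # before the skip connection is added and before layer normalization.
    h = layer_norm(add(h, adapter_attn(attention(h))))
    # Adapter 2: same placement around the feed-forward sub-layer.
    h = layer_norm(add(h, adapter_ffn(feed_forward(h))))
    return h


def toy_attention(x: Vector) -> Vector:
    # Hypothetical stand-in for a frozen attention sub-layer.
    return [0.5 * v for v in x]


def toy_feed_forward(x: Vector) -> Vector:
    # Hypothetical stand-in for a frozen feed-forward sub-layer.
    return [v + 1.0 for v in x]


def toy_adapter(x: Vector) -> Vector:
    # Hypothetical nonlinear adapter, only to show that it changes the layer output.
    return [v * v for v in x]


h = [1.0, 2.0, 3.0, 4.0]
layer_a = transformer_layer(h, toy_attention, toy_feed_forward)
layer_b = transformer_layer(h, toy_attention, toy_feed_forward,
                            adapter_attn=toy_adapter, adapter_ffn=toy_adapter)
```

Passing no adapters reproduces layer A exactly. Passing identity adapters gives the same result, which is why an adapter initialized near the identity leaves the pre-trained layer's behavior intact at the start of training.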

From github.com

GitHub - strawberrypie/bert_adapter: Implementation of the paper

From zhuanlan.zhihu.com

Viewing Parameter-Efficient Transfer Learning from a Unified Perspective: it turns out adapter and prefix

From blog.csdn.net

Fine-Tuning Pre-Trained Models: A Guide to Adapter Tuning (CSDN Blog)

From adapterhub.ml

AdapterHub - Adapter-Transformers v3 - Unifying Efficient

From github.com

Why would I use the Houlsby adapter instead of the Pfeiffer one?
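
One concrete difference behind the Houlsby-versus-Pfeiffer question is the parameter count. The Houlsby layout inserts two bottleneck adapters per Transformer layer. The configuration commonly associated with Pfeiffer et al. keeps a single adapter after the feed-forward sub-layer. Each adapter with hidden size d and bottleneck size m holds 2md + d + m weights and biases. At equal m, the single-adapter layout therefore trains half as many adapter parameters. The sizes below (12 layers, d = 768, m = 64, roughly BERT-base) are illustrative assumptions. The count covers only the adapter projections and ignores layer-norm and classifier parameters.

```python
def adapter_params(d: int, m: int) -> int:
    """One bottleneck adapter: W_down (d*m) + b_down (m) + W_up (m*d) + b_up (d)."""
    return 2 * d * m + d + m


def total_adapter_params(num_layers: int, d: int, m: int, adapters_per_layer: int) -> int:
    """Adapter parameters across the whole encoder for a given placement."""
    return num_layers * adapters_per_layer * adapter_params(d, m)


# Illustrative BERT-base-like sizes (assumption): 12 layers, d = 768, bottleneck m = 64.
houlsby = total_adapter_params(num_layers=12, d=768, m=64, adapters_per_layer=2)
pfeiffer = total_adapter_params(num_layers=12, d=768, m=64, adapters_per_layer=1)
print(houlsby, pfeiffer)  # 2379264 1189632
```

At these sizes, the Houlsby layout adds about 2.4M trainable parameters, roughly 2% of BERT-base's approximately 110M. Whether the second adapter is worth its cost is an empirical trade-off between parameter budget and accuracy on the target task.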

From www.researchgate.net

The Houlsby adapter proposed in [8]. The Transformer layer consists of

From neilhoulsby.github.io

Neil Houlsby

From www.researchgate.net

CLASSIC adopts Adapter-BERT (Houlsby et al., 2019) and its adapters

From www.cnblogs.com

Lexicon Enhanced Chinese Sequence Labelling Using BERT Adapter (LEBERT)
