Partition Spark When . Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. It is an important tool for achieving optimal s3 storage. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. The main idea behind data partitioning is to optimise your. When you create a dataframe from a file/table, based on certain. It is crucial for optimizing performance when dealing with large datasets. Simply put, partitions in spark are the smaller, manageable chunks of your big data. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing.

from sparkbyexamples.com

In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. When you create a dataframe from a file/table, based on certain. It is an important tool for achieving optimal s3 storage. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. It is crucial for optimizing performance when dealing with large datasets. The main idea behind data partitioning is to optimise your. Simply put, partitions in spark are the smaller, manageable chunks of your big data.

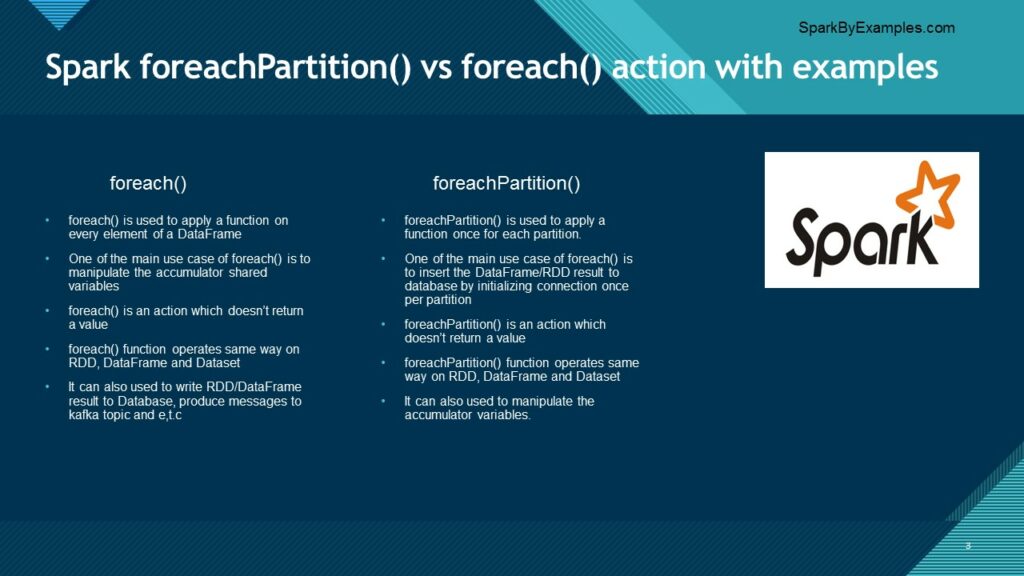

Spark foreachPartition vs foreach what to use? Spark By {Examples}

Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. The main idea behind data partitioning is to optimise your. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. It is crucial for optimizing performance when dealing with large datasets. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. Simply put, partitions in spark are the smaller, manageable chunks of your big data. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. When you create a dataframe from a file/table, based on certain. It is an important tool for achieving optimal s3 storage.

From www.youtube.com

Apache Spark Dynamic Partition Pruning Spark Tutorial Part 11 YouTube Partition Spark When Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. It is. Partition Spark When.

From www.projectpro.io

How Data Partitioning in Spark helps achieve more parallelism? Partition Spark When In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. When you create a dataframe from a file/table, based on certain. It is crucial for optimizing performance when dealing with large datasets. Simply put, partitions in spark are the smaller, manageable chunks of your big data. Pyspark partition is. Partition Spark When.

From blog.csdn.net

Spark分区 partition 详解_spark partitionCSDN博客 Partition Spark When Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. When you create a dataframe from a file/table, based on certain. The main idea behind data partitioning is to optimise your. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different.. Partition Spark When.

From www.ishandeshpande.com

Understanding Partitions in Apache Spark Partition Spark When In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. The main idea behind data partitioning is to optimise your. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. In this post, we’ll learn how to explicitly control. Partition Spark When.

From klaojgfcx.blob.core.windows.net

How To Determine Number Of Partitions In Spark at Troy Powell blog Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. It is an important tool for achieving optimal s3 storage. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Partitioning refers to the division of data into chunks, known as partitions, which can. Partition Spark When.

From medium.com

Dynamic Partition Pruning. Query performance optimization in Spark Partition Spark When It is an important tool for achieving optimal s3 storage. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. In the context of apache spark, it can be defined as. Partition Spark When.

From statusneo.com

Everything you need to understand Data Partitioning in Spark StatusNeo Partition Spark When In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. The main idea behind data partitioning is to optimise your. It is an important tool for achieving optimal s3. Partition Spark When.

From blogs.perficient.com

Spark Partition An Overview / Blogs / Perficient Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Simply put, partitions in spark are the smaller, manageable chunks of your big data. Spark partitioning refers to the division. Partition Spark When.

From sparkbyexamples.com

Spark foreachPartition vs foreach what to use? Spark By {Examples} Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. The main idea behind data partitioning is to optimise your. It is an important tool for achieving optimal s3 storage. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. It is crucial for. Partition Spark When.

From klaojgfcx.blob.core.windows.net

How To Determine Number Of Partitions In Spark at Troy Powell blog Partition Spark When It is crucial for optimizing performance when dealing with large datasets. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. Partitioning refers to the division of data into. Partition Spark When.

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna Partition Spark When Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. It is crucial for optimizing performance when dealing with large datasets. Simply put, partitions in spark are the smaller, manageable chunks of your big data. It is an important tool for achieving optimal s3 storage. In the context of apache. Partition Spark When.

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna Partition Spark When It is crucial for optimizing performance when dealing with large datasets. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Simply put, partitions in spark are the smaller, manageable chunks of your big data. Partitioning refers to the division of data into chunks, known as partitions, which can. Partition Spark When.

From www.gangofcoders.net

How does Spark partition(ing) work on files in HDFS? Gang of Coders Partition Spark When Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. It is crucial for optimizing performance when dealing with large datasets. Simply put, partitions in spark are the smaller, manageable chunks of your big data. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each. Partition Spark When.

From dzone.com

Dynamic Partition Pruning in Spark 3.0 DZone Partition Spark When The main idea behind data partitioning is to optimise your. It is an important tool for achieving optimal s3 storage. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition. Partition Spark When.

From sparkbyexamples.com

Spark Partitioning & Partition Understanding Spark By {Examples} Partition Spark When It is crucial for optimizing performance when dealing with large datasets. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Pyspark partition is a way to split a large dataset into smaller datasets. Partition Spark When.

From sparkbyexamples.com

Get the Size of Each Spark Partition Spark By {Examples} Partition Spark When Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. It is an important tool for achieving optimal s3 storage. Pyspark partition is a way to split a large dataset. Partition Spark When.

From www.youtube.com

How to create partitions with parquet using spark YouTube Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. It is crucial for optimizing performance when dealing with large datasets. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. It is an important tool for achieving optimal s3 storage.. Partition Spark When.

From techvidvan.com

Apache Spark Partitioning and Spark Partition TechVidvan Partition Spark When Simply put, partitions in spark are the smaller, manageable chunks of your big data. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Spark partitioning refers to the division. Partition Spark When.

From www.youtube.com

Spark Application Partition By in Spark Chapter 2 LearntoSpark Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Simply put, partitions in spark are the smaller, manageable chunks of your big data. It is crucial for optimizing performance when dealing with large. Partition Spark When.

From stackoverflow.com

pyspark Skewed partitions when setting spark.sql.files Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. The main idea behind data partitioning is to optimise your. Simply put, partitions in spark are the smaller, manageable chunks of your big data. It is crucial for optimizing performance when dealing with large datasets. Partitioning refers to the division of data into. Partition Spark When.

From techvidvan.com

Apache Spark Partitioning and Spark Partition TechVidvan Partition Spark When Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Pyspark partition is a. Partition Spark When.

From discover.qubole.com

Introducing Dynamic Partition Pruning Optimization for Spark Partition Spark When The main idea behind data partitioning is to optimise your. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. When you create a dataframe from a file/table, based on certain. Simply put, partitions in spark are the smaller, manageable chunks of your big data. In this post, we’ll. Partition Spark When.

From stackoverflow.com

pyspark Skewed partitions when setting spark.sql.files Partition Spark When The main idea behind data partitioning is to optimise your. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Simply put, partitions in spark are the smaller, manageable chunks of your big data. When you create a dataframe from a file/table, based on certain. Spark partitioning refers to the division. Partition Spark When.

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples} Partition Spark When Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. The main idea behind data partitioning is to optimise your. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. It is an important tool for achieving optimal s3 storage. It. Partition Spark When.

From www.waitingforcode.com

What's new in Apache Spark 3.0 dynamic partition pruning on Partition Spark When When you create a dataframe from a file/table, based on certain. It is crucial for optimizing performance when dealing with large datasets. Partitioning refers to the division of data into chunks, known as partitions, which can be processed independently across different. Simply put, partitions in spark are the smaller, manageable chunks of your big data. In the context of apache. Partition Spark When.

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark Partition Spark When In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. When you create a dataframe from a file/table, based on certain. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. It is an important tool for achieving optimal. Partition Spark When.

From www.youtube.com

How to find Data skewness in spark / How to get count of rows from each Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. It is crucial for optimizing performance when dealing with large datasets. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Pyspark partition is a way to split a large. Partition Spark When.

From medium.com

Managing Spark Partitions. How data is partitioned and when do you Partition Spark When The main idea behind data partitioning is to optimise your. Simply put, partitions in spark are the smaller, manageable chunks of your big data. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across. Partition Spark When.

From www.jowanza.com

Partitions in Apache Spark — Jowanza Joseph Partition Spark When It is an important tool for achieving optimal s3 storage. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. Pyspark partition is a way to split a large. Partition Spark When.

From www.youtube.com

How to partition and write DataFrame in Spark without deleting Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. Partitioning refers to the. Partition Spark When.

From www.newsletter.swirlai.com

SAI 26 Partitioning and Bucketing in Spark (Part 1) Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. When you create a dataframe from a file/table, based on certain. It is crucial for optimizing performance when dealing with large. Partition Spark When.

From engineering.salesforce.com

How to Optimize Your Apache Spark Application with Partitions Partition Spark When In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys.. Partition Spark When.

From www.youtube.com

Apache Spark Data Partitioning Example YouTube Partition Spark When In spark, data is distributed across the nodes in the form of partitions, allowing tasks to be executed in parallel. When you create a dataframe from a file/table, based on certain. The main idea behind data partitioning is to optimise your. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across. Partition Spark When.

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna Partition Spark When In this post, we’ll learn how to explicitly control partitioning in spark, deciding exactly where each row should go. In the context of apache spark, it can be defined as a dividing the dataset into multiple parts across the cluster. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. When you create. Partition Spark When.

From medium.com

Spark Partitioning Partition Understanding Medium Partition Spark When When you create a dataframe from a file/table, based on certain. It is crucial for optimizing performance when dealing with large datasets. Pyspark partition is a way to split a large dataset into smaller datasets based on one or more partition keys. Spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Partitioning. Partition Spark When.