Parquet Column Limit

The Apache Parquet file format is popular for storing and interchanging tabular data. It uses a hybrid storage layout: a table is split horizontally into row groups, and within each row group the values of each column are stored together, sequentially, as a column chunk. This layout lends itself to high performance when selecting and filtering data, because a reader can skip the columns it does not need.

Storing a column's values together also allows efficient compression, because data within a single column tends to be similar. Ideally, you would use Snappy compression, which is the default.

Larger row groups allow for larger column chunks, which makes larger sequential I/O possible. Aim for around 1 GB per file (Spark partition) (1). Matching the row-group size to the file system block size can also improve I/O efficiency, especially in HDFS environments, since each row group can then be read from a single block. The Parquet specification does not limit row groups or column chunks to 2 GB (2³¹ bytes) or even 4 GB (2³² bytes) in size. Know your Parquet files, and you know your scaling limits.

When navigating a Parquet file, an application can use the information in the file's metadata, such as the per-column minimum and maximum statistics kept for each row group, to limit the data scan: any row group whose statistics rule out the filter can be skipped without being read.
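To make "similarity within a column" concrete, here is a toy sketch of two ideas that Parquet's encodings build on: dictionary encoding (replace each value with a small integer index) and run-length encoding (collapse consecutive repeats). It is illustrative only. Parquet's real RLE/bit-packing hybrid encoding and on-disk layout differ, a general-purpose codec such as Snappy is applied on top of the encoded pages, and the function names below are hypothetical.

```python
from typing import Dict, Hashable, List, Sequence, Tuple


def dictionary_encode(values: Sequence[Hashable]) -> Tuple[List[Hashable], List[int]]:
    """Map each value to an index into a list of its distinct values (first-seen order)."""
    dictionary: List[Hashable] = []
    positions: Dict[Hashable, int] = {}
    indices: List[int] = []
    for value in values:
        if value not in positions:
            positions[value] = len(dictionary)
            dictionary.append(value)
        indices.append(positions[value])
    return dictionary, indices


def run_length_encode(indices: Sequence[int]) -> List[Tuple[int, int]]:
    """Collapse consecutive repeated indices into (index, run_length) pairs."""
    runs: List[Tuple[int, int]] = []
    for index in indices:
        if runs and runs[-1][0] == index:
            runs[-1] = (index, runs[-1][1] + 1)
        else:
            runs.append((index, 1))
    return runs


def decode(dictionary: Sequence[Hashable], runs: Sequence[Tuple[int, int]]) -> List[Hashable]:
    """Invert both steps to recover the original column values."""
    out: List[Hashable] = []
    for index, length in runs:
        out.extend([dictionary[index]] * length)
    return out


if __name__ == "__main__":
    # A low-cardinality column, the kind that benefits most from these encodings.
    country = ["US", "US", "US", "DE", "DE", "US", "FR", "FR"]
    dictionary, indices = dictionary_encode(country)
    runs = run_length_encode(indices)
    print(dictionary)  # ['US', 'DE', 'FR']
    print(runs)        # [(0, 3), (1, 2), (0, 1), (2, 2)]
    assert decode(dictionary, runs) == country
```

Eight strings shrink to three distinct strings plus four (index, length) pairs. The more repetitive the column, the larger the saving, which is why grouping a column's values together helps compression.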

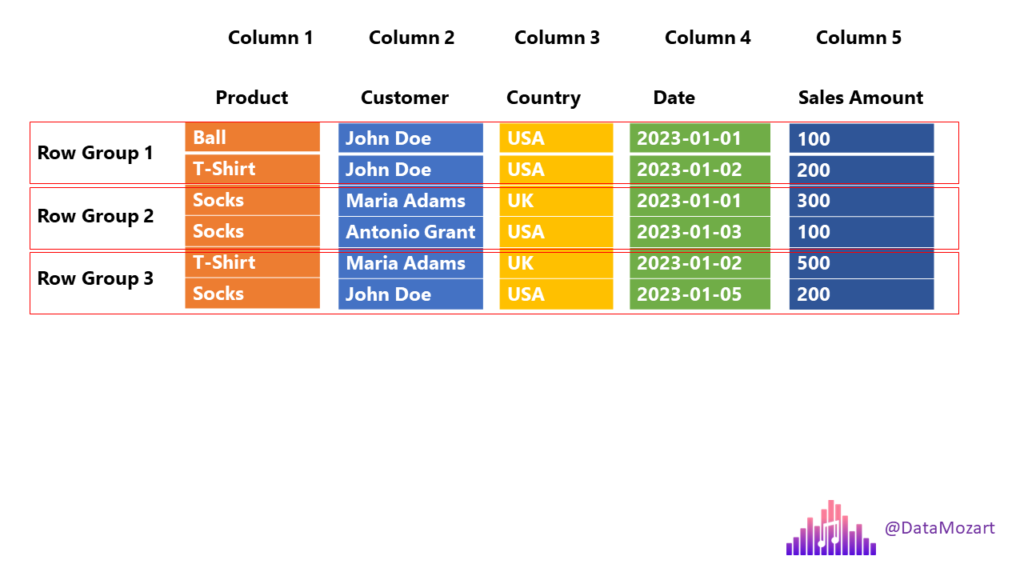

from data-mozart.com

Parquet file format: everything you need to know! (Data Mozart)
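The sizing guidance above (files of roughly 1 GB, row groups matched to the file system block size) comes down to simple arithmetic. The sketch below plans a layout under stated assumptions: the 128 MiB block size is only a common HDFS default, the input is an estimate of the dataset's on-disk Parquet size, and `plan_layout` and `LayoutPlan` are hypothetical names rather than part of any library.

```python
from dataclasses import dataclass

MIB = 1024 ** 2
GIB = 1024 ** 3


@dataclass(frozen=True)
class LayoutPlan:
    num_files: int
    row_groups_per_file: int


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def plan_layout(total_bytes: int,
                target_file_bytes: int = 1 * GIB,
                block_bytes: int = 128 * MIB) -> LayoutPlan:
    """Split a dataset into ~target-sized files whose row groups each fit one block."""
    if min(total_bytes, target_file_bytes, block_bytes) <= 0:
        raise ValueError("sizes must be positive")
    num_files = ceil_div(total_bytes, target_file_bytes)
    # One row group per block, so no row group straddles a block boundary.
    row_groups_per_file = ceil_div(target_file_bytes, block_bytes)
    return LayoutPlan(num_files, row_groups_per_file)


if __name__ == "__main__":
    print(plan_layout(10 * GIB))  # LayoutPlan(num_files=10, row_groups_per_file=8)
```

Raising `block_bytes` to match `target_file_bytes` yields one row group per file, which trades more write-side buffering for the largest possible sequential reads.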

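Limiting the data scan with metadata, as described above, is a form of predicate pushdown: the reader compares a filter against each row group's minimum and maximum statistics before reading any data. Below is a toy model of that decision. Real readers such as Spark or PyArrow do this internally from the footer, and `RowGroupStats` and `row_groups_to_read` are hypothetical stand-ins, not their APIs.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RowGroupStats:
    """Hypothetical stand-in for one row group's min/max statistics on a single column."""
    index: int
    min_value: int
    max_value: int


def row_groups_to_read(stats: List[RowGroupStats], lower: int, upper: int) -> List[int]:
    """Return indices of row groups that may hold values in [lower, upper].

    A row group is skipped only when its [min, max] range cannot overlap the filter.
    """
    return [s.index for s in stats if s.max_value >= lower and s.min_value <= upper]


if __name__ == "__main__":
    footer = [
        RowGroupStats(0, 1, 100),
        RowGroupStats(1, 101, 200),
        RowGroupStats(2, 201, 300),
    ]
    # Filter: 150 <= value <= 250. Row group 0 (max 100) is never read.
    print(row_groups_to_read(footer, 150, 250))  # [1, 2]
```

Pruning works best when the data is sorted or clustered on the filtered column, so that each row group covers a narrow value range.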