Calibration_Curve N_Bins

Calibration curves, also referred to as reliability diagrams (Wilks 1995 [2]), compare how well the probabilistic predictions of a binary classifier are calibrated. A probability calibration curve plots the predicted probabilities against the actual observed frequency of the positive class in a binary classification problem. It is used to check the calibration of a classifier, i.e., how closely the predicted probabilities match the actual probabilities. In scikit-learn, `sklearn.calibration.calibration_curve(y_true, y_prob, *, pos_label=None, n_bins=5, strategy='uniform')` computes the true and predicted probabilities for a calibration curve. The R rms package makes smooth nonparametric calibration curves easy to get, either using an independent external...
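As a rough illustration of the `calibration_curve` signature quoted above, the sketch below calls the function on synthetic data and compares the two binning strategies. The data, the random seed, and the variable names (`y_prob`, `y_true`, `max_gap`) are assumptions made for this example only; the labels are generated so that the probabilities are well calibrated by construction.

```python
import numpy as np
from sklearn.calibration import calibration_curve

# Synthetic data (an assumption for illustration): each label is 1 with
# probability equal to its predicted score, so the scores are calibrated.
rng = np.random.default_rng(0)
y_prob = rng.uniform(0.0, 1.0, size=5000)
y_true = (rng.uniform(0.0, 1.0, size=5000) < y_prob).astype(int)

# strategy="uniform": n_bins equal-width bins over [0, 1].
prob_true, prob_pred = calibration_curve(
    y_true, y_prob, n_bins=5, strategy="uniform"
)

# strategy="quantile": bins hold roughly equal numbers of samples.
prob_true_q, prob_pred_q = calibration_curve(
    y_true, y_prob, n_bins=5, strategy="quantile"
)

# For a well-calibrated model, observed frequency tracks mean prediction.
for predicted, observed in zip(prob_pred, prob_true):
    print(f"mean predicted = {predicted:.3f}, observed frequency = {observed:.3f}")

# Largest per-bin gap between observed frequency and mean prediction.
max_gap = float(np.max(np.abs(prob_true - prob_pred)))
print(f"largest per-bin gap: {max_gap:.3f}")
```

Plotting `prob_pred` on the x-axis against `prob_true` on the y-axis gives the reliability diagram; points near the diagonal suggest good calibration. A larger `n_bins` gives a finer curve but noisier per-bin estimates, since each bin holds fewer samples.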

From ploomber.io

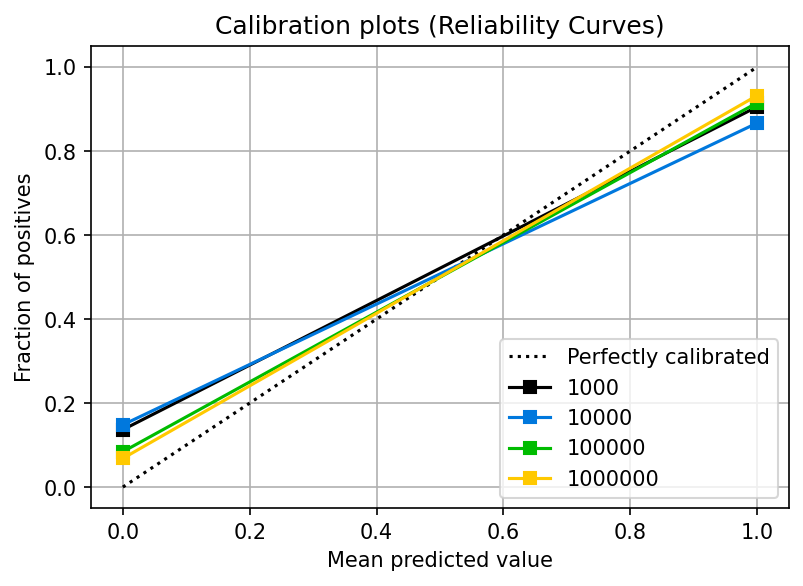

Can I trust my model's probabilities? A deep dive into probability calibration

From www.researchgate.net

Calibration curves of 6-month, 1-year, and 2-year SKD and the decision...

From encord.com

Calibration Curve Definition, Machine Learning Glossary, Encord

From www.researchgate.net

Expected values and standard uncertainties for the calibration curve...

From discourse.datamethods.org

Proper way to get an averaged calibration curve from nested resampling model validation

From www.researchgate.net

The calibration curves in the training dataset (from left to right are...

From scikit-learn.org

Probability Calibration curves - scikit-learn 0.16.1 documentation

From napsterinblue.github.io

Using Calibration Curves to Pick Your Classifier

From www.researchgate.net

Ten calibration curves for each line pair, one for each of the ten bins...

From blog.sepscience.com

Calibration Curves Part 1

From www.researchgate.net

Calibration curve of N I (500.5 nm) for different contents of robusta...

From www.researchgate.net

Calibration curve in the external test cohort. The data is divided into...

From ploomber.io

Can I trust my model's probabilities? A deep dive into probability calibration

From www.researchgate.net

4. Calibration curves for an input and output electrode showing the...

From www.researchgate.net

Calibration curve described by equation A = 0.0159 + 0.766 C, where A...

From www.youtube.com

Calibration Curve Tutorial Lesson 1: Plotting Calibration Data - YouTube

From www.thepharmaeducation.com

How to Make a Calibration Curve in Excel - The Pharma Education

From www.researchgate.net

Example of a calibration curve, which plots the observed frequency of...

From www.researchgate.net

Calibration curves shown on a log 10 scale. (A) Calibration curve...

From lijiancheng0614.github.io

Probability Calibration curves - scikit-learn 0.17 documentation

From openlab.help.agilent.com

Calibration curves (Reporting)

From weightinginbayesianmodels.github.io

Calibration Curve-fitting

From www.researchgate.net

Standard calibration curves using basic calibration method for the...

From scikit-learn.org

Probability Calibration curves - scikit-learn 1.4.dev0 documentation

From www.researchgate.net

Calibration curve.

From chem.libretexts.org

5.4 Linear Regression and Calibration Curves - Chemistry LibreTexts

From www.researchgate.net

Calibration curve formed by GC-MS measurements of nine calibration...

From saxamos.github.io

Probability calibration

From www.researchgate.net

Calibration curves based on the peak-to-base ratio of the 393.4 nm Ca...

From www.researchgate.net

Calibration plots (reliability curve) of the XGBoost, XGBoost +...

From www.researchgate.net

Calibration plot comparing the predicted probabilities divided into ten...

From scikit-learn.org

Probability Calibration curves - scikit-learn 0.19.2 documentation

From www.researchgate.net

Calibration curves at 25°C fitted according to equation 7 (•),...

From www.researchgate.net

Calibration curves of models. (a-d) A calibration curve was plotted to...

From www.researchgate.net

Examples of empirical binning calibration with 10 equal-width bins. As...

From www.youtube.com

Using Excel for a Calibration Curve: How To Create A Linear Standard Curve In Excel - YouTube