PyTorch Embedding From Pretrained. Word embeddings are dense vectors of real numbers, one per word in your vocabulary. In NLP, the features are almost always words. PyTorch's `nn.Embedding` is a simple lookup table that stores embeddings of a fixed dictionary and size; the module is often used to store word embeddings and retrieve them using indices. Rather than training our own word vectors from scratch, we can load pretrained vectors such as GloVe into the `nn.Embedding` layer. From v0.4.0 there is a class method, `nn.Embedding.from_pretrained()`, which makes loading an embedding very comfortable.
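As a minimal sketch of loading pretrained vectors into the lookup table, the example below builds an `nn.Embedding` with `from_pretrained()` and retrieves rows by index. The vocabulary and the 3-dimensional vectors are made up for illustration; with real GloVe vectors the matrix would be read from the downloaded file instead.

```python
import torch
import torch.nn as nn

# Hypothetical 4-word vocabulary with made-up 3-dimensional vectors
# (stand-ins for real pretrained vectors such as GloVe).
vocab = {"<unk>": 0, "cat": 1, "dog": 2, "car": 3}
pretrained = torch.tensor([
    [0.0, 0.0, 0.0],
    [0.1, 0.2, 0.3],
    [0.1, 0.2, 0.4],
    [0.9, 0.8, 0.7],
])

# Row i of the matrix becomes the embedding of index i.
# freeze defaults to True, so these weights are not updated during training.
embedding = nn.Embedding.from_pretrained(pretrained)

# The input is a tensor of indices; the output is the matching rows.
ids = torch.tensor([vocab["cat"], vocab["car"]])
vectors = embedding(ids)  # shape: (2, 3)
```

The layer takes its vocabulary size and embedding dimension from the shape of the matrix, so they do not need to be passed separately.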

From softscients.com

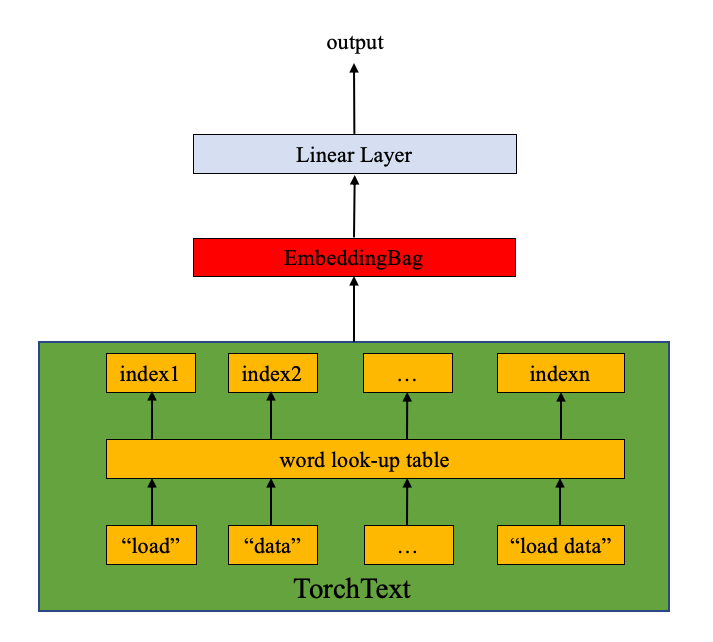

Two questions come up often with pretrained embeddings. The first is fine-tuning: having worked with pretrained embeddings such as GloVe, how do you allow them to be updated during training? `from_pretrained()` freezes the weights by default, so pass `freeze=False` to keep them trainable. The second is weight sharing: in a siamese network of two embedding networks that share weights, both branches can use the same `nn.Embedding` module, so there is only one set of weights to load and update.
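The sketch below illustrates both ideas under stated assumptions: the `SiameseEncoder` class, the mean-pooling encoder, and the cosine-similarity score are hypothetical choices for illustration, and the random matrix stands in for real pretrained vectors. The point is only that one `nn.Embedding`, loaded with `freeze=False`, serves both branches and receives gradients.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SiameseEncoder(nn.Module):
    """Hypothetical siamese model: both inputs go through the same embedding."""

    def __init__(self, pretrained: torch.Tensor):
        super().__init__()
        # freeze=False keeps the pretrained weights trainable (fine-tuning).
        self.embedding = nn.Embedding.from_pretrained(pretrained, freeze=False)

    def encode(self, ids: torch.Tensor) -> torch.Tensor:
        # Average the token vectors of each sequence: (batch, seq) -> (batch, dim).
        return self.embedding(ids).mean(dim=1)

    def forward(self, left_ids: torch.Tensor, right_ids: torch.Tensor) -> torch.Tensor:
        # The same module encodes both sides, so the weights are shared.
        return F.cosine_similarity(self.encode(left_ids), self.encode(right_ids), dim=1)


torch.manual_seed(0)
pretrained = torch.randn(10, 4)  # stand-in for a real pretrained matrix
model = SiameseEncoder(pretrained)

left = torch.tensor([[1, 2, 3]])
right = torch.tensor([[4, 5, 6]])
score = model(left, right)  # one similarity score per pair
score.sum().backward()      # gradients flow into the shared embedding
```

Because the model holds a single embedding module, an optimizer built from `model.parameters()` updates one weight matrix for both branches.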

PyTorch: Learning Natural Language Processing (NLP), Softscients

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers Scaler Topics

From laptrinhx.com

PyTorch internals LaptrinhX

From discuss.pytorch.org

PyTorch pretrained models not working PyTorch Forums

From www.learnpytorch.io

08. PyTorch Paper Replicating Zero to Mastery Learn PyTorch for Deep

From www.aritrasen.com

Deep Learning with Pytorch Text Generation LSTMs 3.3

From www.educba.com

PyTorch Embedding Complete Guide on PyTorch Embedding

From blog.csdn.net

Neural networks: understanding the Embedding layer; using pretrained word vectors in the Embedding layer (CSDN blog)

From blog.ezyang.com

PyTorch internals ezyang’s blog

From www.codeunderscored.com

PyTorch Reciprocal() Code Underscored

From devpost.com

VZPyTorch Devpost

From imagetou.com

Pytorch Train Pretrained Model Image to u

From github.com

GitHub wangjia0602/EBRNPyTorch Embedded Block Residual Network A

From blog.csdn.net

Learning PyTorch: Embedding; exporting word embeddings in PyTorch (CSDN blog)

From barkmanoil.com

Pytorch Nn Embedding? The 18 Correct Answer

From colab.research.google.com

Google Colab

From www.educba.com

PyTorch Model Introduction Overview What is PyTorch Model?

From github.com

Assert 'resize_positional_embedding' prevents creating a model with

From towardsdatascience.com

PyTorch Geometric Graph Embedding by Anuradha Wickramarachchi

From blog.csdn.net

PyTorch embedding layer explained in detail (from principles to practice) (CSDN blog)

From github.com

Embedding layer tensor shape · Issue 99268 · pytorch/pytorch · GitHub

From datapro.blog

Pytorch Installation Guide A Comprehensive Guide with StepbyStep

From github.com

Pretrained_Model_Pytorch/vgg.py at master · fengjiqiang/Pretrained

From blog.csdn.net

What is an embedding (encoding an object as a low-dimensional dense vector): how nn.Embedding works in PyTorch and how to use it (embedding_dim)

From www.youtube.com

[pytorch] Understanding Embedding and LSTM input/output tensor shapes and modeling with them (YouTube)

From zhuanlan.zhihu.com

[Detailed PyTorch Tutorial 10] Visualization in PyTorch with TensorBoard (detailed TensorBoard usage tutorial), Zhihu

From github.com

nn.Embedding.from_pretrained accept tensor of type Long · Issue 86663

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From www.vrogue.co

Guide To Feed Forward Network Using Pytorch With Mnist Dataset www

From coderzcolumn.com

Word Embeddings for PyTorch Text Classification Networks

From python.plainenglish.io

Image Classification with PyTorch by Varrel Tantio Python in Plain

From www.youtube.com

What are PyTorch Embeddings Layers (6.4) YouTube