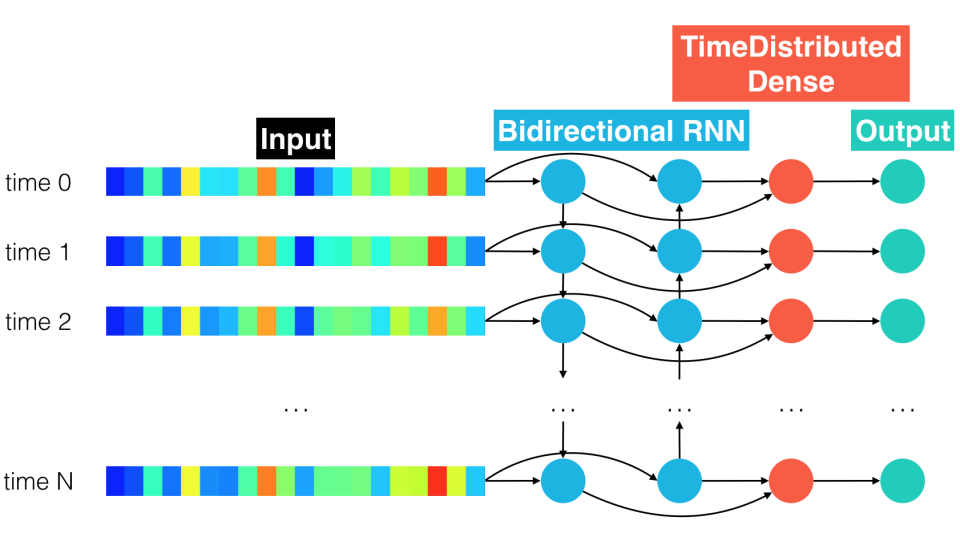

Time Distributed Dense

`keras.layers.TimeDistributed(layer, **kwargs)` is a wrapper that applies a layer to every temporal slice of an input. In other words, it applies the same transformation to each item in a sequence of inputs. When a Dense layer is wrapped and placed on top of an LSTM, TimeDistributed applies the same Dense layer (same weights) to the LSTM's outputs one time step at a time. Dense in Keras already applies its fully connected layer to the last dimension of its input, so TimeDistributed is only necessary for layers that cannot handle additional dimensions in their implementation. The wrapper works with several input types, including images. To use TimeDistributed, the input must be a sequence through time so that the same layer can be applied at every step.
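The weight sharing described above can be illustrated without any framework. The following is a minimal pure-Python sketch, not Keras code: the helper names `dense` and `time_distributed` and all numeric values are hypothetical and chosen only to show one set of weights being reused across time steps.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer without activation: y = x @ W + b."""
    return [
        sum(xi * w_row[j] for xi, w_row in zip(x, weights)) + bias[j]
        for j in range(len(bias))
    ]


def time_distributed(
    layer: Callable[[Vector], Vector], sequence: List[Vector]
) -> List[Vector]:
    """Apply the same layer (same weights) to every time step."""
    return [layer(step) for step in sequence]


# Hypothetical toy values: 3 time steps, 2 features each, projected to 1 unit.
weights = [[1.0], [2.0]]  # shape (features=2, units=1)
bias = [0.5]
# Stand-in for the per-step outputs of a recurrent layer.
lstm_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs = time_distributed(lambda step: dense(step, weights, bias), lstm_outputs)
print(outputs)  # [[1.5], [2.5], [3.5]]
```

The output keeps one entry per time step, and every entry is computed from the same `weights` and `bias`. In Keras the corresponding pattern would typically be a `TimeDistributed(Dense(1))` wrapper placed after a recurrent layer that returns its full output sequence.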

From seanvonb.github.io

Speech Recognizer

From www.researchgate.net

Pixel RCNN convolutional layers. Firstly, output from...

From zhuanlan.zhihu.com

Batch Normalization (BN) Explained (Zhihu)

From github.com

LSTM fully connected architecture · Issue 4149 · keras-team/keras · GitHub

From stackoverflow.com

keras Confused about how to implement timedistributed LSTM + LSTM

From towardsdatascience.com

Difference between Local Response Normalization and Batch Normalization

From samueldavidbryan.github.io

Translating ASL Fingerspelling

From ubuntuask.com

How to Implement A TimeDistributed Dense (Tdd) Layer In Python in 2024?

From zhuanlan.zhihu.com

Representation Learning Methods for Time-Series Data (4): LSTM Autoencoder (Zhihu)

From seanvonb.github.io

Speech Recognizer

From discuss.pytorch.org

Efficient Time Distributed Dense PyTorch Forums

From zhuanlan.zhihu.com

[Mini Tutorial] How to Correctly Use the Time Distributed Layer in LSTM Networks (Zhihu)

From www.scribd.com

Distributed Time Synchronization in Ultra Dense Networks PDF

From www.researchgate.net

Block diagram of the line based time distributed architecture

From www.mi-research.net

Continuous-time Distributed Heavy-ball Algorithm for Distributed Convex

From www.researchgate.net

The structure of time distributed convolutional gated recurrent unit

From blog.finxter.com

Deep Forecasting Bitcoin with LSTM Architectures Be on the Right Side

From www.researchgate.net

Architecture of the multivariate forecast model. TimeDistributed

From www.pythonfixing.com

[FIXED] How to implement timedistributed dense (TDD) layer in PyTorch

From www.researchgate.net

F1Score graph against (a) hidden units in GRU layer, (b) hidden units

From github.com

Keras TimeDistributed on a Model creates duplicate layers, and is

From blog.csdn.net

ts13_install tf env_RNN_Bidirectional LSTM_GRU_Minimal gated

From www.semanticscholar.org

Figure 5 from A sublinear time distributed algorithm for minimum

From www.vrogue.co

Architectures Of The Cnn Cnn Lstm Vanilla Lstm And St vrogue.co

From www.tcom242242.net

[Keras, Diagrams] Notes on How RepeatVector and TimeDistributed Operate

From www.web-dev-qa-db-fra.com

python: How to configure 1DConvolution and LSTM in Keras

From www.researchgate.net

Time Distributed Stacked LSTM Model

From www.researchgate.net

Model summary of LSTM layers with Time Distributed Dense layer

From www.researchgate.net

Structure of Time Distributed CNN model

From www.researchgate.net

Detailed architecture with visualization of timedistributed layer

From 9to5answer.com

[Solved] TimeDistributed(Dense) vs Dense in Keras Same 9to5Answer

From tech.smile.eu

How to work with Time Distributed data in a neural network Smile's

From blog.csdn.net

Flatten in CNN-LSTM: how to feed the flatten output into an LSTM (CSDN blog)

From builtin.com

Fully Connected Layer vs Convolutional Layer Explained Built In

From www.researchgate.net

Architecture of the multivariate forecast model. TimeDistributed

From rajan.sh

Shapeshift