Markov Chain Questions And Answers

Markov chains are a relatively simple but very interesting and useful class of random processes. A Markov chain describes a system whose state changes over time: it is a process with a discrete state space that has the Markov property. Usually Markov chains are also defined to have discrete time, but definitions vary slightly between textbooks.

Typical exercise excerpts follow. Problem 2.5: Let \(\{X_n\}_{n\geq 0}\) be a stochastic … Show that \(\{Y_n\}_{n\geq 0}\) is a homogeneous Markov chain and determine the transition probabilities. When the system is in state 0, it stays in that state with probability 0.4. Consider the Markov chain in Figure 11.17. Consider the following continuous-time Markov chain: (a) obtain the transition rate matrix. Exercise 22.1 (subchain from a Markov chain): Assume \(X=\{X_n:n\geq 0\}\) is a Markov chain and let \(\{n_k:k\geq 0\}\) be an unbounded … There are two recurrent classes, \(R_1=\{1,2\}\) and \(R_2=\{5,6,7\}\).
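
The statement about two recurrent classes can be checked mechanically for any finite chain: the communicating classes are the groups of mutually reachable states, and in a finite chain a class is recurrent exactly when it is closed (no transition leaves it). The sketch below uses plain Python with no third-party libraries. It assumes a hypothetical 7-state transition matrix, chosen only so that its recurrent classes come out as \(R_1=\{1,2\}\) and \(R_2=\{5,6,7\}\); it is not the matrix from the original exercise, which is not shown here, and `classify` is an illustrative helper name.

```python
def reachable(P, start):
    """Return the set of states reachable from `start` in zero or more steps."""
    seen = {start}
    stack = [start]
    while stack:
        i = stack.pop()
        for j, p in enumerate(P[i]):
            if p > 0 and j not in seen:
                seen.add(j)
                stack.append(j)
    return seen


def classify(P):
    """Split the states of a finite chain into communicating classes and
    return (recurrent_classes, transient_classes) as lists of sets."""
    n = len(P)
    reach = [reachable(P, i) for i in range(n)]
    classes, assigned = [], set()
    for i in range(n):
        if i in assigned:
            continue
        # i and j communicate iff each is reachable from the other.
        cls = {j for j in reach[i] if i in reach[j]}
        assigned |= cls
        classes.append(cls)
    recurrent, transient = [], []
    for cls in classes:
        # Finite chain: a class is recurrent iff it is closed, i.e.
        # nothing outside the class is reachable from inside it.
        closed = all(reach[i] <= cls for i in cls)
        (recurrent if closed else transient).append(cls)
    return recurrent, transient


# Hypothetical 7-state chain; states are labelled 1..7 (row i is state i+1).
P = [
    [0.5, 0.5, 0,   0,   0,   0,   0  ],  # 1 -> {1, 2}
    [1.0, 0,   0,   0,   0,   0,   0  ],  # 2 -> 1
    [0.5, 0,   0,   0.5, 0,   0,   0  ],  # 3 -> {1, 4}
    [0,   0,   0.5, 0,   0.5, 0,   0  ],  # 4 -> {3, 5}
    [0,   0,   0,   0,   0,   1.0, 0  ],  # 5 -> 6
    [0,   0,   0,   0,   0,   0,   1.0],  # 6 -> 7
    [0,   0,   0,   0,   0.5, 0.5, 0  ],  # 7 -> {5, 6}
]
recurrent, transient = classify(P)
print([sorted(s + 1 for s in c) for c in recurrent])  # [[1, 2], [5, 6, 7]]
print([sorted(s + 1 for s in c) for c in transient])  # [[3, 4]]
```

States 3 and 4 communicate with each other but can leak into \(R_1\) or \(R_2\), so their class is not closed and is therefore transient.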

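For the continuous Markov chain exercise, part (a) asks for the transition rate matrix (the generator) \(Q\). Under the usual convention, each off-diagonal entry \(q_{ij}\) is the rate of jumping from state \(i\) to state \(j\), and each diagonal entry is \(q_{ii}=-\sum_{j\neq i}q_{ij}\), so every row sums to zero; dividing a row's off-diagonal rates by \(-q_{ii}\) gives the embedded jump chain. The exercise's actual rates are not given here, so the three-state rates below are hypothetical, and `rate_matrix` and `jump_chain` are illustrative helper names, not a library API.

```python
def rate_matrix(n, rates):
    """Build the transition rate matrix Q of an n-state continuous-time chain.

    `rates` maps (i, j), i != j, to the rate q_ij of jumping from i to j.
    Diagonal entries are q_ii = -(sum of off-diagonal rates in row i),
    so every row of Q sums to zero.
    """
    Q = [[0.0] * n for _ in range(n)]
    for (i, j), q in rates.items():
        if i == j or q < 0:
            raise ValueError(f"invalid rate for ({i}, {j}): {q}")
        Q[i][j] = q
    for i in range(n):
        Q[i][i] = -sum(Q[i][j] for j in range(n) if j != i)
    return Q


def jump_chain(Q):
    """Transition matrix of the embedded jump chain: P[i][j] = q_ij / (-q_ii)
    for j != i. A state with q_ii == 0 never leaves, so it gets P[i][i] = 1."""
    n = len(Q)
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        out = -Q[i][i]  # total exit rate; mean holding time is 1 / out
        if out == 0:
            P[i][i] = 1.0
            continue
        for j in range(n):
            if j != i:
                P[i][j] = Q[i][j] / out
    return P


# Hypothetical rates for a 3-state chain.
Q = rate_matrix(3, {(0, 1): 2.0, (0, 2): 1.0, (1, 0): 3.0, (2, 0): 0.5, (2, 1): 0.5})
J = jump_chain(Q)
print(Q)  # [[-3.0, 2.0, 1.0], [3.0, -3.0, 0.0], [0.5, 0.5, -1.0]]
```

Keeping \(Q\) separate from the jump chain mirrors the two usual descriptions of a continuous-time chain: exponential holding times with rate \(-q_{ii}\), followed by a jump chosen according to the jump-chain row.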

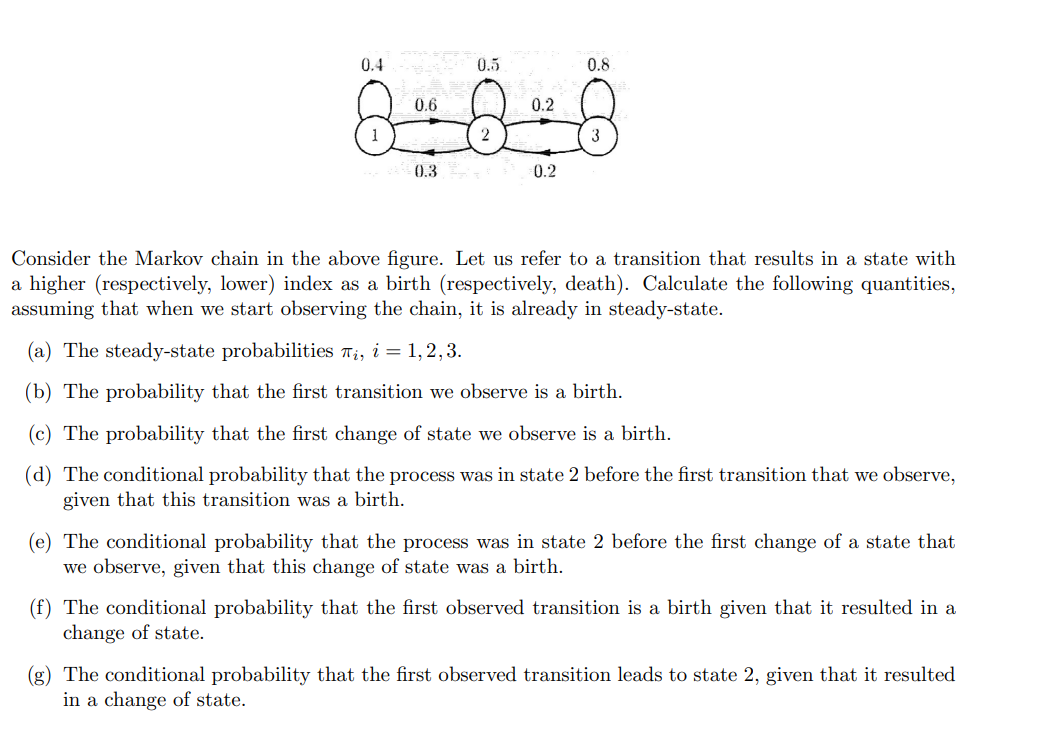
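
Exercise 22.1 is truncated, so its exact hypotheses are unknown. As a hedged illustration, take the special case of equally spaced indices \(n_k = mk\) for a time-homogeneous chain \(X\) with transition matrix \(P\): by the Chapman–Kolmogorov equations, \(Y_k = X_{mk}\) is again a homogeneous Markov chain with transition matrix \(P^m\). The two-state matrix below is hypothetical except for the entry \(P_{00}=0.4\), which echoes the state that stays put with probability 0.4; `mat_mul` and `mat_pow` are illustrative helpers in plain Python.

```python
def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(P, m):
    """Return the m-step transition matrix P**m (m >= 0) by repeated squaring."""
    n = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    base = P
    while m > 0:
        if m & 1:  # this power of two contributes to the product
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        m >>= 1
    return result


# Hypothetical two-state chain: only P[0][0] = 0.4 comes from the text;
# the other entries are assumed for illustration (each row sums to 1).
P = [[0.4, 0.6],
     [0.3, 0.7]]

m = 2
P_sub = mat_pow(P, m)  # transition matrix of the subchain Y_k = X_{m k}
print(P_sub)           # approximately [[0.34, 0.66], [0.33, 0.67]]
```

With deterministic, strictly increasing but unequally spaced \(n_k\), the subchain is still Markov, but the step from \(k\) to \(k+1\) uses \(P^{n_{k+1}-n_k}\). It is therefore homogeneous only when the gaps are constant.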
