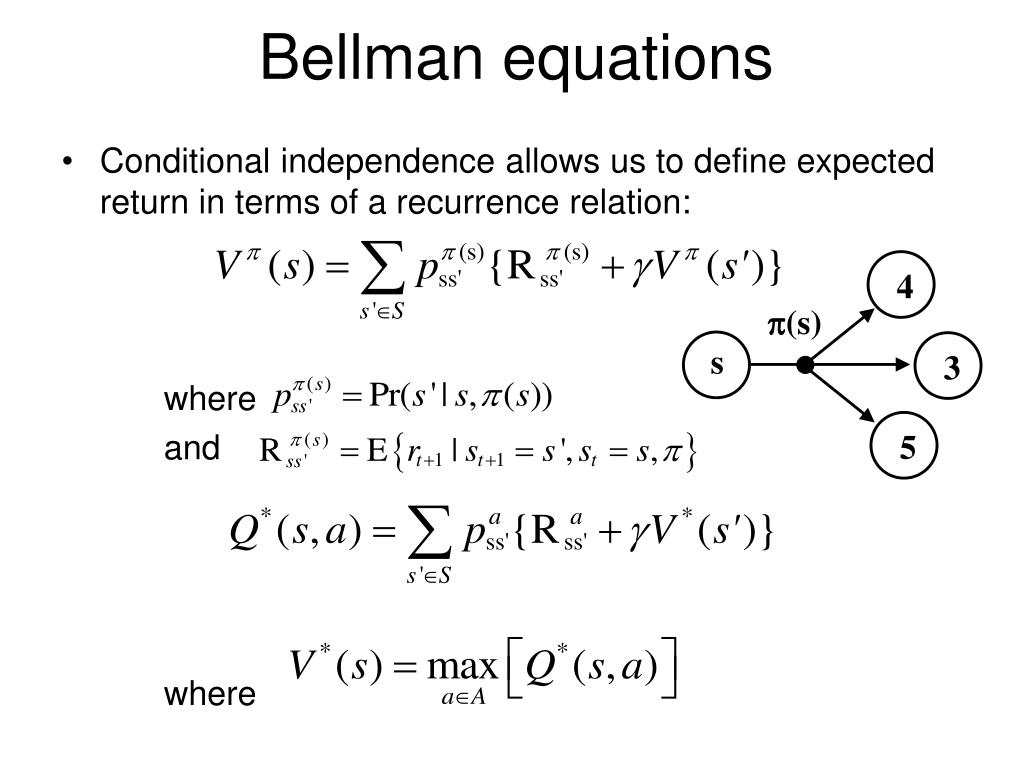

Bellman Equations . Current state where the agent is in the environment. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. If you were to measure the value of the current state you are in, how would you do this?. Numeric representation of a state which helps the agent to find its path. bellman equations, named after the creator of dynamic programming richard e. so, to solve this problem we should use bellman equation: V (s)=maxa(r (s,a)+ γv (s’)) state (s): After taking action (a) at state (s) the agent reaches s’. understanding the bellman equations. the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be.

from www.slideserve.com

so, to solve this problem we should use bellman equation: After taking action (a) at state (s) the agent reaches s’. If you were to measure the value of the current state you are in, how would you do this?. Current state where the agent is in the environment. understanding the bellman equations. V (s)=maxa(r (s,a)+ γv (s’)) state (s): bellman equations, named after the creator of dynamic programming richard e. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which helps the agent to find its path. the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be.

PPT Decision Making Under Uncertainty Lec 8 Reinforcement Learning

Bellman Equations so, to solve this problem we should use bellman equation: Current state where the agent is in the environment. Numeric representation of a state which helps the agent to find its path. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. bellman equations, named after the creator of dynamic programming richard e. After taking action (a) at state (s) the agent reaches s’. understanding the bellman equations. If you were to measure the value of the current state you are in, how would you do this?. V (s)=maxa(r (s,a)+ γv (s’)) state (s): the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. so, to solve this problem we should use bellman equation:

From stackoverflow.com

reinforcement learning Why Gt+1 = v(St+1) in Bellman Equation for Bellman Equations the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. Numeric representation of a state which helps the agent to find its path. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Current state where the agent. Bellman Equations.

From www.assemblyai.com

Reinforcement Learning With (Deep) QLearning Explained Bellman Equations Numeric representation of a state which helps the agent to find its path. After taking action (a) at state (s) the agent reaches s’. V (s)=maxa(r (s,a)+ γv (s’)) state (s): the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. so, to solve this problem. Bellman Equations.

From dnddnjs.gitbook.io

Bellman Optimality Equation Fundamental of Reinforcement Learning Bellman Equations learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which helps the agent to find its path. After taking action (a) at state (s) the agent reaches s’. understanding the bellman equations. bellman equations, named after the creator of dynamic programming richard e.. Bellman Equations.

From www.youtube.com

Bellman Equations, Dynamic Programming, Generalized Policy Iteration Bellman Equations the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which helps the agent to find its path. V (s)=maxa(r (s,a)+ γv (s’)). Bellman Equations.

From ha5ha6.github.io

Bellman Equation Jiexin Wang Bellman Equations bellman equations, named after the creator of dynamic programming richard e. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which helps the agent to find its path. the objective of this article is to offer the first steps towards deriving the bellman. Bellman Equations.

From www.slideshare.net

Lecture22 Bellman Equations If you were to measure the value of the current state you are in, how would you do this?. After taking action (a) at state (s) the agent reaches s’. so, to solve this problem we should use bellman equation: bellman equations, named after the creator of dynamic programming richard e. Numeric representation of a state which helps. Bellman Equations.

From stats.stackexchange.com

machine learning bellman equation mathmatics Cross Validated Bellman Equations bellman equations, named after the creator of dynamic programming richard e. If you were to measure the value of the current state you are in, how would you do this?. After taking action (a) at state (s) the agent reaches s’. so, to solve this problem we should use bellman equation: the objective of this article is. Bellman Equations.

From www.youtube.com

Bellman equation made easy and clear YouTube Bellman Equations so, to solve this problem we should use bellman equation: If you were to measure the value of the current state you are in, how would you do this?. Numeric representation of a state which helps the agent to find its path. V (s)=maxa(r (s,a)+ γv (s’)) state (s): the objective of this article is to offer the. Bellman Equations.

From www.youtube.com

The Bellman Equations 3 YouTube Bellman Equations After taking action (a) at state (s) the agent reaches s’. Numeric representation of a state which helps the agent to find its path. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we should use bellman equation: V (s)=maxa(r (s,a)+ γv (s’)) state. Bellman Equations.

From huggingface.co

An Introduction to QLearning Part 1 Bellman Equations V (s)=maxa(r (s,a)+ γv (s’)) state (s): If you were to measure the value of the current state you are in, how would you do this?. Current state where the agent is in the environment. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which. Bellman Equations.

From www.youtube.com

Intro RL I 3 Equations de Bellman YouTube Bellman Equations Numeric representation of a state which helps the agent to find its path. If you were to measure the value of the current state you are in, how would you do this?. so, to solve this problem we should use bellman equation: After taking action (a) at state (s) the agent reaches s’. the objective of this article. Bellman Equations.

From www.youtube.com

Bellman Equations YouTube Bellman Equations so, to solve this problem we should use bellman equation: Numeric representation of a state which helps the agent to find its path. If you were to measure the value of the current state you are in, how would you do this?. bellman equations, named after the creator of dynamic programming richard e. the objective of this. Bellman Equations.

From studylib.net

Bellman Equations for the DMP Search Model Simplified Worker Bellman Equations understanding the bellman equations. Current state where the agent is in the environment. the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. V (s)=maxa(r (s,a)+ γv (s’)) state (s): bellman equations, named after the creator of dynamic programming richard e. Numeric representation of a. Bellman Equations.

From www.slideserve.com

PPT CSE 473 Markov Decision Processes PowerPoint Presentation, free Bellman Equations learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we should use bellman equation: Numeric representation of a state which helps the agent to find its path. After taking action (a) at state (s) the agent reaches s’. Current state where the agent is. Bellman Equations.

From www.youtube.com

The Bellman Equations 2 YouTube Bellman Equations bellman equations, named after the creator of dynamic programming richard e. V (s)=maxa(r (s,a)+ γv (s’)) state (s): learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Numeric representation of a state which helps the agent to find its path. understanding the bellman equations. so, to solve. Bellman Equations.

From www.geeksforgeeks.org

Bellman Equation Bellman Equations Current state where the agent is in the environment. the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. Numeric representation of a state which helps the agent to find its path. bellman equations, named after the creator of dynamic programming richard e. V (s)=maxa(r (s,a)+. Bellman Equations.

From medium.com

Ch 12Reinforcement learning Complete Guide towardsAGI Bellman Equations so, to solve this problem we should use bellman equation: learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. understanding the bellman equations. bellman equations, named after the creator of dynamic programming richard e. If you were to measure the value of the current state you are. Bellman Equations.

From www.youtube.com

Continuous Time Dynamic Programming The HamiltonJacobiBellman Bellman Equations If you were to measure the value of the current state you are in, how would you do this?. so, to solve this problem we should use bellman equation: learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. understanding the bellman equations. bellman equations, named after the. Bellman Equations.

From towardsdatascience.com

How the Bellman equation works in Deep RL? Towards Data Science Bellman Equations learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we should use bellman equation: understanding the bellman equations. V (s)=maxa(r (s,a)+ γv (s’)) state (s): the objective of this article is to offer the first steps towards deriving the bellman equation, which. Bellman Equations.

From neptune.ai

Markov Decision Process in Reinforcement Learning Everything You Need Bellman Equations Current state where the agent is in the environment. bellman equations, named after the creator of dynamic programming richard e. V (s)=maxa(r (s,a)+ γv (s’)) state (s): understanding the bellman equations. After taking action (a) at state (s) the agent reaches s’. If you were to measure the value of the current state you are in, how would. Bellman Equations.

From www.slideserve.com

PPT Lirong Xia PowerPoint Presentation, free download ID4959047 Bellman Equations V (s)=maxa(r (s,a)+ γv (s’)) state (s): so, to solve this problem we should use bellman equation: the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman.. Bellman Equations.

From www.slideserve.com

PPT Decision Making Under Uncertainty Lec 8 Reinforcement Learning Bellman Equations understanding the bellman equations. bellman equations, named after the creator of dynamic programming richard e. Numeric representation of a state which helps the agent to find its path. If you were to measure the value of the current state you are in, how would you do this?. learn the basics of markov decision processes (mdps) and how. Bellman Equations.

From www.youtube.com

How to use Bellman Equation Reinforcement Learning Bellman Equation Bellman Equations If you were to measure the value of the current state you are in, how would you do this?. Numeric representation of a state which helps the agent to find its path. Current state where the agent is in the environment. understanding the bellman equations. so, to solve this problem we should use bellman equation: bellman equations,. Bellman Equations.

From www.youtube.com

Clear Explanation of Value Function and Bellman Equation (PART I Bellman Equations bellman equations, named after the creator of dynamic programming richard e. understanding the bellman equations. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. If you were to measure the value of the current state you are in, how would you do this?. Numeric representation of a state. Bellman Equations.

From www.youtube.com

HamiltonJacobiBellman equation YouTube Bellman Equations bellman equations, named after the creator of dynamic programming richard e. If you were to measure the value of the current state you are in, how would you do this?. Current state where the agent is in the environment. After taking action (a) at state (s) the agent reaches s’. understanding the bellman equations. learn the basics. Bellman Equations.

From www.youtube.com

Bellman Principle of Optimality Reinforcement Learning Machine Bellman Equations the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. V (s)=maxa(r (s,a)+ γv (s’)) state (s): Current state where the agent is in the environment. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. If you. Bellman Equations.

From ailephant.com

Overview of Deep Reinforcement Learning AILEPHANT Bellman Equations Current state where the agent is in the environment. Numeric representation of a state which helps the agent to find its path. bellman equations, named after the creator of dynamic programming richard e. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. the objective of this article is. Bellman Equations.

From www.youtube.com

The Bellman Equations 1 YouTube Bellman Equations learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we should use bellman equation: After taking action (a) at state (s) the agent reaches s’. bellman equations, named after the creator of dynamic programming richard e. If you were to measure the value. Bellman Equations.

From int8.io

Bellman Equations, Dynamic Programming and Reinforcement Learning (part Bellman Equations After taking action (a) at state (s) the agent reaches s’. If you were to measure the value of the current state you are in, how would you do this?. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. V (s)=maxa(r (s,a)+ γv (s’)) state (s): understanding the bellman. Bellman Equations.

From www.slideserve.com

PPT Chapter 4 Dynamic Programming PowerPoint Presentation, free Bellman Equations bellman equations, named after the creator of dynamic programming richard e. Numeric representation of a state which helps the agent to find its path. so, to solve this problem we should use bellman equation: learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. Current state where the agent. Bellman Equations.

From int8.io

Bellman Equations, Dynamic Programming and Reinforcement Learning (part Bellman Equations Current state where the agent is in the environment. Numeric representation of a state which helps the agent to find its path. V (s)=maxa(r (s,a)+ γv (s’)) state (s): understanding the bellman equations. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we. Bellman Equations.

From dokumen.tips

(PDF) Bellman Equations and Dynamic Programming · Bellman Equations and Bellman Equations Current state where the agent is in the environment. V (s)=maxa(r (s,a)+ γv (s’)) state (s): understanding the bellman equations. so, to solve this problem we should use bellman equation: bellman equations, named after the creator of dynamic programming richard e. After taking action (a) at state (s) the agent reaches s’. the objective of this. Bellman Equations.

From dotkay.github.io

Bellman Expectation Equations Action Value Function Bellman Equations Numeric representation of a state which helps the agent to find its path. understanding the bellman equations. After taking action (a) at state (s) the agent reaches s’. the objective of this article is to offer the first steps towards deriving the bellman equation, which can be considered to be. learn the basics of markov decision processes. Bellman Equations.

From www.numerade.com

SOLVED The Bellman Equations are V(s) = maxQ*(s,a) Q*(s,a) = D*T(s,a Bellman Equations learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. bellman equations, named after the creator of dynamic programming richard e. so, to solve this problem we should use bellman equation: If you were to measure the value of the current state you are in, how would you do. Bellman Equations.

From www.youtube.com

Value Functions and Bellman Equations in Reinforcement Learning Bellman Equations Current state where the agent is in the environment. learn the basics of markov decision processes (mdps) and how to solve them using dynamic programming and bellman. so, to solve this problem we should use bellman equation: After taking action (a) at state (s) the agent reaches s’. Numeric representation of a state which helps the agent to. Bellman Equations.