PyTorch set_epoch

PyTorch optimizers implement algorithms that adjust model weights based on the outcome of a loss function. To change the learning rate as training progresses, you can use a learning rate scheduler such as `torch.optim.lr_scheduler.StepLR`.

In distributed mode, calling the `set_epoch()` method on the `DistributedSampler` at the beginning of each epoch, before creating the `DataLoader` iterator, is necessary to make shuffling work properly across multiple epochs. Based on the docs, `set_epoch` is what guarantees a different shuffling order in each epoch; without it, the same ordering is used every time. The call belongs at the top of the epoch loop, for example directly inside `for epoch in range(0, epochs + 1):` (note that this range runs `epochs + 1` iterations).

If you do want to pass the epoch number into the dataset itself, as in `Dataset = customimagedataset(epoch=epoch, annotations_file, img_dir, transform, …`, you could instead add a custom method to the dataset class that stores the epoch in an attribute, and call that method once per epoch.

With PyTorch Lightning, you maintain control over all aspects via PyTorch code in your `LightningModule`. The Trainer uses best practices embedded by …
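The effect of `set_epoch()` on shuffling can be checked on a single machine. The following is a minimal sketch, not a full distributed setup: it assumes a toy 16-sample dataset and passes `num_replicas` and `rank` explicitly so that no process group has to be initialized. The helper `indices_for_epoch` is a hypothetical name used only for illustration.

```python
import torch
from torch.utils.data import DataLoader, DistributedSampler, TensorDataset

# Toy dataset (assumption): 16 samples whose values equal their indices.
dataset = TensorDataset(torch.arange(16))

# Passing num_replicas and rank explicitly avoids needing torch.distributed
# to be initialized; in real training they come from the process group.
sampler = DistributedSampler(dataset, num_replicas=2, rank=0, shuffle=True, seed=0)
loader = DataLoader(dataset, batch_size=4, sampler=sampler)

# Without set_epoch(), the sampler stays at epoch 0 and repeats its order.
without_set_epoch = [list(sampler) for _ in range(2)]


def indices_for_epoch(epoch):
    """Hypothetical helper: set the epoch, then collect this rank's samples."""
    sampler.set_epoch(epoch)  # must happen before the loader is iterated
    return [int(x) for (batch,) in loader for x in batch]


orders = [indices_for_epoch(epoch) for epoch in range(3)]

print("same order without set_epoch:", without_set_epoch[0] == without_set_epoch[1])
for epoch, order in enumerate(orders):
    print(f"epoch {epoch}: {order}")
```

Each rank sees only its own share of the indices (8 of the 16 here), and the shuffle is derived from the sampler's seed plus the current epoch, which is why the same epoch number reproduces the same order.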

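The dataset-side approach and the `StepLR` scheduler can be combined in one epoch loop. This is a sketch under stated assumptions: `EpochAwareDataset` and its `set_epoch` method are hypothetical names (not part of PyTorch), the model is a one-layer placeholder, and the `step_size` and `gamma` values are arbitrary.

```python
import torch
from torch.utils.data import Dataset


class EpochAwareDataset(Dataset):
    """Hypothetical dataset that remembers the current epoch."""

    def __init__(self, data):
        self.data = data
        self.epoch = 0

    def set_epoch(self, epoch):
        # Custom method (not a PyTorch API): store the epoch on the instance.
        self.epoch = epoch

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        # The stored epoch could drive epoch-dependent augmentation;
        # here it is simply returned alongside the sample.
        return self.data[idx], self.epoch


dataset = EpochAwareDataset(torch.arange(4, dtype=torch.float32).unsqueeze(1))
model = torch.nn.Linear(1, 1)  # placeholder model
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
# Multiply the learning rate by gamma every step_size epochs (values assumed).
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

seen_epochs, lrs = [], []
for epoch in range(4):
    dataset.set_epoch(epoch)  # update the dataset before reading from it
    lrs.append(optimizer.param_groups[0]["lr"])
    for i in range(len(dataset)):
        x, sample_epoch = dataset[i]
        loss = model(x).pow(2).sum()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    seen_epochs.append(sample_epoch)
    scheduler.step()  # once per epoch, after the optimizer steps

print(seen_epochs, lrs)
```

When the dataset is wrapped in a `DataLoader` with `num_workers > 0`, each worker process holds its own copy of the dataset, so the attribute should be set before the loader's iterator is created for that epoch; with `persistent_workers=True` the workers may not see later updates.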
from discuss.pytorch.org

Dataset = customimagedataset(epoch=epoch, annotations_file, img_dir, transform,. you maintain control over all aspects via pytorch code in your lightningmodule. calling the set_epoch() method on the distributedsampler at the beginning of each epoch is necessary to make shuffling. for epoch in range(0, epochs + 1): you can use learning rate scheduler torch.optim.lr_scheduler.steplr. in distributed mode, calling the set_epoch() method at the beginning of each epoch before creating the dataloader iterator is. we’ll look at pytorch optimizers, which implement algorithms to adjust model weights based on the outcome of a loss function. The trainer uses best practices embedded by. now if you did want to pass the epoch number into the dataset, you could do that using a custom method inside the dataset class that sets a class variable. based on the docs it’s necessary to use set_epoch to guarantee a different shuffling order:

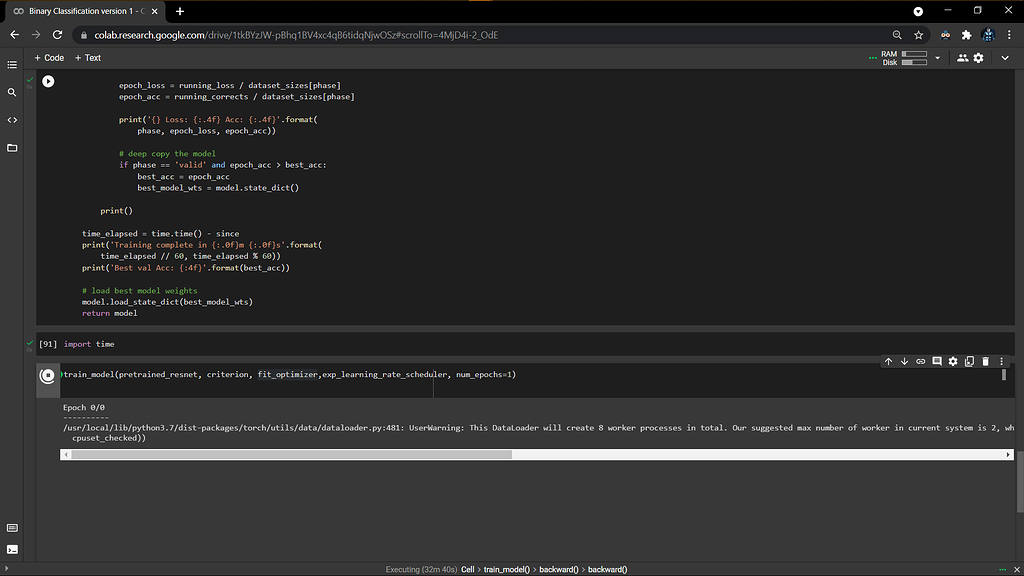

My model got stuck at first epoch PyTorch Forums

Pytorch Set_Epoch now if you did want to pass the epoch number into the dataset, you could do that using a custom method inside the dataset class that sets a class variable. you can use learning rate scheduler torch.optim.lr_scheduler.steplr. based on the docs it’s necessary to use set_epoch to guarantee a different shuffling order: Dataset = customimagedataset(epoch=epoch, annotations_file, img_dir, transform,. now if you did want to pass the epoch number into the dataset, you could do that using a custom method inside the dataset class that sets a class variable. you maintain control over all aspects via pytorch code in your lightningmodule. in distributed mode, calling the set_epoch() method at the beginning of each epoch before creating the dataloader iterator is. The trainer uses best practices embedded by. we’ll look at pytorch optimizers, which implement algorithms to adjust model weights based on the outcome of a loss function. for epoch in range(0, epochs + 1): calling the set_epoch() method on the distributedsampler at the beginning of each epoch is necessary to make shuffling.
