Torch Nn Kldivloss. The resources collected here cover `torch.nn.KLDivLoss` from several angles: a forum thread where users ask and answer questions about implementing and using KL divergence loss in PyTorch; a guide to computing the loss with the `torch.nn.functional.kl_div` function (as with `NLLLoss`, the input is expected to contain log-probabilities); an introduction to computing and using KL divergence, a measure of the difference between two probability distributions; a discussion thread about why the KL divergence loss can come out negative in PyTorch, and how to fix it; and the documentation for the `KLDivLossImpl` class, which lists the methods it provides, examples of how to use `KLDivLoss`, and its parameters and return type.
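The negative-loss question mentioned above usually comes down to what is passed as the input. The following is a minimal pure-Python sketch, not PyTorch code: it assumes the pointwise formula `target * (log(target) - input)` given in the PyTorch documentation for `kl_div`, summed over all elements. The helper names `softmax` and `kl_div_sum` are hypothetical and exist only for this illustration.

```python
import math

def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_div_sum(input_log_probs, target_probs):
    """Hypothetical helper: sum of target * (log(target) - input).

    `input_log_probs` is assumed to hold LOG-probabilities and
    `target_probs` plain probabilities. Terms with target == 0
    contribute nothing.
    """
    total = 0.0
    for log_q, p in zip(input_log_probs, target_probs):
        if p > 0.0:
            total += p * (math.log(p) - log_q)
    return total

target = softmax([2.0, 1.0, 0.1])  # reference distribution
pred = softmax([0.5, 1.5, 0.3])    # model distribution

# Correct usage: the input holds log-probabilities, so the result is a
# true KL divergence and cannot be negative.
correct = kl_div_sum([math.log(q) for q in pred], target)

# Common mistake: passing raw probabilities as the input. Each term becomes
# p * (log(p) - q), which is negative for any probability p below 1.
wrong = kl_div_sum(pred, target)

print(f"log-probabilities as input: {correct:.4f}")
print(f"raw probabilities as input: {wrong:.4f}")
```

In PyTorch itself, the corresponding fix is to pass log-probabilities as the first argument, for example the output of `log_softmax`, as in `torch.nn.functional.kl_div(log_probs, target, reduction="sum")`.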

From zhuanlan.zhihu.com


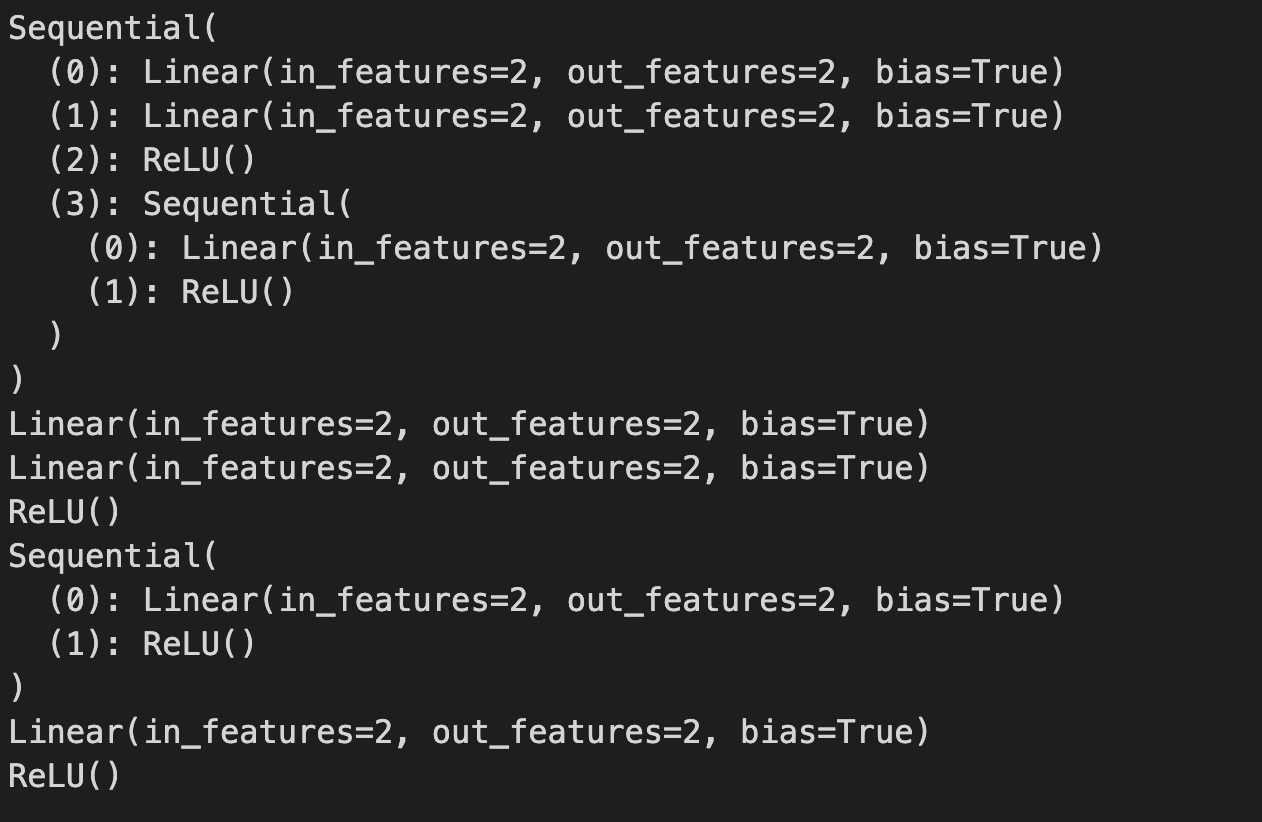

PyTorch in depth 1: torch.nn.Module methods and source code - Zhihu


From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Loss Functions (14)_torch loss api - CSDN Blog

From blog.csdn.net

Learning KL divergence loss_f.kldivloss - CSDN Blog

From blog.csdn.net

Loss functions: cross-entropy, KLDivLoss, label smoothing (LabelSmoothing) - CSDN Blog

From zhuanlan.zhihu.com

Let's learn PyTorch together: torch.nn perceptron neural networks - Zhihu

From blog.csdn.net

Usage differences between TensorFlow's KLDivergence and PyTorch's KLDivLoss_tf.keras.losses

From github.com

“TypeError forward() got an unexpected keyword argument 'log_target

From www.researchgate.net

Looplevel representation for torch.nn.Linear(32, 32) through

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples - PyTorch Tutorial

From www.educba.com

torch.nn Module - Modules and Classes in torch.nn Module with Examples

From www.bilibili.com

[pytorch] Understanding nn.KLDivLoss (KL divergence) and nn.CrossEntropyLoss (cross-entropy) in depth - 半瓶汽水oO机器

From blog.csdn.net

Deep Learning 06: logistic regression (torch implementation)_torch.nn.sigmoid - CSDN Blog

From zhuanlan.zhihu.com

Usage of Torch.nn.Embedding - Zhihu

From blog.csdn.net

PyTorch notes: torch.nn.Linear() vs. torch.nn.function.linear()_torch.nn

From massive11.github.io

Summary of commonly used losses for BEV models - Untitled.

From velog.io

[Pytorch] torch.nn.Parameter

From blog.csdn.net

PyTorch nn.KLDivLoss: reduction='none', 'mean', 'batchmean' explained_nn

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples - PyTorch Tutorial

From developer.aliyun.com

[PyTorch] Neural Network (Part 2) - Alibaba Cloud Developer Community

From blog.csdn.net

[Study notes] PyTorch deep learning: loss functions (2)_cosine similarity loss code - CSDN Blog

From github.com

Using torch.nn.CrossEntropyLoss along with torch.nn.Softmax output

From blog.csdn.net

Usage examples of torch.nn.functional.relu() and torch.nn.ReLU() - CSDN Blog

From github.com

GitHub - flexible torch neural network

From blog.csdn.net

Deep Learning 06: logistic regression (torch implementation)_torch.nn.sigmoid - CSDN Blog

From blog.csdn.net

nn.KLDivLoss (2)_KL loss computes very large values - CSDN Blog

From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Loss Functions (14)_torch loss api - CSDN Blog

From www.youtube.com

Parallel analog to torch.nn.Sequential container - YouTube

From github.com

KLDivLoss and F.kl_div compute KL(Q || P) rather than KL(P || Q

From zhuanlan.zhihu.com

PyTorch in depth 1: torch.nn.Module methods and source code - Zhihu

From blog.csdn.net

[PyTorch] torch.nn.init.xavier_uniform_() - CSDN Blog

From blog.csdn.net

Differences between torch.sigmoid, torch.nn.Sigmoid, and torch.nn.functional.sigmoid - CSDN Blog

From jamesmccaffrey.wordpress.com

PyTorch Custom Weight Initialization Example - James D. McCaffrey

From blog.csdn.net

torch.nn.Linear and torch.nn.MSELoss_torch mseloss with a specified dimension - CSDN Blog

From blog.csdn.net

PyTorch: learning torch basics_torch learning - CSDN Blog

From codeantenna.com

PyTorch basics: the groups parameter in torch.nn.Conv2d - CodeAntenna

From aeyoo.net

Introduction to pytorch Module - TiuVe