torch.nn.BatchNorm1d. BatchNorm1d is a module that applies batch normalization (batchnorm) to 1D input data (often representing features or channels) within a neural network. For data fed into networks without spatial dimensions, such as the output of linear layers, use nn.BatchNorm1d. torch.nn.LazyBatchNorm1d is a :class:`torch.nn.BatchNorm1d` module with lazy initialization: the ``num_features`` argument of the BatchNorm1d is inferred from the input (``input.size(1)``) rather than passed explicitly.
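As a rough illustration of what the description above means, the following pure-Python sketch normalizes each feature column of a small (N, C) batch and infers the feature count from the first input. It is not PyTorch's implementation: `batch_norm_1d` and `LazyNorm` are hypothetical names introduced here, the learnable scale and shift and the running statistics are left out, and the biased variance and `eps=1e-5` are assumed to mirror the training-mode defaults of `nn.BatchNorm1d`.

```python
import math


def batch_norm_1d(batch, eps=1e-5):
    """Normalize each feature (column) of an (N, C) batch over the batch dimension.

    Illustrative only: no learnable scale/shift and no running statistics.
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for c in range(num_features):
        column = [row[c] for row in batch]
        mean = sum(column) / n
        # Biased variance (divide by N), with eps added for numerical stability.
        var = sum((v - mean) ** 2 for v in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            out[i][c] = (batch[i][c] - mean) * inv_std
    return out


class LazyNorm:
    """Hypothetical helper: infers num_features from the first batch it sees."""

    def __init__(self):
        self.num_features = None  # unknown until the first call

    def __call__(self, batch):
        width = len(batch[0])
        if self.num_features is None:
            self.num_features = width  # analogous to reading input.size(1)
        elif width != self.num_features:
            raise ValueError(f"expected {self.num_features} features, got {width}")
        return batch_norm_1d(batch)


layer = LazyNorm()
normalized = layer([[1.0, 10.0], [3.0, 30.0]])  # 2 samples, 2 features
```

After the first call, `layer.num_features` is fixed at 2, and each column of `normalized` has approximately zero mean and unit variance. In PyTorch itself the same roles are played by `nn.BatchNorm1d(num_features)` and `nn.LazyBatchNorm1d()`.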

From discuss.pytorch.org

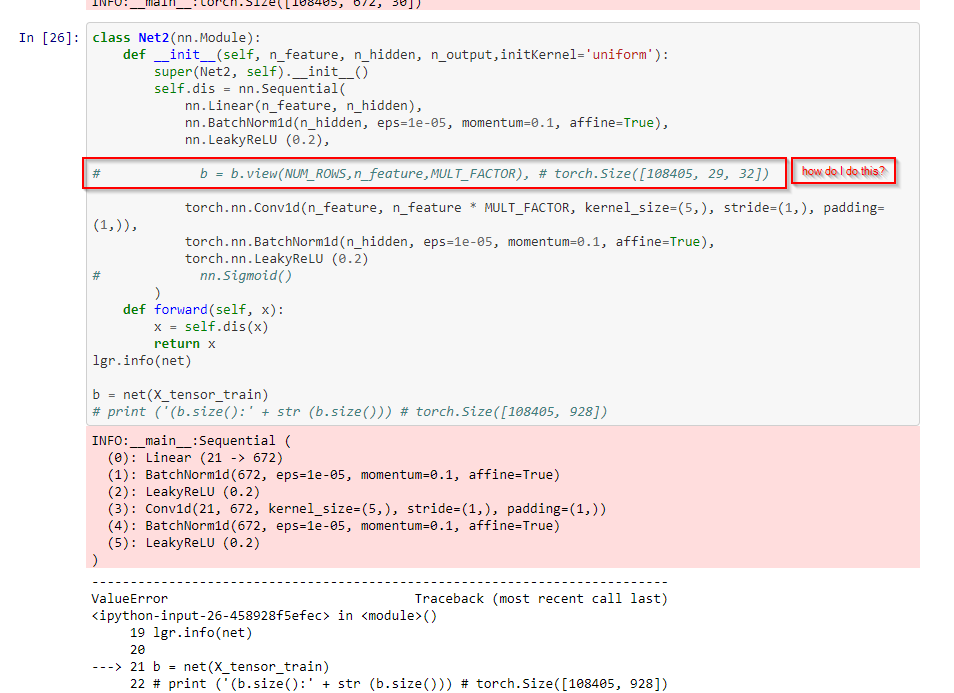

Issue with nn.conv1d() for one dimensional data PyTorch Forums

From blog.csdn.net

normalization in nn (batchnorm layernorm instancenorm groupnorm) CSDN Blog

From github.com

🐛 [Bug] Error compiling torch.nn.BatchNorm1d on Docker built on top of

From www.youtube.com

Regularization module torch.nn.BatchNorm1d explained YouTube

From blog.csdn.net

nn.BatchNorm and nn.LayerNorm explained in detail (using nn.layernorm) CSDN Blog

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From github.com

torch.nn.BatchNorm1d Segmentation Fault with mixed CPU/GPU · Issue

From blog.csdn.net

nn.BatchNorm explained, with nn.BatchNorm1d and nn.BatchNorm2d code demos CSDN Blog

From github.com

torchdirectml RuntimeError on nn.BatchNorm1d · Issue 407

From www.youtube.com

torch.nn.BatchNorm1d Explained YouTube

From www.youtube.com

nn.BatchNorm1d in PyTorch (mistake pointed in description) YouTube

From blog.sciencenet.cn

ScienceNet: the nn.Conv1d, Conv2D and BatchNorm1d, BatchNorm2d functions in PyTorch (Zhang Wei's blog post)

From blog.csdn.net

PyTorch regularization and batch standardization (pytorch standardization) CSDN Blog

From discuss.pytorch.org

Torch.nn.modules.rnn PyTorch Forums

From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Pooling layers (3) (pytorch nn.avgpool1d) CSDN Blog

From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Normalization Layers (7) (lazybatchnorm) CSDN Blog

From blog.csdn.net

About PyTorch BatchNorm1d, 2d, 3d (differences between batchnormalization1d, 2d, 3d in pytorch)

From blog.csdn.net

[Pytorch] torch.nn.init.xavier_uniform_() CSDN Blog

From blog.csdn.net

How does the shape of the raw data change after passing through the convolution layer conv, the batch normalization layer BatchNorm1d, and the max pooling layer MaxPool1d? (after batch normalization nn

From blog.csdn.net

001 Conv2d, BatchNorm2d, MaxPool2d (running batchnorm2d after torch conv2d) CSDN Blog

From zhuanlan.zhihu.com

torch.nn: Normalization Layers (Zhihu)

From blog.csdn.net

The difference between torch.sigmoid, torch.nn.Sigmoid and torch.nn.functional.sigmoid CSDN Blog

From blog.csdn.net

Pytorch BN (BatchNormal) computation process and source code analysis, and the difference between train and eval (detailed batchnorm2d computation) CSDN Blog

From blog.csdn.net

batchnorm2d parameters torch: ScienceNet, nn.Conv1d, Conv2D and BatchNorm1d in PyTorch

From blog.csdn.net

Batch normalization CSDN Blog

From zhuanlan.zhihu.com

PyTorch in depth 1: torch.nn.Module methods and source code (Zhihu)

From blog.csdn.net

Adding a Residual Block to a 1D CNN, Python implementation and explanation (pytorch 1dcnn) CSDN Blog

From blog.csdn.net

Fix: AttributeError SyncBatchNorm is only supported within torch.nn

From discuss.pytorch.org

Performance difference when using BatchNorm1d vs StandardScaler

From codeantenna.com

batchnorm2d parameters torch: ScienceNet, nn.Conv1d, Conv2D and BatchNorm1d in PyTorch

From www.chegg.com

Solved torch.nn.BatchNorm1d() and nn. Dropout() after the

From discuss.pytorch.org

Loss abnormal after using batchnorm1d autograd PyTorch Forums

From discuss.pytorch.org

How to normalize audio data in PyTorch? audio PyTorch Forums