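Print Java Rdd

To print an RDD, first apply the transformations on the RDD. Then use the `collect()` method to retrieve the data from the RDD to the driver. In Scala this returns an `Array`, while in the Java API `JavaRDD.collect()` returns a `java.util.List`. Finally, iterate over the result of `collect()` and print/show it on the console. Make sure your RDD is small enough to fit in the Spark driver's memory, because `collect()` copies every element to the driver.

In Scala you can also define a small helper that takes an `org.apache.spark.rdd.RDD[_]` and calls `rdd.foreach(println)` [2], or, even better, use implicits to add such a method to the RDD class itself. Keep in mind that `foreach` runs on the executors, so on a cluster the printed lines end up in the executors' stdout rather than on the driver console.

I have taken the depicted results from a Spark decision tree model into a JavaPairRDD, as below.

A few other RDD operations are useful here:

- `pipe` returns an RDD created by piping elements to a forked external process. The resulting RDD is computed by executing the given process once per partition.
- `subtract` returns an RDD with the elements from this RDD that are not in `other`. It uses this RDD's partitioner and partition size, because even if `other` is huge, the result can be no larger than this RDD.
- For a simple map-reduce, map an RDD of doubles to a new RDD of integers, then reduce it with the `Integer` class's `sum` method (`Integer::sum`) to return the summed value of your RDD.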
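
A minimal Java sketch of the collect-then-print approach described above. It assumes `spark-core` is on the classpath and that Spark runs in local mode (`local[*]`); the class and method names (`PrintRdd`, `collectAndPrint`, `takeAndPrint`) are hypothetical. `take(n)` is shown next to `collect()` because it fetches at most `n` elements, which keeps a large RDD from being pulled entirely into driver memory.

```java
import java.util.Arrays;
import java.util.List;

import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

// Hypothetical helper class; assumes spark-core is on the classpath.
public class PrintRdd {

    // Brings the whole RDD to the driver and prints one element per line.
    // Only safe when the RDD fits in driver memory.
    static List<String> collectAndPrint(JavaRDD<String> rdd) {
        List<String> rows = rdd.collect();
        for (String row : rows) {
            System.out.println(row);
        }
        return rows;
    }

    // Safer for large RDDs: fetches at most n elements to the driver.
    static List<String> takeAndPrint(JavaRDD<String> rdd, int n) {
        List<String> rows = rdd.take(n);
        rows.forEach(System.out::println);
        return rows;
    }

    public static void main(String[] args) {
        // Local mode is an assumption for this sketch; on a cluster, pass your own master URL.
        try (JavaSparkContext sc = new JavaSparkContext("local[*]", "print-rdd")) {
            JavaRDD<String> words = sc.parallelize(Arrays.asList("spark", "java", "rdd"));
            // Apply the transformation first, then collect and print on the driver.
            JavaRDD<String> upper = words.map(String::toUpperCase);
            collectAndPrint(upper);
            takeAndPrint(upper, 2);
        }
    }
}
```

Because both helpers print on the driver, the output appears in the console the job was launched from, unlike `rdd.foreach(...)`.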

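For the decision tree case, the same idea applies to a `JavaPairRDD`: `collect()` returns a `List<Tuple2<K, V>>`, and each pair can be read with `_1()` and `_2()`. This is a sketch only; it assumes (prediction, label) pairs of type `Double`, and the data as well as the names `PrintPairRdd` and `formatPairs` are hypothetical stand-ins, not output from a real model.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaSparkContext;
import scala.Tuple2;

// Hypothetical helper class; assumes spark-core is on the classpath.
public class PrintPairRdd {

    // Collects the pairs to the driver and formats each one as "key -> value".
    static List<String> formatPairs(JavaPairRDD<Double, Double> pairs) {
        List<String> lines = new ArrayList<>();
        for (Tuple2<Double, Double> t : pairs.collect()) {
            lines.add(t._1() + " -> " + t._2());
        }
        return lines;
    }

    public static void main(String[] args) {
        try (JavaSparkContext sc = new JavaSparkContext("local[*]", "print-pair-rdd")) {
            // Hypothetical (prediction, label) pairs standing in for decision tree results.
            List<Tuple2<Double, Double>> data = Arrays.asList(
                    new Tuple2<>(1.0, 1.0),
                    new Tuple2<>(0.0, 1.0),
                    new Tuple2<>(0.0, 0.0));
            JavaPairRDD<Double, Double> predictionAndLabel = sc.parallelizePairs(data);
            formatPairs(predictionAndLabel).forEach(System.out::println);
        }
    }
}
```
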
from www.youtube.com


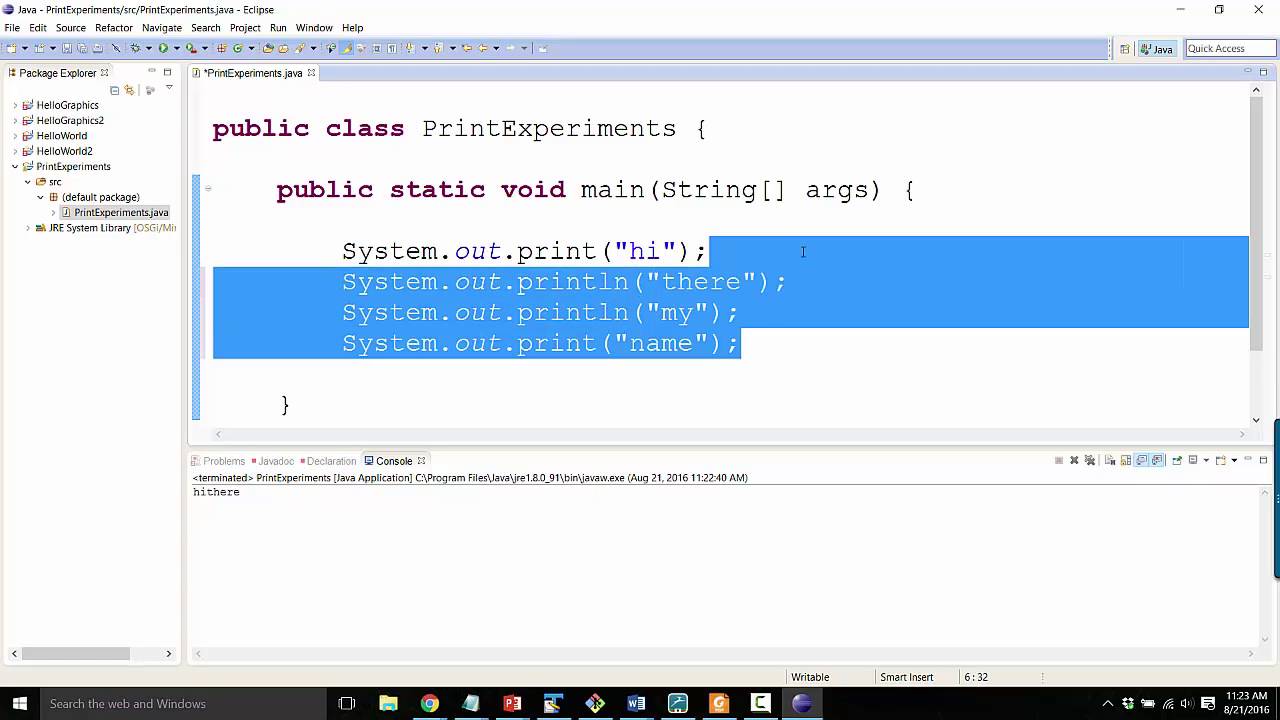

Intro Java Print statements YouTube
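
The simple map-reduce described earlier can be sketched in Java as follows, again assuming `spark-core` on the classpath and local mode. `Double::intValue` truncates each value toward zero, and `reduce(Integer::sum)` then adds the integers up; the names `SumRdd` and `sumAsInts` are hypothetical.

```java
import java.util.Arrays;

import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

// Hypothetical helper class; assumes spark-core is on the classpath.
public class SumRdd {

    // Map step: Double -> Integer (truncating toward zero).
    // Reduce step: combine the integers pairwise with Integer::sum.
    static int sumAsInts(JavaRDD<Double> doubles) {
        JavaRDD<Integer> ints = doubles.map(Double::intValue);
        return ints.reduce(Integer::sum);
    }

    public static void main(String[] args) {
        try (JavaSparkContext sc = new JavaSparkContext("local[*]", "sum-rdd")) {
            JavaRDD<Double> doubles = sc.parallelize(Arrays.asList(1.5, 2.7, 3.9));
            System.out.println(sumAsInts(doubles));
        }
    }
}
```

Note that `reduce` throws an exception on an empty RDD; `fold(0, Integer::sum)` is an alternative that returns 0 in that case.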

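`subtract` and `pipe` can be tried the same way. This sketch assumes `spark-core` on the classpath and a Unix-like machine with `cat` on the PATH for the `pipe` call; `SubtractAndPipe`, `subtractSorted` and `pipeThrough` are hypothetical names. The `subtract` result is sorted before it is returned because the shuffle behind `subtract` does not keep the original element order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

// Hypothetical helper class; assumes spark-core is on the classpath.
public class SubtractAndPipe {

    // Elements of `left` that are not in `right`, sorted for stable output.
    static List<Integer> subtractSorted(JavaRDD<Integer> left, JavaRDD<Integer> right) {
        List<Integer> result = new ArrayList<>(left.subtract(right).collect());
        Collections.sort(result);
        return result;
    }

    // Writes each element as a line to the external command's stdin and reads its stdout lines.
    // Spark starts the command once per partition.
    static List<String> pipeThrough(JavaRDD<String> rdd, String command) {
        return rdd.pipe(command).collect();
    }

    public static void main(String[] args) {
        try (JavaSparkContext sc = new JavaSparkContext("local[*]", "subtract-pipe")) {
            JavaRDD<Integer> left = sc.parallelize(Arrays.asList(1, 2, 3, 4, 5));
            JavaRDD<Integer> right = sc.parallelize(Arrays.asList(2, 4, 6));
            System.out.println(subtractSorted(left, right));

            // Assumes `cat` exists (Unix-like system); it echoes each element back unchanged.
            JavaRDD<String> lines = sc.parallelize(Arrays.asList("a", "b", "c"), 2);
            pipeThrough(lines, "cat").forEach(System.out::println);
        }
    }
}
```
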