Dice And Entropy

This post is all about dice and maximum entropy. It introduces the notion of entropy, which is fundamental to the whole topic of this book. That sounds like a good reason to dive into its meaning. A rolling die is a familiar example that is easy to analyze. A single die has 6 faces with values (1, 2, 3, 4, 5, 6) and a uniform probability of 1/6 for every value, so the …

The post has four parts. In the first part, I introduce the maximum entropy principle using the example of a die. The second part covers the math. It treats entropy as a measure of the multiplicity of a system, because the probability of finding a system in a given state depends upon the multiplicity of that state. It also recaps the four axioms that make entropy a unique function, and presents the main questions of information theory: data compression and error correction.

"Dice" also names a family of loss functions used in image segmentation. We therefore compare the Dice loss with the cross-entropy loss and present the connection between cross-entropy and Dice-related losses in segmentation. [16] proposes applying exponential and logarithmic transforms to both the Dice loss and the cross-entropy loss.
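The fair-die case can be made concrete with a minimal Python sketch of Shannon entropy, H(p) = -Σ p_i log p_i. The helper name `shannon_entropy` and the loaded-die probabilities are illustrative assumptions and do not come from the post. For the uniform distribution over six faces, the entropy is log2 6 ≈ 2.585 bits (ln 6 ≈ 1.792 nats). That is the largest value any six-sided die can reach.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Shannon entropy H(p) = -sum p_i log p_i; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

fair_die = [1 / 6] * 6
h_bits = shannon_entropy(fair_die)          # log2(6)
h_nats = shannon_entropy(fair_die, math.e)  # ln(6)
print(f"{h_bits:.3f} bits, {h_nats:.3f} nats")  # 2.585 bits, 1.792 nats

# Hypothetical loaded die: any departure from uniform lowers the entropy.
loaded_die = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
print(f"{shannon_entropy(loaded_die):.3f} bits")  # 2.161 bits
```

The loaded die comes out about 0.42 bits below the fair one. This is the sense in which the uniform distribution is the "maximum entropy" choice when nothing else is known.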

From www.datasciencecentral.com

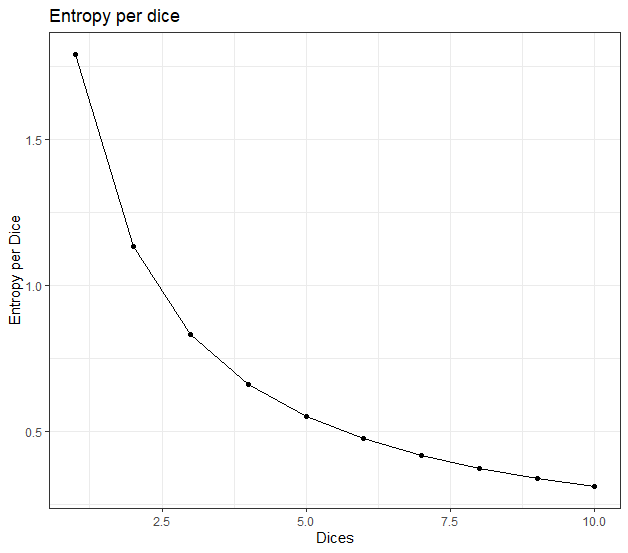

Entropy of rolling dices

We also expose the hidden bias of dice.

From www.mdpi.com

Cluster-Based Thermodynamics of Interacting Dice in a Lattice

From www.researchgate.net

Results of testing the binary cross-entropy loss function and Dice loss
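The comparison between the Dice loss and the cross-entropy loss can be sketched in plain Python. The sketch assumes the common "soft Dice" form, 1 - (2 Σ p g + ε) / (Σ p + Σ g + ε), and the mean binary cross-entropy over pixels. The function names, the ε values and the 10-pixel mask are hypothetical and do not come from the sources above.

```python
import math

def soft_dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss over flattened pixels: 1 - (2*sum(p*g) + eps) / (sum(p) + sum(g) + eps)."""
    intersection = sum(p * g for p, g in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)

def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean of -[g log p + (1 - g) log(1 - p)], with p clipped away from 0 and 1."""
    total = 0.0
    for p, g in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total += -(g * math.log(p) + (1 - g) * math.log(1 - p))
    return total / len(pred)

# Hypothetical 10-pixel mask with a single foreground pixel (strong class imbalance).
target = [0.0] * 9 + [1.0]
all_background = [0.01] * 10  # the model predicts "background" everywhere

print(f"BCE:  {binary_cross_entropy(all_background, target):.3f}")
print(f"Dice: {soft_dice_loss(all_background, target):.3f}")
```

On this imbalanced mask the mean cross-entropy stays moderate (about 0.47), because the nine easy background pixels dilute the one missed foreground pixel. The Dice loss, by contrast, is close to 1 (about 0.98), because it only measures overlap with the foreground.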

From contrattypetransport.blogspot.com

Contrat type transport Dice loss vs cross entropy

From www.researchgate.net

(a) Dice coefficient (DC) curve and (b) cross entropy loss curve for

From www.researchgate.net

By comparing the cross-entropy loss function, Dice loss function
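The intro cites [16] for applying exponential and logarithmic transforms to both the Dice loss and the cross-entropy loss. One well-known loss of this kind combines the two terms as w_dice · (-ln Dice)^γ + w_cross · mean((-ln p_true)^γ). The sketch below assumes that form, a single foreground class, uniform class weights, and illustrative defaults γ = 0.3, w_dice = 0.8, w_cross = 0.2. Whether it matches [16] exactly is an assumption, so check the cited paper before relying on it. The function name `exp_log_loss` is hypothetical.

```python
import math

def exp_log_loss(pred, target, gamma=0.3, w_dice=0.8, w_cross=0.2, eps=1e-6):
    """ASSUMED form of an exponential-logarithmic combination of Dice and cross-entropy:
    w_dice * (-ln Dice)^gamma + w_cross * mean((-ln p_true)^gamma),
    for one foreground class with uniform class weights (illustrative defaults)."""
    # Dice term: soft Dice over the flattened mask, then log and power transforms.
    intersection = sum(p * g for p, g in zip(pred, target))
    dice = (2.0 * intersection + eps) / (sum(pred) + sum(target) + eps)
    # max(0.0, ...) guards against tiny negative values from rounding, since a
    # negative float raised to a fractional power would yield a complex number.
    dice_term = max(0.0, -math.log(dice)) ** gamma

    # Cross-entropy term: the same log and power transforms applied per pixel.
    cross_terms = []
    for p, g in zip(pred, target):
        p_true = p if g >= 0.5 else 1.0 - p  # probability assigned to the true label
        p_true = min(max(p_true, eps), 1.0)  # clip to avoid log(0)
        cross_terms.append(max(0.0, -math.log(p_true)) ** gamma)
    cross_term = sum(cross_terms) / len(cross_terms)

    return w_dice * dice_term + w_cross * cross_term

# Hypothetical 10-pixel mask with one foreground pixel.
target = [0.0] * 9 + [1.0]
print(exp_log_loss(target, target))               # perfect prediction -> 0.0
print(exp_log_loss([0.01] * 9 + [0.6], target))   # finds the foreground, imperfectly
print(exp_log_loss([0.01] * 10, target))          # misses the foreground
```

With γ < 1, the power transform compresses large per-term losses and lifts small ones. This is one way to rebalance the two components without changing which predictions are better.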

From towardsdatascience.com

Throwing dice with maximum entropy principle Towards Data Science
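The maximum entropy principle from the first part can be illustrated with the classic constrained-die problem. Among all distributions over faces 1 to 6 with a prescribed mean, pick the one with the largest entropy. Lagrange multipliers give the Gibbs form p_i = exp(λ i) / Z(λ). Because the mean grows monotonically with λ (its derivative is the variance), a bisection on λ is enough. This is a sketch under those assumptions. The mean constraint and the name `maxent_die` are illustrative and are not necessarily the setup used in the post.

```python
import math

FACES = range(1, 7)

def maxent_die(target_mean, lo=-50.0, hi=50.0, iters=200):
    """Maximum-entropy distribution over faces 1..6 with a fixed mean (illustrative sketch).

    The solution has the form p_i = exp(lam * i) / Z(lam). The mean is strictly
    increasing in lam, so bisection on lam finds the unique solution.
    """
    if not 1.0 < target_mean < 6.0:
        raise ValueError("target mean must lie strictly between 1 and 6")

    def dist(lam):
        weights = [math.exp(lam * i) for i in FACES]
        z = sum(weights)  # partition function Z(lam)
        return [w / z for w in weights]

    def mean(lam):
        return sum(i * p for i, p in zip(FACES, dist(lam)))

    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist(0.5 * (lo + hi))

for m in (3.5, 4.5):
    print(m, [round(p, 4) for p in maxent_die(m)])
```

With a target mean of 3.5 (the fair-die mean) the result is uniform. With a target of 4.5 the probabilities rise steadily from face 1 to face 6, which is the least-biased way to account for the higher mean.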

From support.airgap.it

Coin Flip and Dice Roll Entropy Collection AirGap Support
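Collecting entropy from dice rolls rests on a simple calculation. Each fair, independent roll of an n-sided die contributes log2 n bits. The number of rolls needed for a target amount of entropy is therefore the target divided by log2 n, rounded up. The sketch below assumes perfectly fair dice and coins, and the name `rolls_needed` is hypothetical. A biased die contributes fewer bits per roll, so real procedures may ask for more.

```python
import math

def rolls_needed(target_bits, sides=6):
    """Smallest number of fair, independent rolls whose combined entropy reaches target_bits."""
    return math.ceil(target_bits / math.log2(sides))

for bits in (128, 256):
    print(f"{bits} bits: {rolls_needed(bits)} d6 rolls or {rolls_needed(bits, sides=2)} coin flips")
# 128 bits: 50 d6 rolls or 128 coin flips
# 256 bits: 100 d6 rolls or 256 coin flips
```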

From www.flickr.com

Two Dice Permutations: Entropy, 2 dice, 7 is the most rand… Flickr
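The two-dice caption above illustrates entropy as multiplicity. Each ordered pair of faces is an equally likely microstate, the sum is the macrostate, and the multiplicity W counts the microstates behind each sum. A short enumeration in Python shows that 7 has the largest multiplicity. The variable names are illustrative.

```python
import math
from collections import Counter
from itertools import product

# Each ordered pair (first die, second die) is one equally likely microstate;
# the sum is the macrostate, and W(sum) counts the microstates that produce it.
multiplicity = Counter(a + b for a, b in product(range(1, 7), repeat=2))

for total in sorted(multiplicity):
    w = multiplicity[total]
    print(f"sum {total:2d}: W = {w}, P = {w}/36, ln W = {math.log(w):.3f}")

most_likely = max(multiplicity, key=multiplicity.get)
print("most likely sum:", most_likely)  # 7, with W = 6 of the 36 microstates
```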

From paperswithcode.com

Unified Focal loss: Generalising Dice and cross entropy-based losses to

From www.youtube.com

5 How to do COLDCARD Dice Rolls seed entropy YouTube

From www.researchgate.net

Comparison of binary cross entropy and dice coefficient values for

From www.researchgate.net

Top view of a square dice of 6 cm in length. Non-uniform scalar entropy

From www.youtube.com

Dice and Entropy YouTube

From www.expii.com

Entropy: Definition & Overview Expii

From www.youtube.com

Entropy Calculation Part 2 Intro to Machine Learning YouTube

From enoch-brinkmeyer.blogspot.com

dice games properly explained pdf enochbrinkmeyer

From www.researchgate.net

Comparison of cross entropy and Dice losses for segmenting small and

From bytepawn.com

Bytepawn, Marton Trencseni: Cross entropy, joint entropy, conditional
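For two distributions over the same six faces, the cross-entropy H(p, q) = -Σ p_i log2 q_i splits into the entropy of p plus the Kullback-Leibler divergence: H(p, q) = H(p) + D_KL(p || q). The sketch below checks that identity for a hypothetical loaded die p against a fair-die model q. The probabilities and function names are illustrative assumptions.

```python
import math

def entropy(p):
    """H(p) in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """H(p, q) in bits: expected code length when outcomes follow p but the code is built for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """D_KL(p || q) in bits; non-negative, and zero only when p == q."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

fair = [1 / 6] * 6
loaded = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]  # hypothetical loaded die

print(f"H(loaded, fair)        = {cross_entropy(loaded, fair):.3f}")  # 2.585 = log2 6
print(f"H(loaded) + KL         = {entropy(loaded) + kl_divergence(loaded, fair):.3f}")  # 2.585
print(f"KL(loaded || fair)     = {kl_divergence(loaded, fair):.3f}")  # 0.424
print(f"H(fair, loaded)        = {cross_entropy(fair, loaded):.3f}")  # 2.935: not symmetric
```

Coding rolls of any die with a fair-die code costs log2 6 bits per roll. The KL term is the overhead of ignoring the bias, and swapping the arguments changes the result.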

From www.youtube.com

Entropy, Microstates, and the Boltzmann Equation Pt 2 YouTube
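Boltzmann's S = k ln W ties entropy to multiplicity, and the same idea links back to the Shannon entropy of a die. For N rolls with face counts n_1, ..., n_6, the multiplicity is the multinomial coefficient W = N! / (n_1! ... n_6!). As N grows, (1/N) ln W approaches the Shannon entropy -Σ (n_i/N) ln(n_i/N) in nats. The sketch below assumes equal counts per face for illustration. It uses `math.lgamma` to evaluate ln W without forming huge factorials, and its function names are hypothetical.

```python
import math

def log_multiplicity(counts):
    """ln W for W = N! / (n_1! ... n_k!), using lgamma(n + 1) = ln(n!)."""
    n = sum(counts)
    return math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in counts)

def entropy_nats(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)

# Macrostates with equal counts on every face, for growing numbers of rolls N.
for per_face in (1, 10, 100, 1000, 10000):
    counts = [per_face] * 6
    n = sum(counts)
    print(f"N = {n:6d}: (1/N) ln W = {log_multiplicity(counts) / n:.4f}")

print(f"Shannon entropy H = ln 6 = {entropy_nats([1 / 6] * 6):.4f}")  # 1.7918
```

The per-roll log-multiplicity climbs toward ln 6 from below. The gap shrinks roughly like (ln N)/N, which is the Stirling-approximation correction.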

From www.researchgate.net

(a) Dice coefficient curves for training dataset, (b) Cross entropy

From www.researchgate.net

The binary accuracy, dice coefficient and binary cross entropy loss

From www.researchgate.net

Loss and accuracy values, using Dice coefficient (blue) and

From medium.com

Entropy Collection with Dice Rolls and Coin Flips by Damilola Debel

From www.youtube.com

Entropy and Rolling Dice Example 3 YouTube

From gaussian37.github.io

Information Theory (Entropy, KL Divergence, Cross Entropy) gaussian37

From www.pinterest.com

Entropy God's Dice Game Entropy, Dice games, Understanding

From bricaud.github.io

A simple explanation of entropy in decision trees Benjamin Ricaud
