torch.cuda.comm.gather

The `torch.cuda` package adds support for CUDA tensor types. It implements the same functions as CPU tensors, but they use GPUs for computation.

`torch.cuda.comm.gather(tensors, dim=0, destination=None, *, out=None)` gathers tensors from multiple GPU devices. The inputs are concatenated along `dim`, so their sizes must match in every other dimension. The result is placed on the `destination` device, or written into `out` if that is given. A related utility, `torch.cuda.comm.reduce_add_coalesced()`, can handle a list of tensors with different sizes, but …

One user reports: "I'm using a GRU encoder and DataParallel. I already re-padded my sequences to the total length of the input, and it worked for …"

CUDA Sanitizer is a prototype tool for detecting synchronization errors between streams in PyTorch.
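
Here is a minimal sketch of the gather call. It assumes at least two visible GPUs. The helper name `gather_to` is hypothetical. With fewer than two GPUs, the sketch falls back to `torch.cat` on the CPU, which performs the same concatenation, so it also runs on CPU-only machines.

```python
import torch
import torch.cuda.comm as comm


def gather_to(tensors, dim=0, destination="cpu"):
    """Hypothetical helper: concatenate per-device tensors along `dim` on `destination`.

    Uses torch.cuda.comm.gather when every input is a CUDA tensor; otherwise
    falls back to torch.cat, which computes the same concatenation on one device.
    """
    if all(t.is_cuda for t in tensors):
        return comm.gather(tensors, dim=dim, destination=destination)
    # CPU fallback: move every shard to the destination, then concatenate.
    return torch.cat([t.to(destination) for t in tensors], dim=dim)


if torch.cuda.device_count() >= 2:
    devices = ["cuda:0", "cuda:1"]
else:
    devices = ["cpu", "cpu"]  # lets the sketch run without two GPUs

# Two shards that agree in every dimension except dim=0 (2 + 3 rows).
shards = [torch.ones(2, 4, device=devices[0]), torch.zeros(3, 4, device=devices[1])]
result = gather_to(shards, dim=0, destination="cpu")
print(result.shape)  # torch.Size([5, 4])
```

The shards differ only along `dim=0` (2 and 3 rows), so the result has 5 rows. Shards that also differed in their second dimension would be rejected.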

From blog.csdn.net

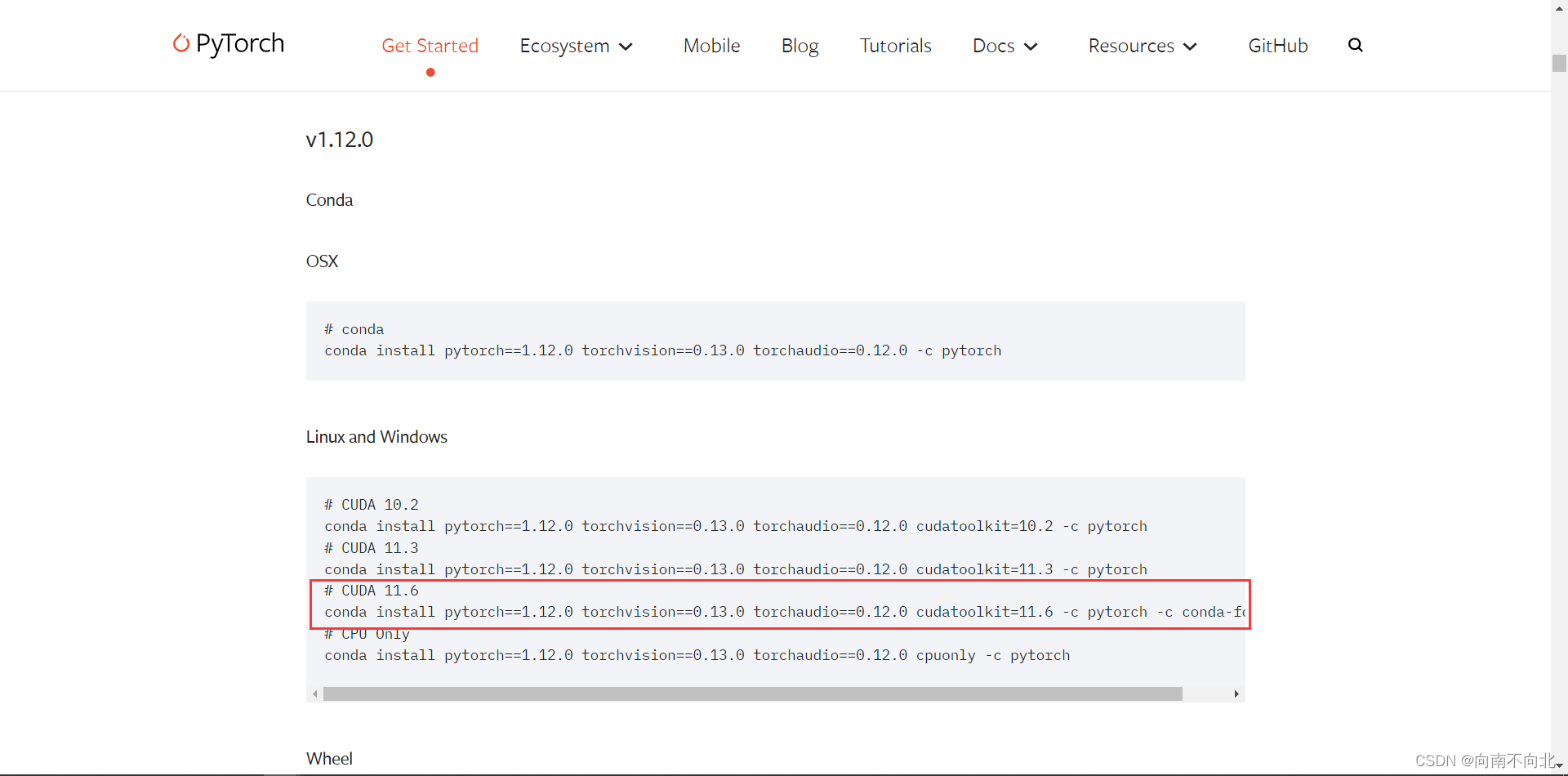

Deep Learning: Python, CUDA, cuDNN, and Torch Environment Setup (CSDN Blog)

From machinelearningknowledge.ai

[Diagram] How to use torch.gather() Function in PyTorch with Examples
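
`torch.gather` is a different operation from `torch.cuda.comm.gather`. It selects elements of a single tensor by index along one dimension. The short sketch below uses the small example from the PyTorch documentation. It also spells out the indexing rule for `dim=1` as a loop for comparison.

```python
import torch

t = torch.tensor([[1, 2], [3, 4]])
index = torch.tensor([[0, 0], [1, 0]])

# Along dim=1: out[i][j] = t[i][index[i][j]]
out = torch.gather(t, 1, index)
print(out.tolist())  # [[1, 1], [4, 3]]

# The same rule written as explicit loops.
manual = [
    [t[i][index[i][j]].item() for j in range(index.size(1))]
    for i in range(index.size(0))
]
assert manual == out.tolist()
```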

From exohzhnuf.blob.core.windows.net

torch.gather CUDA Error: Device-Side Assert Triggered (Steve Heil blog)
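
A common cause of a device-side assert in `torch.gather` is an index outside `[0, src.size(dim))`. On the GPU the failure is reported asynchronously. Setting the environment variable `CUDA_LAUNCH_BLOCKING=1`, or reproducing the problem on the CPU, makes the error point at the offending call. The sketch below adds an explicit bounds check. `checked_gather` is a hypothetical helper, not a PyTorch API.

```python
import torch


def checked_gather(src, dim, index):
    """Hypothetical helper: torch.gather with an explicit bounds check on `index`."""
    size = src.size(dim)
    if index.numel() and (index.min() < 0 or index.max() >= size):
        raise IndexError(
            f"gather index out of range for dim {dim} of size {size}: "
            f"min={index.min().item()}, max={index.max().item()}"
        )
    return torch.gather(src, dim, index)


src = torch.arange(6).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]
ok = checked_gather(src, 1, torch.tensor([[2, 0], [1, 1]]))
print(ok.tolist())  # [[2, 0], [4, 4]]

try:
    checked_gather(src, 1, torch.tensor([[3, 0], [0, 0]]))  # 3 is out of range for size 3
except IndexError as err:
    print("caught:", err)
```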

From github.com

torch.cuda.is_available() is False but check the python cmd torch.cuda
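
When `torch.cuda.is_available()` returns `False`, first establish whether the installed wheel was built with CUDA at all. Here is a minimal diagnostic sketch; the function name `cuda_report` is hypothetical. `torch.version.cuda` is `None` on CPU-only builds. A version string combined with `is_available() == False` usually points at the driver or the visible-device configuration instead.

```python
import torch


def cuda_report():
    """Hypothetical helper: collect the facts usually needed to debug is_available() == False."""
    return {
        "torch_version": torch.__version__,
        # None on CPU-only builds of PyTorch
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }


for key, value in cuda_report().items():
    print(f"{key}: {value}")
```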

From blog.csdn.net

torch.distributed Multi-Card / Multi-GPU / Distributed DDP (Part 1): torch.distributed.launch & all… (CSDN Blog)

From blog.csdn.net

[Latest 2023 Solution] Installing CUDA, cuDNN, and the GPU Build of PyTorch, and Fixing torch.cuda.is_available() Returning False, etc. (CSDN Blog)

From www.cnblogs.com

Checking the torch and CUDA Versions (灵性, cnblogs)

From github.com

torch.distributed.all_gather function stuck · Issue #10680 · openmmlab

From blog.csdn.net

Conda + CUDA + Torch + cuDNN Download and Installation (Summary) (CSDN Blog)

From www.cnblogs.com

Installing the CUDA Build of torch (一眉师傅, cnblogs)

From discuss.pytorch.org

How do I build this file to be included? (torch_cuda_cu.dll) PyTorch

From blog.csdn.net

Fixing torch.cuda.is_available() Returning False (CSDN Blog)

From blog.csdn.net

[PyTorch] A Detailed Illustrated Explanation of torch.gather() (CSDN Blog)

From nhanvietluanvan.com

AssertionError: Torch Not Compiled With CUDA Enabled
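
This assertion is typically raised when code calls `.cuda()` or requests a `"cuda"` device on a PyTorch build without CUDA support. One common way to keep a script portable is to choose the device once and move both the model and the data to it, as in this sketch:

```python
import torch

# Fall back to the CPU instead of failing on builds or machines without CUDA.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.nn.Linear(4, 2).to(device)
x = torch.randn(3, 4, device=device)
y = model(x)
print(y.shape, y.device)
```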

From blog.csdn.net

What to Do When torch.cuda.is_available() Shows False: Why Is It False Even Though CUDA Is Installed? (CSDN Blog)

From www.educba.com

PyTorch CUDA: Complete Guide on PyTorch CUDA

From stackoverflow.com

python 3.x - torch.cuda.is_available() returns False on ssh server with …

From github.com

torch.cuda.device_count() shows only one GPU in MIG A100 · Issue

From blog.csdn.net

The Most Complete Guide to Installing CUDA and the GPU Build of torch, and Running torch Code on the GPU (CSDN Blog)

From hub.tcno.co

How to Downgrade CUDA on Linux: Change CUDA Versions for Torch

From zhuanlan.zhihu.com

torch.cuda.is_available() Is False (How to Install the GPU Build of torch) (Zhihu)

From aitechtogether.com

An Analysis of How to Use torch.gather() (AI技术聚合)

From github.com

AttributeError: module 'torch.cuda' has no attribute … · Issue

From blog.csdn.net

Solution for torch.cuda.is_available() Returning False (CSDN Blog)

From blog.csdn.net

Configuring GPU-Based torch on a Server (A Solution for torch.cuda.is_available()) (CSDN Blog)

From blog.csdn.net

The Gather Problem in torch.nn.DataParallel: Dimension Mismatch (CSDN Blog)
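
A dimension mismatch when `nn.DataParallel` gathers RNN outputs usually comes from `pad_packed_sequence`. By default it pads each replica's slice only to that slice's longest sequence. The PyTorch FAQ recommends passing `total_length` so that every replica returns the full padded length. This is the "re-padding to the total length of the input" mentioned in the GRU question earlier. Below is a sketch under that assumption; `GRUEncoder` and its sizes are hypothetical.

```python
import torch
from torch import nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence


class GRUEncoder(nn.Module):
    """Hypothetical GRU encoder that is safe to wrap in nn.DataParallel."""

    def __init__(self, input_size=8, hidden_size=16):
        super().__init__()
        self.gru = nn.GRU(input_size, hidden_size, batch_first=True)

    def forward(self, padded_input, lengths):
        # Length of the full padded batch. Each DataParallel replica sees only
        # a slice of the batch, whose longest sequence may be shorter.
        total_length = padded_input.size(1)
        packed = pack_padded_sequence(
            padded_input, lengths.cpu(), batch_first=True, enforce_sorted=False
        )
        packed_out, _ = self.gru(packed)
        # Pad back to total_length so every replica returns the same T and the
        # outputs can be concatenated along dim 0 when DataParallel gathers them.
        output, _ = pad_packed_sequence(
            packed_out, batch_first=True, total_length=total_length
        )
        return output


encoder = GRUEncoder()
if torch.cuda.device_count() > 1:
    encoder = nn.DataParallel(encoder.cuda())
device = next(encoder.parameters()).device

batch = torch.randn(4, 10, 8, device=device)  # B=4, T=10, features=8
lengths = torch.tensor([7, 5, 3, 2])  # no sequence uses all 10 steps
out = encoder(batch, lengths)
print(out.shape)  # torch.Size([4, 10, 16])
```

With `lengths = [7, 5, 3, 2]`, the output would have only 7 time steps without `total_length`. On two GPUs, the second replica's slice would stop at 3 steps, and the gathered shapes would not match.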

From github.com

torch.cuda.synchronize() takes a lot of time for the 10B model training
