Collect List Import

The PySpark SQL functions `collect_list()` and `collect_set()` create an array (`ArrayType`) column on a DataFrame by merging rows, typically after a `groupBy()`. They are aggregate functions, which makes them a convenient way to turn the values of one column into a list per group. `collect_list()` returns a list of objects that keeps every value, including duplicates. `collect_set()` keeps only distinct values. The signature of `collect_list` is `pyspark.sql.functions.collect_list(col: ColumnOrName) → pyspark.sql.column.Column`.

To use `collect_list` and `collect_set`, import them from the `pyspark.sql.functions` module, either by name or through the usual alias: `from pyspark.sql import functions as F`.

`collect_list()` can also be used with the `select()` method: `dataframe.select(collect_list(column_name), ...)`, where `column_name` is the column whose values are collected. Without a grouping, the whole DataFrame is aggregated into a single row. In Spark SQL the equivalent form is `SELECT ..., collect_list(...) AS list_column FROM ... GROUP BY ...`.

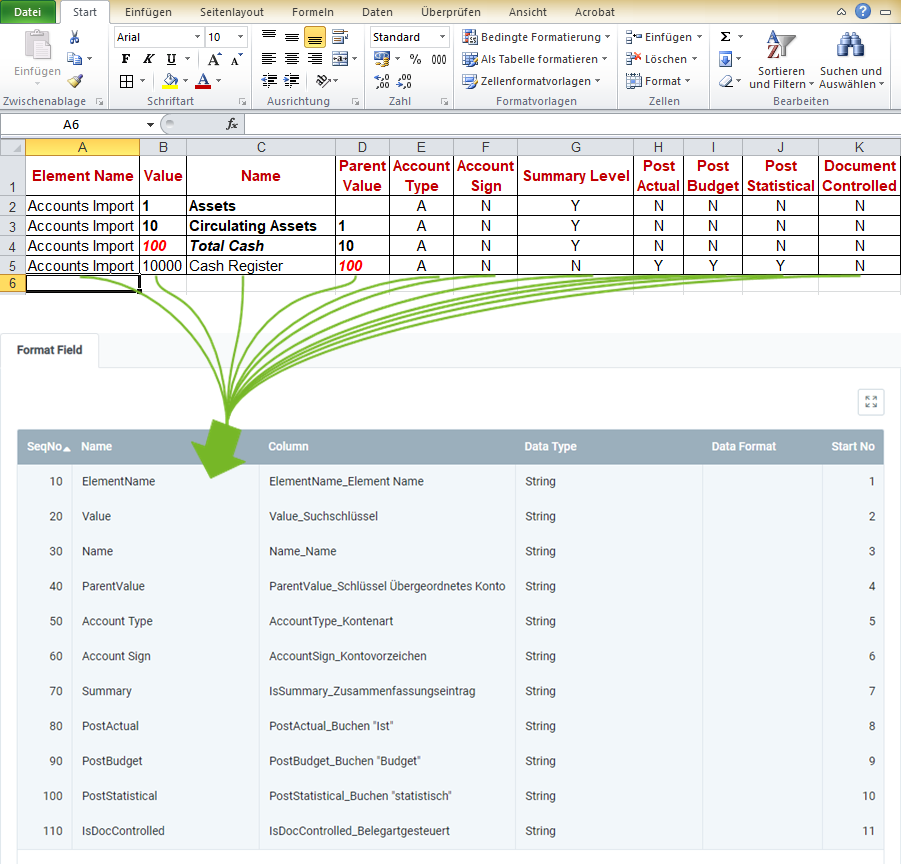

Format example for importing chart of accounts data

A common follow-up question is how to control the order of the collected values. One attempt sorts the DataFrame before aggregating. It imports the functions with `from pyspark.sql import functions as F`, builds `ordered_df = input_df.orderBy(['id', 'date'], ascending=True)`, and then applies `collect_list()` to `ordered_df`. This is not reliable. The order of elements produced by `collect_list()` depends on the order of the rows, and that order may be non-deterministic after a shuffle.