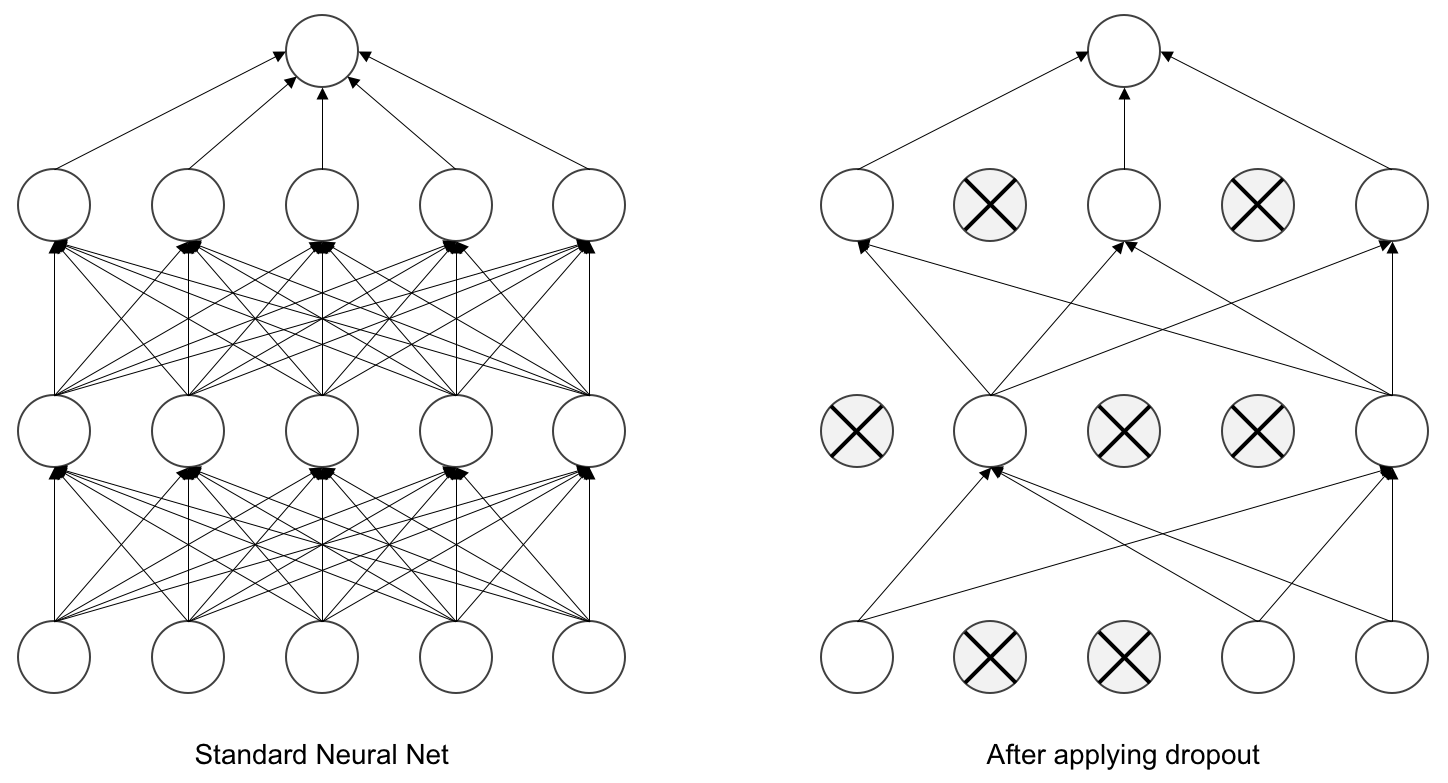

What Is Dropout Rate Neural Network.

Dropout is a regularization technique in which randomly selected neurons (more precisely, some layer outputs) are ignored, or “dropped out”, during training. The term “dropout” refers to dropping out nodes (in the input and hidden layers) of a neural network (as seen in figure 1). All the forward and backward connections with a dropped node are temporarily removed: its contribution to the activation of downstream neurons is removed on the forward pass, and no gradient flows through it on the backward pass. Dropping a fraction of neurons at each training step effectively creates a sparse network, and this randomness prevents the network from relying too heavily on any particular neuron, which helps reduce overfitting. The technique was introduced in the paper “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”.

The dropout rate p is the probability that a given neuron is dropped. For instance, p=0.5 implies that each neuron has a 50% chance of being dropped. The set of dropped neurons is resampled on every forward pass during training (typically once per mini-batch), not once per epoch.

A typical question that leads to this topic: “I am currently building a convolutional neural network to play the game 2048. It has convolution layers and then 6 hidden layers. All of the guidance online mentions a dropout rate.”
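The random dropping described above can be sketched in a few lines of plain Python. This is a minimal illustration, not any particular library's implementation: the function name `dropout`, its parameters, and the sample values are assumptions made for the example. It uses the common "inverted dropout" variant, in which surviving activations are scaled by 1/(1-p) during training so that nothing needs to change at inference time.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p while training.

    `p` is the dropout rate (probability of dropping), an assumption of this
    sketch; some libraries and papers parameterise by keep probability instead.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference time every neuron is kept and nothing is rescaled.
        return list(activations)
    keep = 1.0 - p
    # A fresh random mask is drawn on every call (every forward pass).
    # Survivors are scaled by 1/keep so the expected activation is unchanged.
    return [a / keep if rng.random() >= p else 0.0 for a in activations]


rng = random.Random(0)          # seeded only to make the demo repeatable
layer_output = [1.0] * 10000    # hypothetical activations of one layer

dropped = dropout(layer_output, p=0.5, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
mean_activation = sum(dropped) / len(dropped)

print(f"fraction dropped: {zero_fraction:.3f}")   # close to p
print(f"mean activation:  {mean_activation:.3f}") # close to the original mean
```

With p=0.5, roughly half of the outputs are zeroed on each call and the rest are doubled, so the layer's mean activation stays close to its original value. Calling `dropout(..., training=False)` returns the activations unchanged.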

From aashay96.medium.com


Experimentation with Variational Dropout Do exist inside a


From www.geogebra.org

Deep Neural Network Dropout GeoGebra

From www.researchgate.net

The Recurrent Neural Network Model with possible Dropout Locations

From www.weixiushen.com

Must Know Tips/Tricks in Deep Neural Networks (by XiuShen Wei)

From deepai.org

Learning Rate Dropout DeepAI

From www.researchgate.net

Description and relevance of dropout rate. Appropriate Appropriate

From www.frontiersin.org

Frontiers Dropout in Neural Networks Simulates the Paradoxical

From www.mdpi.com

Algorithms Free FullText Modified Convolutional Neural Network

From www.ai2news.com

Adaptive dropout for training deep neural networks AI牛丝

From api.deepai.org

On Dropout, Overfitting, and Interaction Effects in Deep Neural

From analyticsindiamag.com

A Complete Understanding of Dense Layers in Neural Networks

From programmathically.com

Dropout Regularization in Neural Networks How it Works and When to Use

From www.youtube.com

Tutorial 9 Drop Out Layers in Multi Neural Network YouTube

From www.baeldung.com

How ReLU and Dropout Layers Work in CNNs Baeldung on Computer Science

From www.frontiersin.org

Frontiers Dropout in Neural Networks Simulates the Paradoxical

From www.researchgate.net

Dropout rate learning algorithm. Download Scientific Diagram

From www.researchgate.net

Effect of dropout rate on prediction results Download Scientific Diagram

From cdanielaam.medium.com

Dropout Layer Explained in the Context of CNN by Carla Martins Medium

From www.researchgate.net

(PDF) Dropout Rate Prediction of Massive Open Online Courses Based on

From aashay96.medium.com

Experimentation with Variational Dropout Do exist inside a

From www.frontiersin.org

Frontiers Dropout in Neural Networks Simulates the Paradoxical

From medium.com

Understanding Dropout in Deep Neural Networks by Venkata Sasank

From towardsai.net

Introduction to Neural Networks and Their Key Elements… Towards AI

From dataaspirant.com

Deep learning dropout

From deeplizard.com

Dropout Regularization for Neural Networks Deep Learning Dictionary

From evbn.org

Dropout Regularization in Deep Learning Analytics Vidhya EUVietnam

From www.researchgate.net

(PDF) Appropriateness of Dropout Layers and Allocation of Their 0.5

From learnopencv.com

Implementing a CNN in TensorFlow & Keras

From www.linkedin.com

How to Tune a Neural Network's Dropout Rate

From shichaoji.com

tensorflow neural network dropout decay learning rate Data Science

From lassehansen.me

Neural Networks step by step Lasse Hansen

From www.analyticsvidhya.com

Tuning the Hyperparameters and Layers of Neural Network Deep Learning

From www.analyticsvidhya.com

Evolution and Concepts Of Neural Networks Deep Learning

From www.researchgate.net

Visualization of neural network loss function rate (Truncate = 150

From www.researchgate.net

The Recurrent Neural Network model with possible dropout locations

From www.researchgate.net

The comparison of different dropout rate hyperparameters for each