ML Distillation

In machine learning, knowledge distillation is a technique for transferring the knowledge from a large, unwieldy model or set of models (often called the teacher model) to a single smaller model (the student model) that can be practically deployed. Large models are often too computationally expensive to run in production. Distillation produces smaller, and sometimes even more efficient, models that retain much of the performance of their larger counterparts, which helps bridge the gap between accuracy and deployability. Hinton, Vinyals, and Dean (2015) report surprising results on MNIST. They also show that distilling an ensemble into a single model can significantly improve the acoustic model of a heavily used commercial system.
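
The code below is a minimal sketch of the teacher/student idea in plain Python. It assumes the common temperature-scaled soft-target formulation associated with Hinton et al.:

- Both models' logits are divided by a temperature T before the softmax.
- A KL-divergence term pushes the student toward the teacher's softened distribution.
- That term is mixed with ordinary cross-entropy on the true label.

The function names and the defaults `temperature=4.0` and `alpha=0.5` are hypothetical, illustrative choices, not recommended settings.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Log-probabilities of softmax(logits / temperature), computed stably."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max so exp() cannot overflow
    log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_norm for s in scaled]


def softmax_with_temperature(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Class probabilities; a higher temperature gives a softer distribution."""
    return [math.exp(lp) for lp in log_softmax(logits, temperature)]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 4.0,  # hypothetical illustrative value
    alpha: float = 0.5,        # hypothetical weight on the soft-target term
) -> float:
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term."""
    # Soft targets: KL(teacher || student) on temperature-softened distributions.
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student_soft = log_softmax(student_logits, temperature)
    soft_loss = sum(
        math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_teacher, log_p_student_soft)
    )
    # Hard targets: ordinary cross-entropy against the true label at T = 1.
    hard_loss = -log_softmax(student_logits, 1.0)[true_label]
    # Scaling by T**2 keeps the soft-target gradients on a comparable scale
    # as the temperature changes, as suggested by Hinton et al.
    return alpha * temperature ** 2 * soft_loss + (1.0 - alpha) * hard_loss


teacher = [5.0, 2.0, 0.0]  # made-up logits for a 3-class example
student = [2.0, 1.5, 0.5]
print(softmax_with_temperature(teacher, 1.0))  # sharp distribution
print(softmax_with_temperature(teacher, 4.0))  # softened distribution
print(distillation_loss(student, teacher, true_label=0))
```

In a real training loop, the same computation would run on batched tensors in a framework with automatic differentiation. The teacher is kept frozen, and only the student's parameters are updated to minimize this loss.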

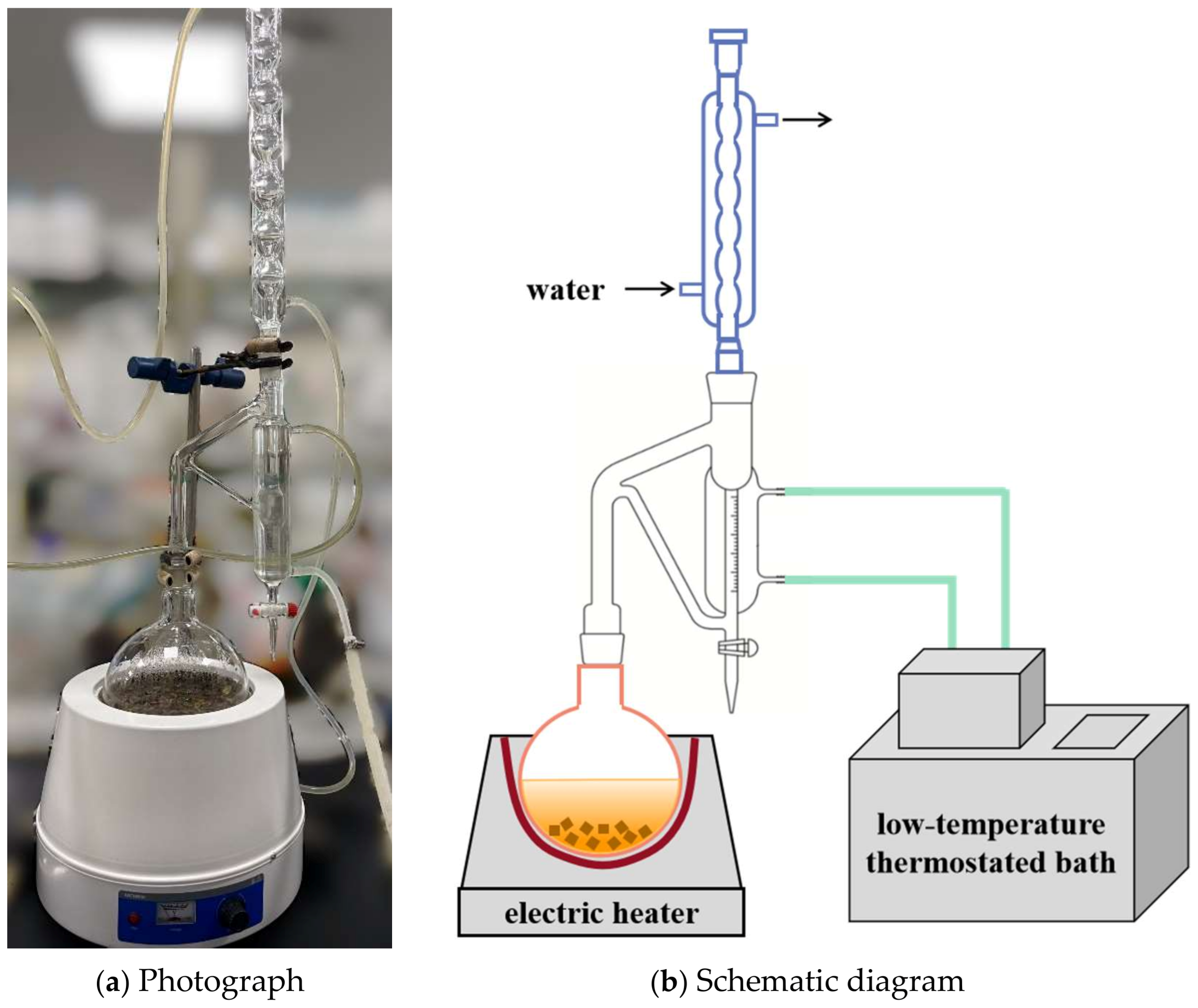

from www.mdpi.com

By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used.

Separations Free FullText Optimization of Steam Distillation

Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model).

From www.ebay.co.uk

500/1000ml Lab Distilling Apparatus Round Flask with Coil Glass Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. We achieve some surprising results on mnist and we show that we can significantly improve. Ml Distillation.

From www.shutterstock.com

diagramme de distillation simple en chimie image vectorielle de stock Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation is a technique that enables. Ml Distillation.

From www.slideshare.net

Distillation Ml Distillation We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger. Ml Distillation.

From fr.aliexpress.com

Appareil De DistillationAchetez des lots à Petit Prix Appareil De Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. By enabling smaller and sometimes even more efficient models that. Ml Distillation.

From www.stanhope-seta.co.uk

Distillation 500 ml Flask Heater StanhopeSeta Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation is a technique that enables. Ml Distillation.

From www.laborxing.com

Solvent distillation drying apparatus, capacity 500 to 2.000 ml Laborxing Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of. Ml Distillation.

From www.vecteezy.com

Simple distillation model in chemistry laboratory 2399309 Vector Art at Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model. Ml Distillation.

From labsuppliesusa.com

250ml Distilling Flask, Distillation Flask, with Side arm KLM Bio Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. By enabling smaller and sometimes even more efficient models that. Ml Distillation.

From www.indiamart.com

MAYALAB Laboratory Testing Products Glass Distillation Apparatus, For Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a. Ml Distillation.

From www.ubuy.za.com

Buy 1000ml Essential Oil Distillation Apparatus with Graham Condenser Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a. Ml Distillation.

From www.aliexpress.com

15pcs New 500ml Lab Distillation Apparatus Essential Oil Pure Water Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation is a technique that enables. Ml Distillation.

From www.aliexpress.com

1000ml,24/40,Distillation Apparatus,Vacuum Distill Kit,Vigreux Column Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the. Ml Distillation.

From www.stanhope-seta.co.uk

Distillation 500 ml Flask Heater (for up to 3 flasks) StanhopeSeta Ml Distillation By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist. Ml Distillation.

From www.stanhope-seta.co.uk

ASTM D86 Distillation Kit StanhopeSeta Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. In machine learning, distillation is a technique for transferring knowledge from a large, complex model. Ml Distillation.

From pubs.acs.org

Lesson Learned from a Fire during Distillation Choose the Appropriate Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. Knowledge distillation is a technique that enables knowledge transfer from. Ml Distillation.

From www.indiamart.com

Nikam Distillation Unit, Capacity From 100 Ml To 400 Liter at best Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. In machine learning, distillation is a technique for transferring knowledge from a. Ml Distillation.

From chinainstrument.en.made-in-china.com

Double Units Distillation Tester Distillation Equipment for Petroleum Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we. Ml Distillation.

From www.myxxgirl.com

Distillation Condenser My XXX Hot Girl Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we. Ml Distillation.

From www.pinterest.com

Simple Distillation Distillation, Chemistry lessons, Teaching chemistry Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to. Ml Distillation.

From acemachinerychina.en.made-in-china.com

Alcohol Distiller 100L 200L 300L Tank with 4" 6" 8" Copper/Stainless Ml Distillation We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without. Ml Distillation.

From www.aliexpress.com

Laboratory distillation unit, purification device 250ml Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to. Ml Distillation.

From shop.wf-education.com

Distillation Flask 50Ml Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). We achieve some surprising results on mnist and we show that we. Ml Distillation.

From supertekglassware.com

Vacuum Distillation Ml Distillation We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation refers to the process of transferring the knowledge from a large. Ml Distillation.

From www.chemicals.co.uk

Distillation Of A Product From A Reaction The Chemistry Blog Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. In machine learning, distillation is a technique for transferring knowledge from a large, complex model. Ml Distillation.

From www.premierlabsupply.com

10mL Distillation Flask for ISL® PMD 100 PREMIER Lab Supply Ml Distillation By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve. Ml Distillation.

From www.merchantnavydecoded.com

Distillation Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we. Ml Distillation.

From tillescenter.org

Precision 14/20 10 mL Dean Stark Distillation Apparatus tillescenter Ml Distillation We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without. Ml Distillation.

From tice.ac-montpellier.fr

LA DISTILLATION SIMPLE Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the. Ml Distillation.

From chem.libretexts.org

1A.3 Classifying Matter Chemistry LibreTexts Ml Distillation By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. We achieve some surprising results on mnist and we show that we can significantly improve. Ml Distillation.

From www.stanhope-seta.co.uk

Setastill Distillation StanhopeSeta Ml Distillation Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. In machine learning, distillation is a technique. Ml Distillation.

From www.ecrater.com

Premium 1000ml 24/40 distillation kit with stands & heating mantle Ml Distillation Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without losing validity. Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. We achieve some surprising results on mnist and we show that we. Ml Distillation.

From www.vernier.com

Fractional Distillation > Experiment 8 from Chemistry with Vernier Ml Distillation We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. Knowledge distillation refers to the process of transferring the knowledge from a large. Ml Distillation.

From www.mdpi.com

Separations Free FullText Optimization of Steam Distillation Ml Distillation By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. Knowledge distillation refers to the process of transferring the knowledge from a large. Ml Distillation.

From www.vevor.fr

VEVOR équipement Expérimental de Distillation 500ML, Laboratoire Ml Distillation In machine learning, distillation is a technique for transferring knowledge from a large, complex model (often called the teacher model). Knowledge distillation refers to the process of transferring the knowledge from a large unwieldy model or set of models to a single smaller model that can be practically. Knowledge distillation is a technique that enables knowledge transfer from large, computationally. Ml Distillation.

From www.stanhope-seta.co.uk

Straight Bore Neck Distillation Flask 125 ml (Pack of 5) StanhopeSeta Ml Distillation By enabling smaller and sometimes even more efficient models that retain much of the performance of their larger counterparts, knowledge distillation helps bridge the gap. We achieve some surprising results on mnist and we show that we can significantly improve the acoustic model of a heavily used. In machine learning, distillation is a technique for transferring knowledge from a large,. Ml Distillation.