Torch.distributed.nn.jit.instantiator

- In addition to wrapping the model with DataParallel, we …
- The distributed package included in PyTorch (i.e., torch.distributed) enables researchers and practitioners to easily parallelize their …
- Based on a quick look, what I think is happening is that when TORCH_DISTRIBUTED_DEBUG is set to DETAIL, we create a wrapper PG (used to validate …
- I suspect the fix is torch.cuda.init() or a version thereof.
- / distributed / nn / jit / instantiator.py: #!/usr/bin/python3 import importlib import logging import os import sys import tempfile import …
- torch.distributed.device_mesh.init_device_mesh(device_type, mesh_shape, *, mesh_dim_names=None): initializes a DeviceMesh based on device_type, …

From blog.csdn.net

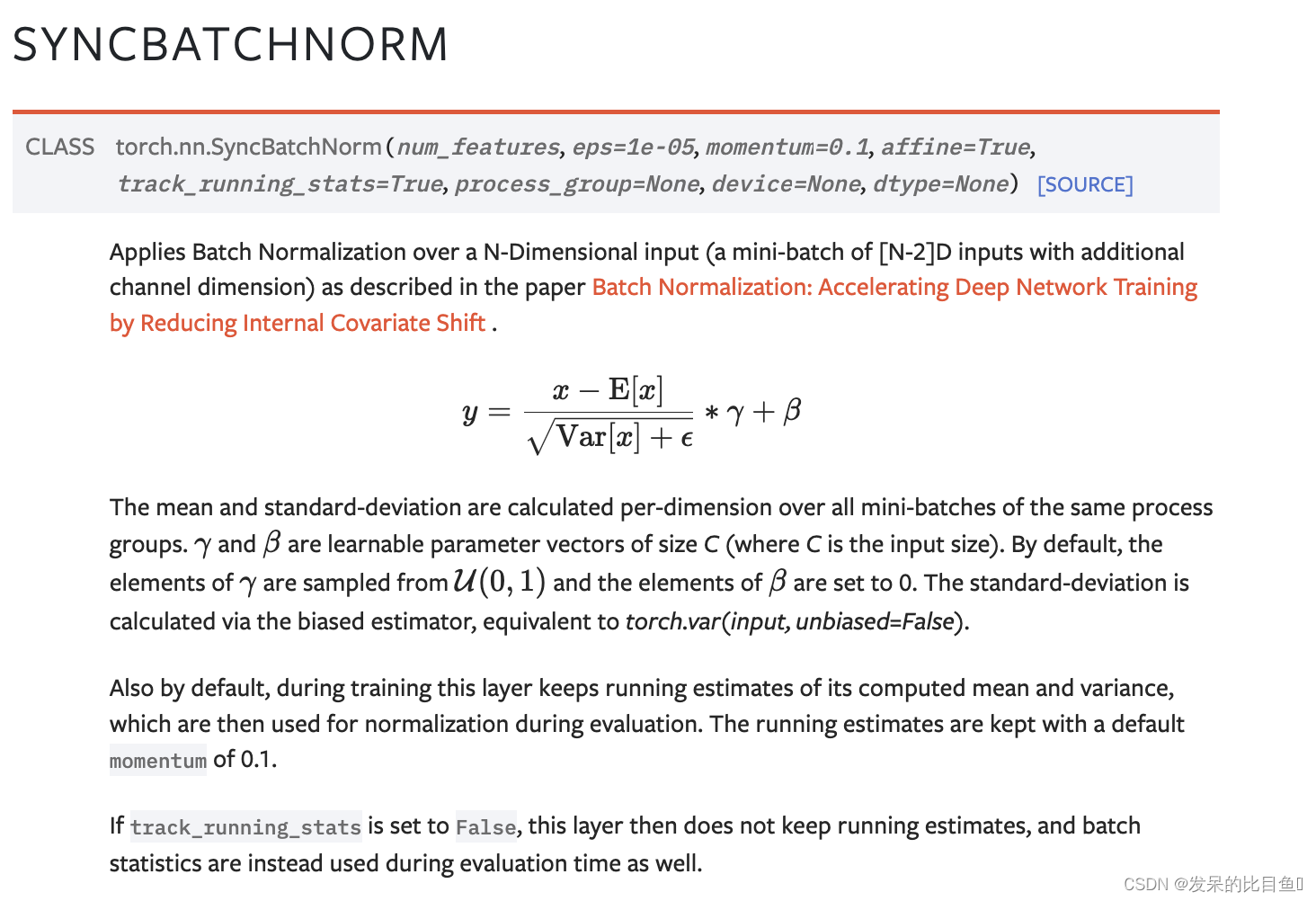

PyTorch for Beginners series: Torch.nn API Normalization Layers (7)_lazybatchnorm · CSDN blog

From github.com

torch.jit.trace with pack_padded_sequence cannot do dynamic batch

From github.com

torch.jit.script failed to compile nn.MultiheadAttention when …

From github.com

torch.distributed.nn.all_reduce incorrectly scales the gradient · Issue …

From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Normalization Layers (7)_lazybatchnorm · CSDN blog

From github.com

jit tracing error for nn.Sequential with nn.Conv2d in torch 1.1.0

From github.com

[JIT] support list of nn.Module in torchscript · Issue 36061 · pytorch

From aitechtogether.com

[Deep Learning] Multi-GPU training: single-machine multi-GPU methods explained (torch.nn.DataParallel, torch.distributed) · AI技术聚合

From github.com

torch.save does not work if nn.Module has partial JIT. · Issue 15116

From github.com

torch.nn.utils.clip_grad_norm_ super slow with PyTorch distributed

From blog.csdn.net

TorchScript (converting dynamic graphs to static graphs) (model deployment) (jit) (torch.jit.trace) (torch.jit.script …

From www.educba.com

PyTorch JIT Script and Modules of PyTorch JIT with Example

From github.com

[jit] Problem saving nn.Module as a TorchScript module (DLRM model …

From github.com

[JIT] Support torch.distributions.utils.broadcast_all() · Issue 10041

From zhuanlan.zhihu.com

Introduction to Torch DDP · 知乎

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples · PyTorch Tutorial

From aeyoo.net

Introduction to the PyTorch Module · TiuVe

From blog.csdn.net

[PyTorch] Connecting PyCharm to a remote server to debug torch.distributed.launch distributed programs_torch

From blog.csdn.net

Why torch.jit.load(model_path) fails · CSDN blog

From github.com

torch.distributed.init_process_group setting variables · Issue 13

From www.educba.com

torch.nn Module: Modules and Classes in torch.nn Module with Examples

From github.com

torch.jit.load support specifying a target device. · Issue 775

From github.com

Write some torch.distributed.nn.* tests for the new dispatcher passable …

From www.researchgate.net

Loop-level representation for torch.nn.Linear(32, 32) through …

From github.com

[JIT] nn.Sequential of nn.Module with input type List[torch.Tensor …

From github.com

[jit] support for torch.distributed primitives · Issue 41353 · pytorch

From github.com

torch.nn.DataParallel or torch.distributed.run · Issue 1814

From zhuanlan.zhihu.com

PyTorch in Depth 1: torch.nn.Module methods and source code · 知乎

From blog.csdn.net

TorchScript (converting dynamic graphs to static graphs) (model deployment) (jit) (torch.jit.trace) (torch.jit.script …

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples · PyTorch Tutorial

From github.com

xla/test_torch_distributed_xla_backend.py at master · pytorch/xla · GitHub

From github.com

Model training stops after "INFOtorch.nn.parallel.distributedReducer …

From github.com

torch.jit.trace fails when the model has the function nn.functional …

From data-flair.training

Torch.nn in PyTorch · DataFlair

From github.com

Adding a new kwarg to a torch.nn.functional function breaks FC for JIT

From github.com

how to use torch.jit.script with toch.nn.DataParallel · Issue 67438