Get List Of Folders In S3 Bucket PySpark

In this tutorial, we are going to learn a few ways to list files in an S3 bucket using Python, boto3, and the `list_objects_v2` function. If you want to list the files/objects inside a specific folder within an S3 bucket, call `list_objects_v2` with the `Prefix` parameter.

A typical question: I have an S3 bucket in which I store data files that are to be processed by my PySpark code, and I'm trying to generate a list of all S3 files in a bucket/folder. There are usually on the order of millions of files in the folder. Is it possible to list all of the files in a given S3 path (ex: ...)? The folder I want to access is: ...

One quoted answer calls a helper whose definition is not shown, `fis = list_files_with_hdfs(spark, "s3://a_bucket/")`, and builds a DataFrame from the result with `df = spark.createDataFrame(fis)`; the rest of that snippet (`df = (df. ...`) is cut off.

You can also list files on a distributed file system (DBFS, S3 or HDFS) using `%fs` commands. For this example, we are using data files stored in DBFS at ...

To read from S3 we need the path to the file saved in S3. To get the path, open the S3 bucket we created. Select the file, and click on ...
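
The `list_objects_v2` approach with a prefix can be sketched roughly as follows. This is a minimal sketch, not the original tutorial's code: the helper names `iter_folders` and `iter_keys` are hypothetical, and the bucket name `a_bucket` and prefix `data/` are placeholders. It assumes a boto3 S3 client is passed in. Adding `Delimiter="/"` makes S3 group keys into `CommonPrefixes`, which is the closest thing S3 has to "folders". A paginator is used because a single call returns at most 1,000 keys.

```python
from typing import Any, Iterator


def iter_folders(client: Any, bucket: str, prefix: str = "") -> Iterator[str]:
    """Yield the immediate "sub-folders" (common prefixes) under `prefix`."""
    paginator = client.get_paginator("list_objects_v2")
    # Delimiter="/" asks S3 to roll deeper keys up into CommonPrefixes.
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix, Delimiter="/"):
        for entry in page.get("CommonPrefixes", []):
            yield entry["Prefix"]


def iter_keys(client: Any, bucket: str, prefix: str = "") -> Iterator[str]:
    """Yield every object key under `prefix`, following pagination."""
    paginator = client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page that holds no objects.
        for obj in page.get("Contents", []):
            yield obj["Key"]


# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   for folder in iter_folders(s3, "a_bucket", prefix="data/"):
#       print(folder)
```

Both helpers are generators, so with millions of objects the keys are streamed page by page instead of being held in one list.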

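The source does not show the `list_files_with_hdfs` helper from the quoted snippet. One plausible way to write something similar is to go through the Hadoop `FileSystem` API that Spark exposes over its JVM gateway. The sketch below is an assumption, not the original author's code: the name `list_path_entries` is hypothetical, it relies on the internal attributes `spark._jvm` and `spark._jsc`, and it assumes the cluster has an S3 connector configured (on plain Apache Spark the scheme is usually `s3a://` rather than `s3://`).

```python
from typing import Any, Dict, List


def list_path_entries(spark: Any, path: str) -> List[Dict[str, Any]]:
    """List the direct children of `path` through the Hadoop FileSystem API."""
    hadoop_conf = spark._jsc.hadoopConfiguration()
    jpath = spark._jvm.org.apache.hadoop.fs.Path(path)
    # Resolve the filesystem implementation from the path's scheme.
    fs = jpath.getFileSystem(hadoop_conf)
    rows = []
    for status in fs.listStatus(jpath):
        rows.append(
            {
                "path": status.getPath().toString(),
                "is_dir": bool(status.isDirectory()),
                "size": int(status.getLen()),
            }
        )
    return rows


# Hypothetical usage on a live SparkSession:
#   fis = list_path_entries(spark, "s3://a_bucket/")
#   df = spark.createDataFrame(fis)
#   df.filter("is_dir").show(truncate=False)  # only the "folders"
```

The listing runs on the driver and is not recursive. For folders holding millions of files, the paginated boto3 listing may be a better fit than collecting every status into one list.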
from code2care.org

Get the total size and number of objects of a AWS S3 bucket and folders

From www.geeksforgeeks.org

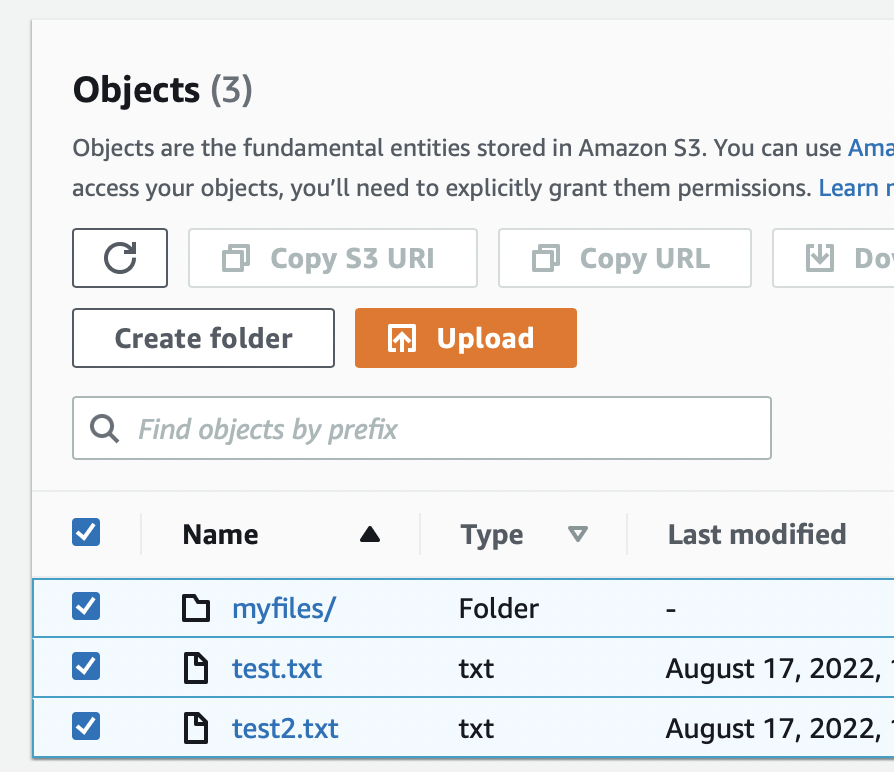

How To Store Data in a S3 Bucket?

From fyottqvbu.blob.core.windows.net

How To Get A S3 Bucket Url at Henry Shepler blog

From www.hava.io

Amazon S3 Fundamentals

From github.com

GitHub mehroosali/s3redshiftbatchetlpipeline Built functional

From klavmkifv.blob.core.windows.net

Create Folder In S3 Bucket Terminal at Johnny Lemos blog

From campolden.org

Get All File Names In S3 Bucket Python Templates Sample Printables

From akshaykotawar.hashnode.dev

How Efficiently Download Folders with Folder Structure from Amazon S3

From docs.cloudeka.id

Upload files and folders in S3 Cloudeka

From fyopsvtos.blob.core.windows.net

Create Folder In S3 Bucket Nodejs at Donald Villanueva blog

From exormduoq.blob.core.windows.net

Get List Of Files In S3 Bucket Python at Richard Wiggins blog

From learn.microsoft.com

Want to pull files from nested S3 bucket folders and want to save them

From github.com

GitHub FoodyFood/pythons3bucketexplorer Explore an S3 bucket and

From ruslanmv.com

How to read and write files from S3 bucket with PySpark in a Docker

From www.linuxconsultant.org

Downloading Folders From AWS S3 Bucket cp vs sync Linux Consultant

From exoopimvu.blob.core.windows.net

List Of Files In S3 Bucket at Albert Stone blog

From exokfdeir.blob.core.windows.net

List Objects In S3 Bucket Folder at Jennifer Hernandez blog

From campolden.org

Pyspark List All Files In S3 Directory Templates Sample Printables

From campolden.org

Delete Folder In S3 Bucket Aws Cli Templates Sample Printables

From www.youtube.com

List files and folders of AWS S3 bucket using prefix & delimiter YouTube

From exodpgkwu.blob.core.windows.net

Get List Of Files In S3 Bucket Java at Norma Christensen blog

From www.radishlogic.com

How to upload a file to S3 Bucket using boto3 and Python Radish Logic

From www.twilio.com

How to Store and Display Media Files Using Python and Amazon S3 Buckets

From www.radishlogic.com

How to download all files in an S3 Bucket using AWS CLI Radish Logic

From stackoverflow.com

amazon web services How to get object URL of all image files from

From techblogs.42gears.com

Listing Objects in S3 Bucket using ASP Core Part3 Tech Blogs

From jotelulu.com

S3 Buckets Quick Guide

From plainenglish.io

AWS Lambda Get a List of Folders in the S3 Bucket