How Does BERT Encoder Work

What is BERT and how does it work? BERT stands for Bidirectional Encoder Representations from Transformers and is a language representation model by Google. Context-dependent word embeddings work well for many NLP tasks, as shown in the ELMo (Embeddings from Language Models) paper. BERT takes a different approach: it considers all the words of the input sentence simultaneously and then uses an attention mechanism to develop a contextual meaning of the words. Let's take a look at how BERT works, covering the technology behind the model, how it's trained, and how it processes data.
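To make the idea of "considering all the words simultaneously" concrete, here is a minimal sketch of unmasked scaled dot-product self-attention in plain Python. It is an illustration under loud assumptions, not BERT's actual implementation: the three tokens and their 2-dimensional embeddings are invented, and the learned query/key/value projections, multiple heads, position embeddings, and stacked layers of the real model are all left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Unmasked scaled dot-product self-attention.

    Simplification (assumption): queries, keys, and values are the input
    vectors themselves; a real encoder applies learned projections first.
    """
    dim = len(vectors[0])
    scale = math.sqrt(dim)
    all_weights, outputs = [], []
    for query in vectors:
        # Score the current token against EVERY token, left and right alike.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # The contextual vector is a weighted mix of all token vectors.
        mixed = [sum(w * vec[d] for w, vec in zip(weights, vectors)) for d in range(dim)]
        outputs.append(mixed)
    return all_weights, outputs


# Hypothetical toy input: made-up tokens and embeddings, for illustration only.
tokens = ["river", "bank", "flooded"]
vectors = [[1.0, 0.2], [0.6, 0.9], [0.9, 0.1]]

weights, contextual = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how much one token draws on every token in the sentence, including those to its right. No mask hides later positions, which is the sense in which the encoder is bidirectional.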

From www.mdpi.com

Symmetry | Free Full-Text | Contextual Embeddings-Based Page

From www.researchgate.net

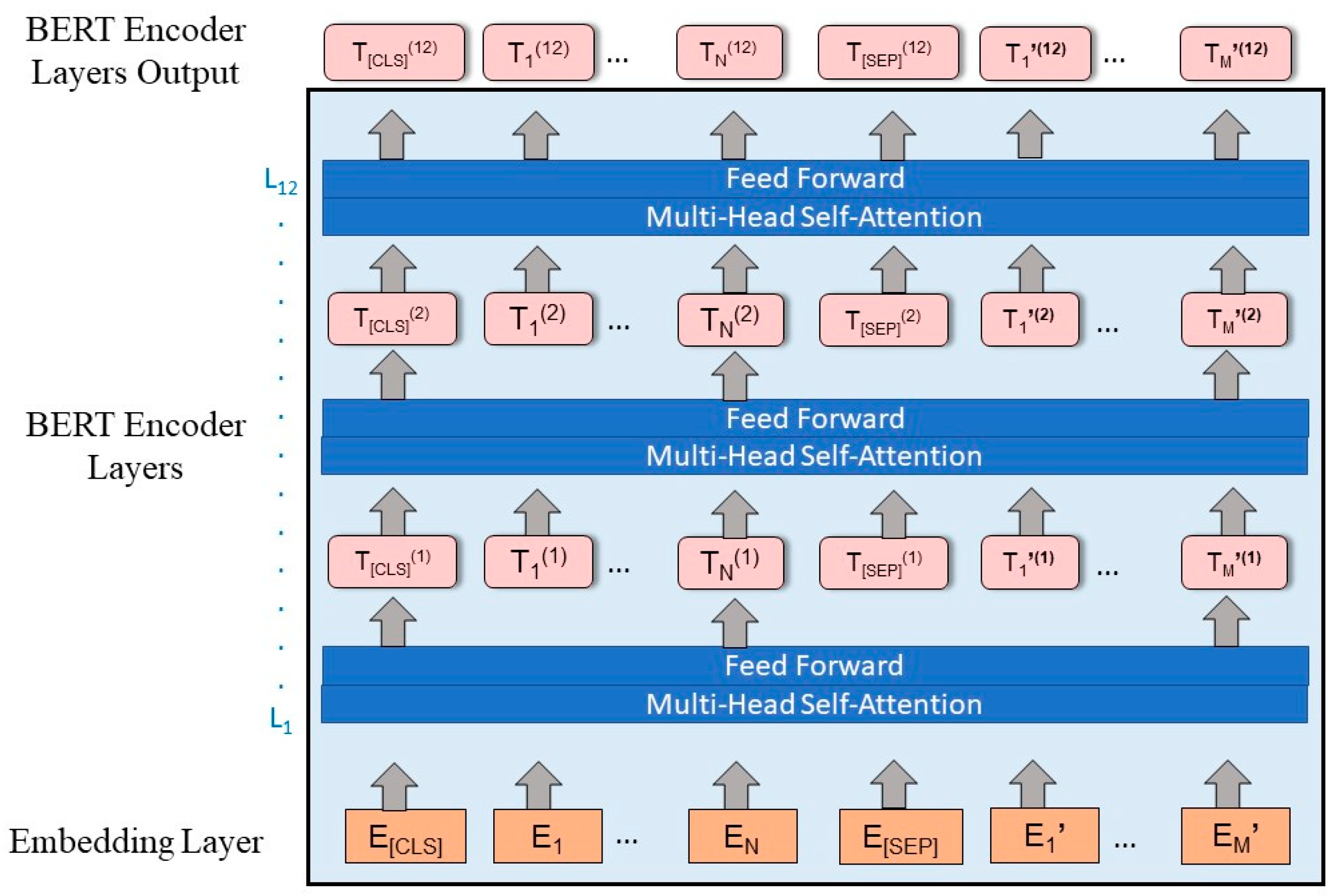

BERT Encoder N × Transformer Blocks

From ekbanaml.github.io

BERT (Bidirectional Encoder Representation From Transformers) EKbana

From www.turing.com

How BERT NLP Optimization Model Works

From www.exxactcorp.com

BERT Transformers How Do They Work? Exxact Blog

From blog.csdn.net

BERT series: principles of BERT (Bidirectional Encoder Representations from Transformers)

From huggingface.co

Leveraging Pretrained Language Model Checkpoints for Encoder-Decoder

From arize.com

Unleashing the Power of BERT: How the Transformer Model Revolutionized NLP

From www.researchgate.net

Dialogue Generator. Bert2Transformer’s encoder is Bert base, and the

From medium.com

How Does Bert Model Work? BERT stands for Bidirectional Encoder… by

From programmer.ink

[actual combat] it's time to thoroughly understand the BERT model (don

From humboldt-wi.github.io

Bidirectional Encoder Representations from Transformers (BERT)

From zhuanlan.zhihu.com

[NLP] BERT Bidirectional Encoder Representations from Transformers 知乎

From neptune.ai

10 Things You Need to Know About BERT and the Transformer Architecture

From exoxoibxb.blob.core.windows.net

What Is Bert Transformer at Dara Lowery blog

From wandb.ai

An Introduction to BERT And How To Use It BERT_Sentiment_Analysis

From www.geeksforgeeks.org

Explanation of BERT Model NLP

From www.researchgate.net

Overview of proposed framework. On the left BERT encoder in green

From medium.com

BERT And Its Model Variants. BERT BERT (Bidirectional Encoder… by

From quantpedia.com

BERT Model Bidirectional Encoder Representations from Transformers

From learnopencv.com

BERT Bidirectional Encoder Representations from Transformers

From resources.experfy.com

BiEncoders BERT Model Via Transferring Knowledge CrossEncoders

From towardsdatascience.com

Understanding BERT (Bidirectional Encoder Representations from

From www.youtube.com

Google BERT Architecture Explained 1/3 (BERT, Seq2Seq, Encoder

From pysnacks.com

A Tutorial on using BERT for Text Classification w Fine Tuning

From medium.com

Dissecting BERT Part 1: The Encoder, by Miguel Romero Calvo

From www.researchgate.net

(PDF) Incorporating BERT into Neural Machine Translation

From www.researchgate.net

BERT-based transformer encoder architecture

From yngie-c.github.io

BERT (Bidirectional Encoder Representations from Transformer) · Data