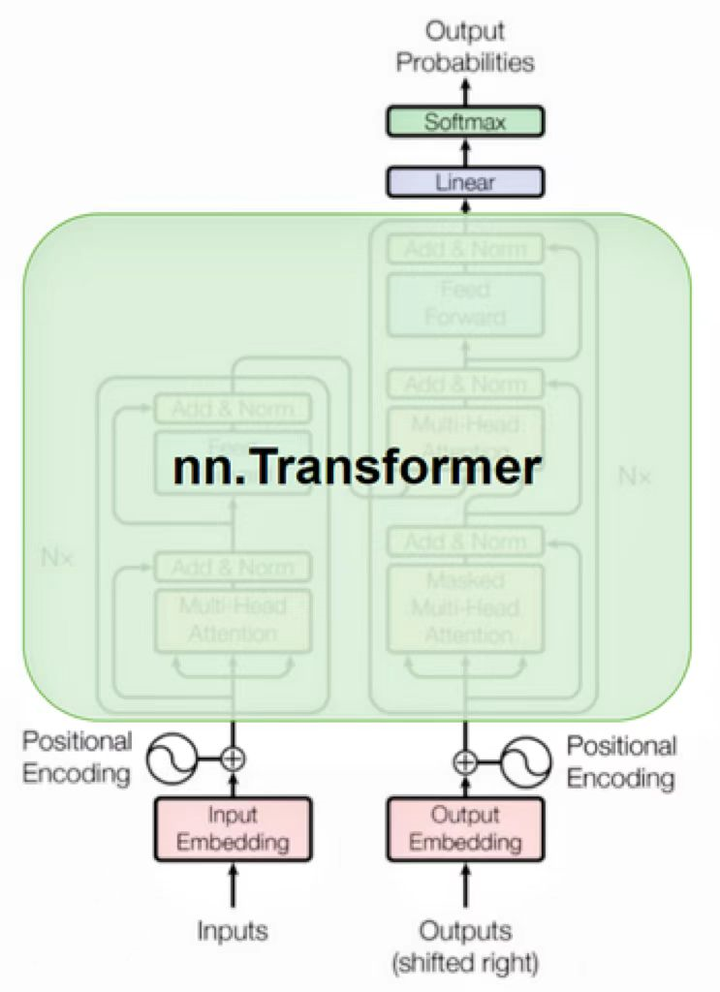

Torch Nn Transformer Github. Because the Transformer architecture is so popular, PyTorch already ships a module for it: `nn.Transformer`, constructed for example as `transformer_model = nn.Transformer(nhead=16, num_encoder_layers=12)`. `TransformerEncoder` is a stack of N encoder layers. The resources collected below include a notebook whose first part implements the Transformer architecture by hand, and a PyTorch `nn.Transformer` demo that predicts odd numbers from an input such as [2, 4, 6]. A related API from Transformer Engine is `class transformer_engine.pytorch.Linear(in_features, out_features, bias=True, **kwargs)`.
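As a minimal sketch of the two modules named above, the snippet below builds `nn.Transformer` with the constructor arguments quoted in the text and runs one forward pass, then stacks encoder layers with `nn.TransformerEncoder`. The tensor shapes, the batch size of 2, and the choice of two encoder layers in the second part are assumptions made for illustration, since the quoted example is cut off after `src =`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Constructor arguments as quoted in the text; d_model keeps its default of 512.
transformer_model = nn.Transformer(nhead=16, num_encoder_layers=12)

# Assumed shapes: with the default batch_first=False, inputs are laid out as
# (sequence length, batch size, d_model).
src = torch.rand(10, 2, 512)
tgt = torch.rand(20, 2, 512)

with torch.no_grad():
    out = transformer_model(src, tgt)
out_shape = tuple(out.shape)  # the output has the same shape as tgt

# TransformerEncoder stacks N copies of a single encoder layer.
# N = 2 and nhead = 8 are illustrative choices, not values from the text.
encoder_layer = nn.TransformerEncoderLayer(d_model=512, nhead=8)
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)

with torch.no_grad():
    memory = encoder(src)
memory_shape = tuple(memory.shape)  # the encoder preserves the input shape

print(out_shape, memory_shape)
```

The full model needs both a source and a target sequence, while the encoder stack alone maps a sequence to an output of the same shape, which is why encoder-only setups often use `nn.TransformerEncoder` directly.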

From www.zhihu.com


How do you use nn.Transformer? (Zhihu)


From github.com

Issues · hkproj/pytorchtransformer · GitHub

From github.com

pytorch/transformer.py at master · pytorch/pytorch · GitHub

From kjgggggg.github.io

Transformer pytorch kjg's blog

From github.com

torch_Vision_Transformer/model.py at master · Runist/torch_Vision

From github.com

pytorchtransformer/electra.py at main · nawnoes/pytorchtransformer

From blog.csdn.net

A detailed guide to using nn.Transformer in PyTorch, with a black-box explanation of the Transformer (CSDN blog)

From blog.csdn.net

Torch paper reproduction: Vision Transformer (ViT) (CSDN blog)

From github.com

How to use torch.nn.functional.normalize in torch2trt · Issue 60

From github.com

`is_causal` parameter in torch.nn.TransformerEncoderLayer.forward does

From github.com

torch.nn.functional.pad generates ONNX without explicit

From github.com

[feature request] torch.nn.Conv3d on tensors having more than 5

From github.com

GitHub sooftware/conformer [Unofficial] PyTorch implementation of

From github.com

missing docstrings in torch.nn.intrinsic fused functions · Issue 26899

From github.com

torch.nn.Transformer cannot be compiled · Issue 102331 · pytorch

From github.com

attentionisallyouneed · GitHub Topics · GitHub

From github.com

torch.nn.ReplicationPad1d: The description of the exception information

From pytorch.org

Language Modeling with nn.Transformer and torchtext — PyTorch Tutorials

From github.com

StockPredictionusningTransformerNN/Stock_Prediction_usning

From github.com

torch.nn.functional.nll_loss behaves differently in two cases of cpu

From github.com

GitHub Jaredeco/ChatBotTransformerPytorch An implementation of

From github.com

ONNX export of torch.nn.Transformer still fails · Issue 110255

From github.com

torch.nn.transformer.py missing .pyi.in file. · Issue 27842 · pytorch

From blog.csdn.net

PyTorch for Beginners series: Torch.nn API Transformer Layers (9)_torch.nn

From zhuanlan.zhihu.com

Reading the torch.nn.Transformer source code in PyTorch (a top-down view) (Zhihu)

From www.zhihu.com

How do you use nn.Transformer? (Zhihu)

From github.com

torch.nn.Transformer cannot be compiled · Issue 102331 · pytorch

From github.com

[DTensor] parallelize_module failed with nn.Transformer and the

From github.com

Why aren't torch.functional.sigmoid and torch.nn.functional.relu

From zhuanlan.zhihu.com

Reading the torch.nn.Transformer source code in PyTorch (a top-down view) (Zhihu)

From github.com

How to use libtorch api torchnnparalleldata_parallel train on

From github.com

GitHub lsj2408/TransformerM [ICLR 2023] One Transformer Can

From github.com

RuntimeError torch.nn.functional.binary_cross_entropy and torch.nn

From github.com

TorchScript bug torch.nn.transformer gives inconsistent results after

From github.com

torch.nn.modules.module.ModuleAttributeError MaskRCNN 'Sequential

From github.com

Type mismatch error with torch.nn.functional.grid_sample() under AMP