Large Filter Model. In one part, they showed a CNN model for classifying humans and horses. 1) We need an effective means to train models with large … In this model, the first Conv2D layer had 16 filters, followed by two more Conv2D layers with … CNNs with such large filters are expensive to train and require a lot of data, which is the main reason why CNN architectures like GoogLeNet (AlexNet architecture) work … To facilitate such a study, several challenges need to be addressed: … Large language models (LLMs) have made remarkable strides in various tasks. View a PDF of the paper titled Large Language Models meet Collaborative Filtering: … Through extensive experiments on nine datasets across four IE tasks, we … In this work, we aim to provide a thorough answer to this question.
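The remark that CNNs with large filters are expensive to train can be made concrete by counting the weights in a single convolution layer. The sketch below is only an illustration: the 16 filters come from the text above, while the 3 input channels (an RGB image) and the kernel sizes are assumptions, and `conv2d_params` is a hypothetical helper, not part of any library.

```python
def conv2d_params(kernel_size, in_channels, out_channels, bias=True):
    """Count trainable parameters in one 2-D convolution layer.

    Each of the out_channels filters has kernel_size * kernel_size
    weights per input channel, plus one optional bias term.
    """
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


if __name__ == "__main__":
    # Assumed setup: RGB input (3 channels), 16 filters in the first layer.
    for k in (3, 5, 7, 11):
        print(f"{k}x{k} kernel: {conv2d_params(k, 3, 16)} parameters")
```

The weight count grows with the square of the kernel size, so under these assumptions an 11x11 first layer carries roughly thirteen times as many parameters as a 3x3 one.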

From www.onallcylinders.com


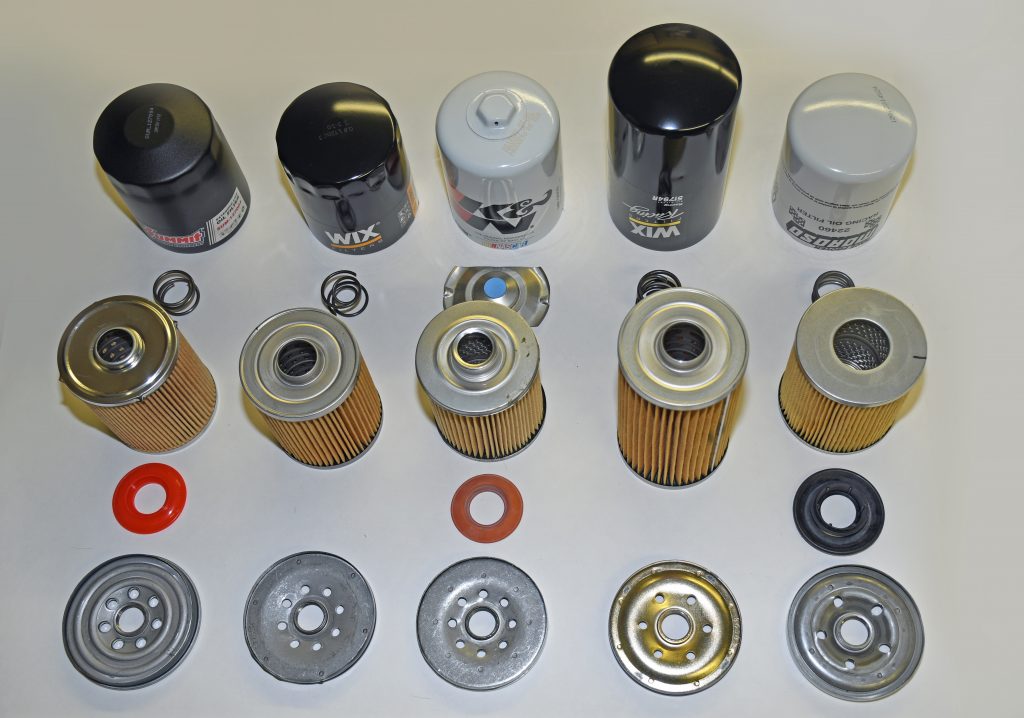

Oil Down A Look Inside Several Popular Oil Filter Models


From tillescenter.org

Blue/Black 1 in/out 20 Big Blue Filter Housing tillescenter Industrial

From www.youtube.com

Activated Carbon Filter Design & Working Principle of ACF Why it

From www.walmart.com

Brita Large Stream Filter as You Pour Plastic 10Cup Gray Water Filter

From www.asahi-spectra.com

Large filters Asahi Spectra USA Inc.

From www.walmart.com

PUR Classic Dispenser Water Filter, 30 Cup, DS1800Z, Blue/White

From www.slideserve.com

PPT Software Architecture Pipe and Filter Model PowerPoint

From www.amazon.com

DRINK KATY'S Large Coffee Filters (9.5 Inch x 4.5 Inch) 12

From homeoneusa.com

The 9 Best Large Water Filter With Spout Home One Life

From grabcad.com

Water Filter Model 3D CAD Model Library GrabCAD

From wholehousewaterfilters.com.au

Triple Big Blue Filter Housing 10 Inch • Whole House Water Filters

From blog.bitsrc.io

The Basics of Pipes and Filters Pattern Bits and Pieces

From www.amazon.com

Hayward Pro Series 24 Inch In Ground Pool Sand Filter

From www.youtube.com

water purifier filter working model for science project exhibition

From www.poolsuppliessuperstore.com

Hayward W3C4030 Swim Clear Large Capacity Cartridge Filter, 425 sq ft

From waterfilter.net.au

Best Whole House Water Filter Systems MDC Water Pty Ltd

From www.hydac.com

PLF2 Process filter, inline filter Filtration technology HYDAC

From grabcad.com

Free CAD Designs, Files & 3D Models The GrabCAD Community Library

From www.walmart.com

OEM LG Air Conditioner AC Filter Shipped With A2H243GA0, A3C363GA0

From www.clarencewaterfilters.com.au

Clarence Water Filters Australia Chlorine Town Large Twin Whole of

From www.northerntool.com

Milwaukee Large Wet/Dry Vacuum Foam Wet Filter, Model 49901990

From www.slideserve.com

PPT Attention PowerPoint Presentation, free download ID732864

From engineeringlearn.com

7 Types of Air Filters (Home) Pros, Cons and Sizes of Filters

From www.comsol.de

Using Data Filtering to Improve Model Performance COMSOL Blog

From howtofunda.com

water purification (filter) working model science project using paper

From www.researchgate.net

The comparison of three filter models Download Scientific Diagram

From www.petco.com

Tetra Whisper BioBag Large Aquarium Filter Cartridges 12ct

From hydrovos.com

10" Whole House Large Capacity Water Filter 5 Micron Hydrovos Water

From www.honeywellconsumerstore.com

Honeywell Full Height HEPA and PreFilter Kit Replacement Filters

From 5da95c99ae75e4bf.en.made-in-china.com

High Flow with Large Filter Cartridge UPVC Filter China Filter

From homedecorcreation.com

The 10 Best Pentek 10 Inch Big Blue Water Filter Housing Home Creation

From www.homedepot.ca

RIDGID Standard Filter for 18.9 L (5 Gal.) & Larger Wet Dry Vacuums (2

From www.amazon.com

JOWSET Jowset Replacement H13 HEPA Air Purifier Filter for

From www.filtermate.com.au

20" Filter Set (extra large) Filtermate