Disallow Robots.txt Wildcard

A robots.txt file tells search engines where they can and can't go on your site. It also controls how they can crawl allowed content. It does this through disallow and allow directives, which tell bots to either "disallow" or "allow" crawling of certain parts of the site.

There are three ways to allow all search engines to access everything on the site.

When everything except a single file should be blocked, the easy way is to put all files to be disallowed into a separate directory, say stuff, and leave the one file outside it.

A common question is how to mass-exclude certain URLs in a robots.txt file. A robots.txt that uses the * wildcard should do the job. How Googlebot handles such patterns can be tested in Google's robots.txt testing tool (Webmaster Tools > Blocked URLs).
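As a rough illustration of how wildcard rules can be evaluated, the sketch below matches a URL path against a list of allow and disallow patterns. It assumes Google-style matching: `*` matches any run of characters, a trailing `$` anchors the end of the path, the longest matching pattern wins, and an allow rule wins a tie. Other crawlers may behave differently. The function names and every path except the `stuff` directory are hypothetical, chosen only for the example.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the
    end of the path. Everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list) -> bool:
    """Return True if `path` may be crawled under `rules`.

    `rules` is a list of (directive, pattern) tuples, where directive
    is "allow" or "disallow". Assumed precedence: the longest matching
    pattern wins, and "allow" wins when lengths are equal.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if not pattern:  # an empty pattern matches nothing
            continue
        if pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len = len(pattern)
                allowed = is_allow
    return allowed


# Hypothetical rule set: block a directory, search URLs, and PDFs,
# but keep one file inside the blocked directory crawlable.
EXAMPLE_RULES = [
    ("disallow", "/stuff/"),
    ("disallow", "/*?s="),
    ("disallow", "/*.pdf$"),
    ("allow", "/stuff/public.html"),
]

if __name__ == "__main__":
    for candidate in ("/stuff/secret.html", "/stuff/public.html", "/blog/?s=robots"):
        print(candidate, is_allowed(candidate, EXAMPLE_RULES))
```

The allow rule for `/stuff/public.html` overrides the shorter `/stuff/` disallow only because it is the longer match. A crawler that applies rules in file order instead could reach a different result, so it is worth checking real rules in the search engine's own testing tool.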

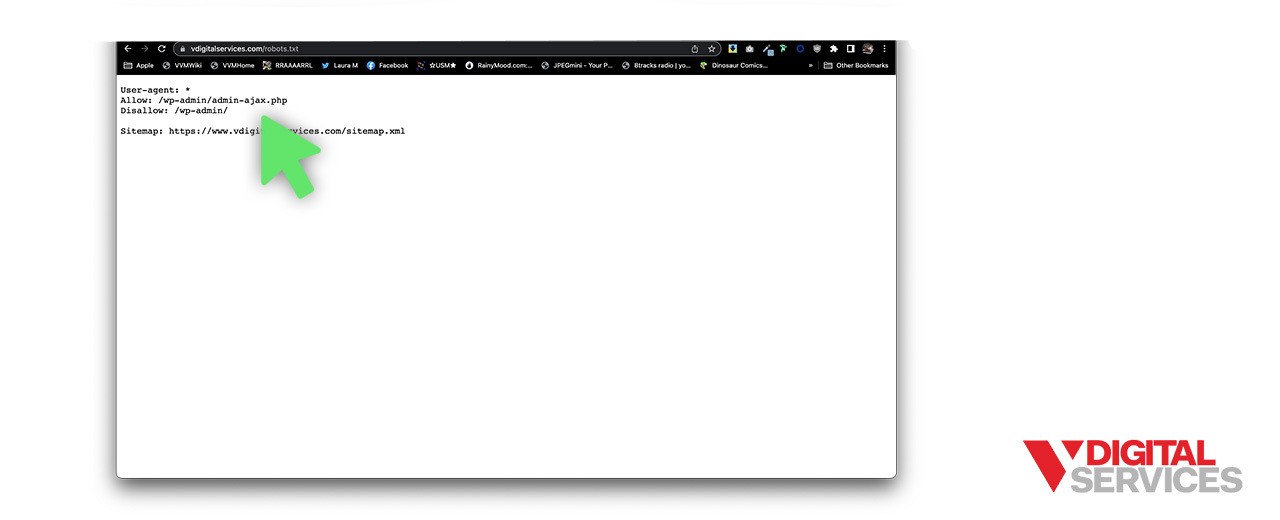

From www.vdigitalservices.com

Using Robots.txt to Disallow All or Allow All How to Guide
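The allow-all and disallow-all cases can be checked with Python's standard `urllib.robotparser`. This is a minimal sketch: the three allow-all variants shown here (an empty `Disallow:`, an explicit `Allow: /`, and an empty file) are commonly used forms and are an assumption about which three ways are meant. `can_fetch` is a hypothetical helper name, and `example.com` is a placeholder URL.

```python
from urllib.robotparser import RobotFileParser


def can_fetch(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Parse robots.txt text and report whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Assumed allow-all variants (not enumerated in the text above).
ALLOW_ALL_EMPTY_DISALLOW = "User-agent: *\nDisallow:\n"
ALLOW_ALL_EXPLICIT = "User-agent: *\nAllow: /\n"
ALLOW_ALL_EMPTY_FILE = ""

# Blocks every compliant crawler from the whole site.
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"

if __name__ == "__main__":
    url = "https://example.com/page.html"  # placeholder URL
    for name, text in [
        ("empty Disallow", ALLOW_ALL_EMPTY_DISALLOW),
        ("Allow: /", ALLOW_ALL_EXPLICIT),
        ("empty file", ALLOW_ALL_EMPTY_FILE),
        ("Disallow: /", DISALLOW_ALL),
    ]:
        print(name, can_fetch(text, url))
```

Note that `urllib.robotparser` does simple prefix matching and does not interpret `*` or `$` inside paths, so it suits these whole-site cases but not wildcard rules.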

From www.youtube.com

masters How can I use robots.txt to disallow subdomain only? (3

From stackoverflow.com

seo Can a robots.txt disallow use an asterisk for product id wildcard

From ignitevisibility.com

Robots.txt Disallow 2025 Guide for Marketers

From www.youtube.com

masters What does "Disallow /search" mean in robots.txt? (5

From velog.io

Robots.txt

From www.youtube.com

Robot.txt disallow \*?s= (3 Solutions!!) YouTube

From www.vdigitalservices.com

Using Robots.txt to Disallow All or Allow All How to Guide

From builtvisible.com

An SEO's Guide to Robots.txt, Wildcards, the XRobotsTag and Noindex

From www.youtube.com

Tutorial su robots.txt e disallow YouTube

From stackoverflow.com

wordpress Disallow all pagination pages in robots.txt Stack Overflow

From geoffkenyon.com

Robots.txt Wildcards How to Use Wildcards in Robots.txt Geoff Kenyon

From noaheakin.medium.com

A Brief Look At /robots.txt Files by Noah Eakin Medium

From www.semrush.com

A Complete Guide to Robots.txt & Why It Matters

From impactoseo.com

¿Cómo realizar un Disallow en mi Robots.txt

From www.youtube.com

Disallow root but not 4 subdirectories for robots.txt (2 Solutions

From www.youtube.com

masters Robots txt disallow on Blogger YouTube

From www.youtube.com

Should I disallow(robots.txt) archive/author pages with links already

From www.youtube.com

masters Robots.txt got changed to disallow crawling by accident

From www.evemilano.com

Disallow del robots.txt sui parametri degli URL

From es.slideshare.net

Robots.txt Disallow areas of your site

From www.tffn.net

How to Read Robots.txt A Comprehensive Guide The Enlightened Mindset

From www.reliablesoft.net

Robots.txt And SEO Easy Guide For Beginners

From www.wysiwygwebbuilder.com

robots.txt

From seohub.net.au

A Complete Guide to Robots.txt & Why It Matters

From www.youtube.com

masters What is robots.txt disallowing with Disallow / for

From www.edgeonline.com.au

What is Robots.txt An Exhaustive Guide to the Robots.txt File

From www.youtube.com

masters In robots.txt, why would there be "Allow /\*" below a

From www.firstpagedigital.sg

Guide To Robots.txt Disallow Command For SEO

From wp-educator.com

What is the Disallow Statement in Robots.txt? A Simple Explanation