Spark How To Check Number Of Partitions

Getting the number of partitions of a Spark DataFrame: in PySpark, you can call `df.rdd.getNumPartitions()` to find out the current number of partitions of a DataFrame. The same `getNumPartitions()` method is available on any RDD in Apache Spark. There are four ways to get the number of partitions of a Spark … When no partition count is given, Spark generally derives one from the parallelism available in the cluster (for example, the number of executors and their cores) so that each executor gets a fair share of the tasks. When repartitioning (for example, with `DataFrame.repartition`), `numPartitions` can be an int to specify the target number of partitions or a Column. If it is a Column, it will be used as … The `SHOW PARTITIONS` statement is used to list the partitions of a table; note that these are the partitions of a partitioned table, not the in-memory partitions of a DataFrame. An optional partition spec may be specified to return the … A related question is how to calculate the Spark partition size.
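As a minimal sketch of the points above (not a definitive recipe), the snippet below wraps `df.rdd.getNumPartitions()` in a small helper and adds a rough partition-count estimate from a data size. The helper names `count_partitions`, `estimate_partitions`, and `demo` are hypothetical. The 128 MB target is an assumption chosen to match the default of `spark.sql.files.maxPartitionBytes`, and the estimate is a plain ceiling division rather than Spark's actual split planning.

```python
def count_partitions(df):
    """Return the current number of partitions backing a DataFrame."""
    # DataFrame.rdd exposes the underlying RDD; getNumPartitions() is an RDD method.
    return df.rdd.getNumPartitions()


def estimate_partitions(total_bytes, target_partition_bytes=128 * 1024 * 1024):
    """Roughly estimate how many partitions of a target size cover total_bytes.

    Assumption: simple ceiling division, with a minimum of one partition.
    This is an illustration, not the formula Spark uses internally.
    """
    if total_bytes < 0 or target_partition_bytes <= 0:
        raise ValueError("total_bytes must be >= 0 and target_partition_bytes > 0")
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return max(1, (total_bytes + target_partition_bytes - 1) // target_partition_bytes)


def demo():
    """Hypothetical demo; requires a local PySpark installation."""
    # Imported here so the helpers above can be used without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[4]")
        .appName("partition-count-demo")
        .getOrCreate()
    )
    try:
        df = spark.range(0, 1000)
        print("initial partitions:", count_partitions(df))
        # repartition(8) requests 8 partitions through a full shuffle.
        print("after repartition(8):", count_partitions(df.repartition(8)))
    finally:
        spark.stop()
```

Calling `demo()` on a machine with PySpark installed prints the partition count before and after repartitioning; the initial count depends on the local parallelism, so it is not fixed here.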

From support.apple.com

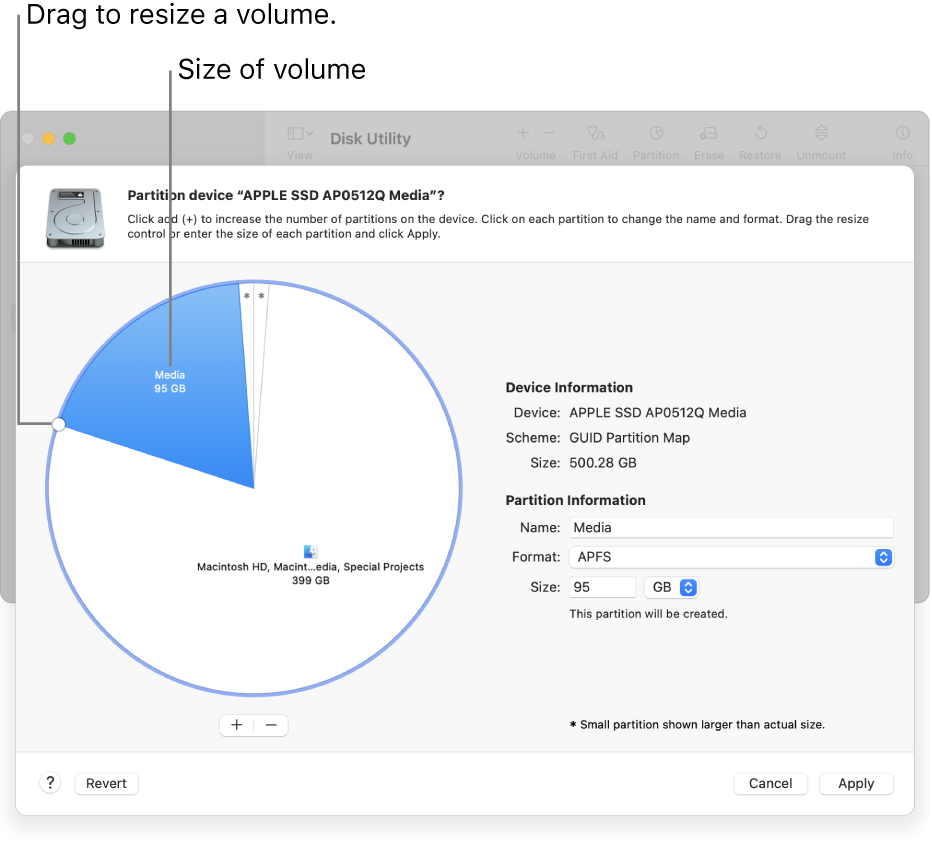

Partition a physical disk in Disk Utility on Mac Apple Support

From www.youtube.com

How to check what type of partition style your disk is using (MBR/GPT

From superuser.com

partitioning How do you read a HEX partition table? Super User

From medium.com

Guide to Selection of Number of Partitions while reading Data Files in

From www.partitionwizard.com

How Do I Create a Partition Using Diskpart MiniTool

From blog.csdn.net

[pySpark][Notes] Spark tutorial from the Spark official site, in IPython Notebook

From techvidvan.com

Apache Spark Partitioning and Spark Partition TechVidvan

From www.youtube.com

Number of Partitions in Dataframe Spark Tutorial Interview Question

From www.diskpart.com

AOMEI Partition Assistant Operation Notes

From www.partitionwizard.com

How to Check File System MiniTool Partition Wizard Tutorial

From www.minitool.com

How to Check Disk Partitions (4 Easy and Effective Methods)

From www.diskpart.com

Create Hard Disk Partitions Before Installing Windows 11/10/8/7

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples}

From www.minitool.com

How to Check Partition Style 4 Best Ways

From forums.opensuse.org

How to determine exactly the partitions where SUSE is installed

From www.itechtics.com

3 Ways To Check Partition Style In Windows 10 MBR Or GPT

From gearboot7.netlify.app

How To Check Partitions Gearboot7

From www.hdd-tool.com

How to check partition with NIUBI Partition Editor?

From www.techadvisor.com

How to partition Windows 10 Tech Advisor

From www.partitionwizard.com

How to Make Partition Repair in Windows 10/8/7 (Focus on 3 Cases

From ostechnix.com

How To List Disk Partitions In Linux OSTechNix

From www.partitionwizard.com

The Most Effective Method for GUID Partition Table Recovery MiniTool

From www.reddit.com

Guide to Determine Number of Partitions in Apache Spark r/apachespark

From www.easeus.com

How to Partition SSD Safely and Easily 2021 Guide EaseUS

From www.partitionwizard.com

How to Change Partition Serial Number MiniTool Partition Wizard Tutorial

From sdrhelp.syniti.com

Using Partitions for Refresh Replications

From www.easeus.com

Disk Partition How to Partition A Hard Drive You Must Know in 2024

From exohjgfcr.blob.core.windows.net

How To Partition A Hard Drive In Windows 10 After Installation at

From towardsdatascience.com

How to repartition Spark DataFrames Towards Data Science

From dxofadauo.blob.core.windows.net

Partition Key In Sql at Julio Bernhard blog

From dxoappiej.blob.core.windows.net

Partition Hard Drive When Installing Windows 10 at Eric Gamez blog

From www.partitionwizard.com

PC Hardware Check on Windows 10 Here Are Best Diagnostic Tools
